How I built a gesture-controlled game in Scratch using a neural network trained on my webcam

Teachable Machine + MIT Scratch

You might recall that quirky little dinosaur that pops up in Google Chrome whenever your internet connection goes down: a lonely creature running endlessly across a desert, jumping over cacti and ducking under birds. That simple game captured the imagination of millions.

Last week, I decided to recreate a version of that game. But I wanted to add a new twist: instead of using arrow keys to make the character jump, I wanted to control it with my hand gestures. Specifically, I wanted the character to jump when I raised my left hand in front of the camera.

I did not write a single line of code. Instead, I used a Scratch-like block-based programming platform built by MIT and connected it with an AI model trained using Google’s Teachable Machine.

Let me walk you through how it all came together and how you can build this in 20 minutes.

The “Playground”: Where the game is built

Rather than use the regular Scratch interface, I used a platform called Playground by MIT RAISE. It offers the same block-style interface as Scratch, with one important addition: it lets you import models from Teachable Machine and interact with real-world input such as video and gestures.

Playground: https://playground.raise.mit.edu/

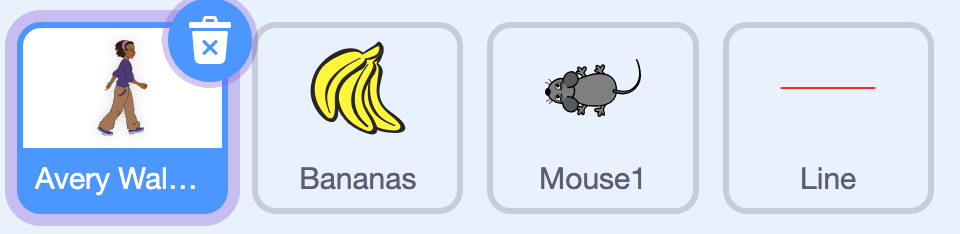

The interface lets you drag and drop sprites, set their positions, animate them, and define event-driven behaviors. I chose a walking character, a banana (which gives you points), and a mouse (which resets your score to zero if you touch it). The background was a simple platform created using a horizontal line.

The basic idea: the character keeps running forward. If you jump and grab the banana, you gain points. If you fail to clear the mouse and hit it, your score resets.

Teaching the AI: Gesture recognition with “Teachable Machine”

The real magic was not in Scratch. It was in teaching the AI to recognize when I raised my hand.

Teachable Machine: https://teachablemachine.withgoogle.com/

I opened Teachable Machine, selected the "Image Project" option, and defined two classes:

Jump (when I raised my left hand)

Stay (when my hand remained down)

For each class, I recorded about 100 webcam images. It took less than five minutes. Once the model was trained, I uploaded it to the cloud from Teachable Machine's export options and copied the shareable link.

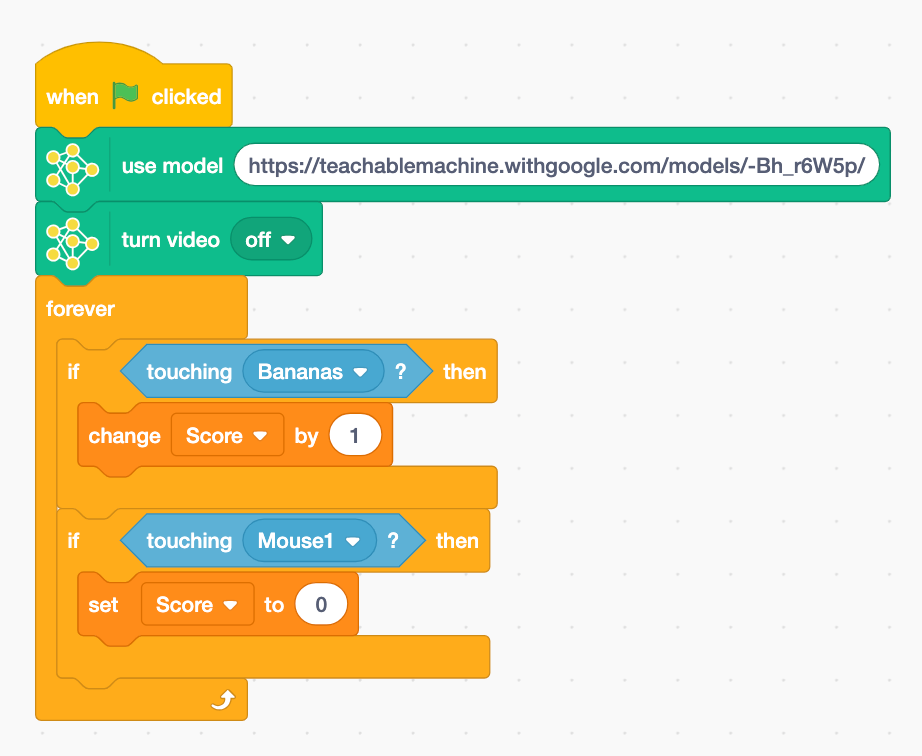

Back in Playground, I used the "Teachable Machine" extension to load the model. It immediately began reading the webcam input and could predict, with surprising accuracy, whether I was in "Jump" or "Stay" mode. I tested it live. It worked beautifully.

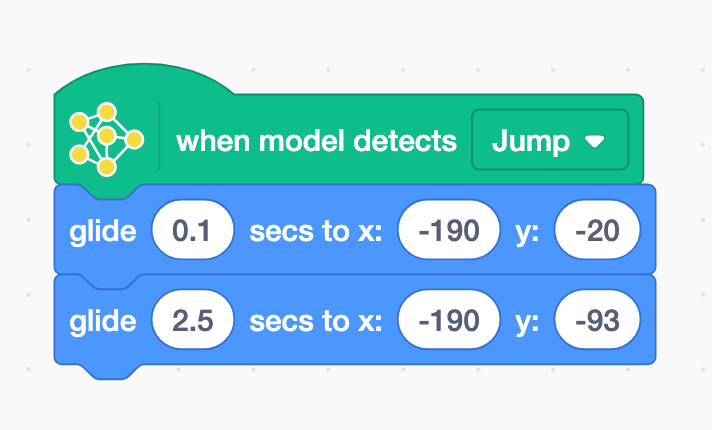
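Playground's Teachable Machine extension turns predictions into events for you, so none of this is needed to build the game. For the curious, here is a minimal Python sketch of the kind of logic such a trigger involves, assuming the model reports a probability for each class on every webcam frame. The `top_class` and `jump_triggers` helpers, the per-frame probability dictionaries, and the 0.8 confidence threshold are all hypothetical, not the extension's actual internals.

```python
# Hypothetical sketch: turning per-frame class probabilities into jump events.
# The class names "Jump" and "Stay" come from the article; the threshold and
# the probability dictionaries are illustrative assumptions.


def top_class(probs: dict[str, float]) -> str:
    """Return the label with the highest probability."""
    return max(probs, key=probs.get)


def jump_triggers(frames: list[dict[str, float]], threshold: float = 0.8) -> list[int]:
    """Return the frame indices where a new jump should start.

    A jump fires only on the transition into a confident "Jump" prediction,
    so keeping a hand raised for many frames triggers a single jump.
    """
    triggers = []
    was_jump = False
    for i, probs in enumerate(frames):
        is_jump = top_class(probs) == "Jump" and probs["Jump"] >= threshold
        if is_jump and not was_jump:
            triggers.append(i)
        was_jump = is_jump
    return triggers


if __name__ == "__main__":
    frames = [
        {"Jump": 0.05, "Stay": 0.95},  # hand down
        {"Jump": 0.90, "Stay": 0.10},  # hand goes up: new jump
        {"Jump": 0.95, "Stay": 0.05},  # still up: no new jump
        {"Jump": 0.10, "Stay": 0.90},  # hand down again
        {"Jump": 0.85, "Stay": 0.15},  # hand up again: new jump
    ]
    print(jump_triggers(frames))
```

Firing only on the Stay-to-Jump transition means that holding your hand up for a second produces one jump rather than one jump per frame.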

Making the Character Jump

When the model detected the "Jump" class, I told the character to glide upward on the screen, simulating a jump. I set the y coordinate to rise quickly (in 0.1 seconds) and fall back slowly (in 2.5 seconds), creating a sense of gravity.

This was done using simple motion blocks:

Glide to (x, y) when "Jump" is detected

Then glide back to the original position

No physics engine. Just well-tuned parameters and gravity faked with timing.
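To see what "gravity faked with timing" means numerically, here is a small Python sketch of the two glides. It assumes each glide moves the sprite in a straight line at constant speed, which is how Scratch's glide block behaves. The 0.1 s and 2.5 s durations come from the game; `GROUND_Y` and `PEAK_Y` are made-up stage coordinates.

```python
# Sketch of the "fake gravity" jump: a fast linear glide up, then a slow
# linear glide down. RISE_SECS and FALL_SECS match the article; GROUND_Y and
# PEAK_Y are hypothetical stage coordinates.

GROUND_Y = -100.0
PEAK_Y = 50.0
RISE_SECS = 0.1
FALL_SECS = 2.5


def glide(start: float, end: float, duration: float, t: float) -> float:
    """Linearly interpolate from start to end over `duration` seconds."""
    fraction = min(max(t / duration, 0.0), 1.0)  # clamp to the glide's span
    return start + (end - start) * fraction


def jump_y(t: float) -> float:
    """Character's y coordinate t seconds after the jump starts."""
    if t <= RISE_SECS:
        return glide(GROUND_Y, PEAK_Y, RISE_SECS, t)
    return glide(PEAK_Y, GROUND_Y, FALL_SECS, t - RISE_SECS)


if __name__ == "__main__":
    for t in (0.0, 0.05, 0.1, 0.6, 1.35, 2.6, 3.0):
        print(f"t={t:.2f}s  y={jump_y(t):.1f}")
```

A real jump traces a parabola and slows near the top. Two linear glides with very different durations are a cheap stand-in: a snap upward, then a long, slow descent that reads as hang time.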

Game logic

Now came the reward and penalty logic.

If the character touched the banana, a score variable would increase.

If the character touched the mouse, the score would reset to zero.

The challenge here was that the game kept increasing the score every frame the character was in contact with the banana, not just once per pass. This meant you could accidentally gain 9 points in one jump.

I decided to leave this in for now: it added a touch of unpredictability.
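The 9-point jumps are easy to reproduce on paper. Scratch runs its scripts at roughly 30 frames per second, so a touch lasting about 0.3 seconds spans 9 frames, and a rule that adds a point on every frame of contact awards 9 points. The Python sketch below contrasts that behavior with a once-per-touch rule; the contact pattern is invented for illustration. In blocks, one common way to get the second behavior is a "wait until not touching banana" step right after the score change, though that is a suggestion, not what this game does.

```python
# Hypothetical sketch contrasting per-frame scoring (the behavior described
# above) with once-per-touch scoring. Each list entry says whether the
# character touches the banana on that frame; the pattern is made up.


def score_every_frame(touching: list[bool]) -> int:
    """Mirror the quirk: +1 on every frame of contact."""
    return sum(1 for t in touching if t)


def score_once_per_touch(touching: list[bool]) -> int:
    """+1 only on the first frame of each new contact."""
    score = 0
    was_touching = False
    for t in touching:
        if t and not was_touching:
            score += 1
        was_touching = t
    return score


if __name__ == "__main__":
    # One jump whose arc overlaps the banana for 9 consecutive frames.
    frames = [False] * 5 + [True] * 9 + [False] * 5
    print(score_every_frame(frames), score_once_per_touch(frames))
```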

To simulate movement, I created a loop that continuously moved the banana and mouse to the left. Once they went off-screen, they were reset to the right, creating an infinite stream of approaching objects. The game never ended. You could only survive or fail.
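The endless stream of objects is a simple wraparound loop. Here is a Python sketch of one frame of it, assuming Scratch's standard stage, where x runs from -240 to 240. The `SPEED` value, the starting positions, and the `step` helper are hypothetical.

```python
# Sketch of the endless-scrolling loop: each frame, move every object left;
# once it passes the left edge, send it back to the right edge. The stage
# bounds follow Scratch's 480-pixel-wide stage; SPEED is hypothetical.

STAGE_LEFT = -240
STAGE_RIGHT = 240
SPEED = 10  # pixels per frame (hypothetical)


def step(xs: dict[str, int]) -> dict[str, int]:
    """Advance every object by one frame, wrapping any that leave the stage."""
    new_xs = {}
    for name, x in xs.items():
        x -= SPEED
        if x < STAGE_LEFT:
            x = STAGE_RIGHT  # reset to the right edge
        new_xs[name] = x
    return new_xs


if __name__ == "__main__":
    positions = {"banana": 240, "mouse": 0}
    for _ in range(60):
        positions = step(positions)
    print(positions)
```

Because both objects move at the same speed and reset to the same edge, the pattern of approaching objects repeats exactly; randomizing the reset position is one simple way to vary the spacing.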

Tuning, glitches, and workarounds

Because I was screen-recording while playing the game, my browser lagged and the jump detection was slightly delayed. Often the character would land on the mouse’s tail and the score would reset. So, I increased the jump height and slowed down the return to ground.

The game became more forgiving and easier to play.
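Why does a higher, slower jump help? With linear glides, the time the character spends above the mouse's top edge grows with both jump height and fall duration, which leaves more slack for a late detection. The Python sketch below computes that window. Every coordinate, the obstacle height, and the "tuned" settings are illustrative guesses, not the values used in the game.

```python
# Hypothetical sketch: how long a rise-then-fall linear jump stays above an
# obstacle's top edge. Assumes ground_y <= obstacle_top; all numbers in the
# example are illustrative.


def time_above(ground_y: float, peak_y: float, rise_secs: float,
               fall_secs: float, obstacle_top: float) -> float:
    """Seconds during which y > obstacle_top over the whole jump."""
    if peak_y <= obstacle_top:
        return 0.0  # the jump never clears the obstacle
    span = peak_y - ground_y
    # Fraction of each linear glide spent above the obstacle's top edge.
    clearance = min((peak_y - obstacle_top) / span, 1.0)
    return clearance * (rise_secs + fall_secs)


if __name__ == "__main__":
    original = time_above(-100, 50, 0.1, 2.5, obstacle_top=-70)
    tuned = time_above(-100, 100, 0.1, 3.5, obstacle_top=-70)
    print(f"original: {original:.2f}s  tuned: {tuned:.2f}s")
```

A wider window means a jump that starts a beat late because of browser lag can still carry the character over the mouse instead of landing on its tail.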

Of course, this is just the beginning. You could randomize object spacing, add background music, build levels, or even spawn different obstacles based on your accuracy.

But the point of this exercise was not perfection. It was a quiet joy: building an intelligent game without writing a single line of code, but with enough moving parts to appreciate how logic, AI, and interaction all come together.

YouTube lecture

You can see the whole thing built step by step in the video below.

Want to learn AI/ML live from us?

Check this out: https://vizuara.ai/live-ai-courses/