What Netflix and Swiggy Teach Us About Better ML Communication

ML projects fail more often from miscommunication than from bad modeling. Learn how companies succeed by translating models into meaningful conversations.

Table of Contents

1. Why ML Projects Fail to Deliver

2. The Netflix Analogy: Talking in ROC-AUC vs Real Buzz

3. Mood Indigo Fest: Asking the Right Questions

4. Swiggy Combos: Unguided vs Guided Planning

5. Drawing Flowcharts Like We Did in School

6. Spotify MVP: A Case of Listening Broadly, Executing Sharply

7. Subject Matter Experts: Who’s Your Champion?

8. Gantt Charts & Milestones: Meetings That Matter

9. The Drama Skit Analogy: Before You Go Live

10. Final Takeaways

1. Why ML Projects Fail to Deliver

Miscommunication is the silent killer of most ML projects. Too often, teams obsess over model performance while neglecting alignment with business goals. Clarity on why the project matters is usually missing. This leads to misaligned expectations, scope creep, and poor adoption even if the model performs well.

Key reasons projects fail:

No shared understanding of goals

Overengineering without business input

Ignoring user context

Takeaway: Success lies more in shared understanding than in superior architecture.

2. The Netflix Analogy: Talking in ROC-AUC vs Real Buzz

You might report a 0.91 ROC-AUC, but your stakeholder really wants to know:

"Which 5 shows should we promote next?"

Q: Why is this a problem?

Because we speak in metrics, while the business speaks in outcomes.

What to do instead:

Translate metrics into user value.

Replace ROC-AUC with tangible predictions: “These shows will likely trend among this audience.”

Align every performance metric with a business decision.
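
Here is a minimal sketch of that translation in Python. It assumes the model outputs a per-show probability of trending. The show titles, audience segments, scores, and function names are all made up for illustration and are not taken from any real Netflix system. The idea is that the same predictions behind a ROC-AUC number can be turned into a ranked shortlist a stakeholder can act on.

```python
from typing import NamedTuple


class ShowScore(NamedTuple):
    title: str          # hypothetical show title
    audience: str       # hypothetical audience segment
    p_trending: float   # model's predicted probability of trending


def top_shows_to_promote(scores: list[ShowScore], k: int = 5) -> list[ShowScore]:
    """Return the k shows with the highest predicted probability of trending."""
    return sorted(scores, key=lambda s: s.p_trending, reverse=True)[:k]


def as_business_sentence(s: ShowScore) -> str:
    """Phrase one prediction as an outcome instead of a metric."""
    return f"'{s.title}' will likely trend among {s.audience} ({s.p_trending:.0%} predicted)."


# Illustrative data only; not real titles or model outputs.
scores = [
    ShowScore("Show A", "young adults", 0.82),
    ShowScore("Show B", "families", 0.35),
    ShowScore("Show C", "teens", 0.91),
    ShowScore("Show D", "young adults", 0.67),
    ShowScore("Show E", "families", 0.74),
    ShowScore("Show F", "teens", 0.12),
    ShowScore("Show G", "young adults", 0.58),
]

for s in top_shows_to_promote(scores):
    print(as_business_sentence(s))
```

The stakeholder never sees the metric here, only the five shows and who they are likely to resonate with. The metric still matters to the team, as evidence that the ranking can be trusted.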

3. Mood Indigo Fest: Asking the Right Questions

Suppose you're tasked with building a website for Mood Indigo. You assume it needs only event schedules. But stakeholders also need sponsor visibility, ticketing, merchandise sales, and hotel booking support.

This is a classic mistake: jumping to solutions without clarifying the problem.

Ask early:

Why is this product being built?

What are the expectations across departments?

Who are the users and what are their pain points?

How will success be measured?

Only by exploring the full scope can you deliver something valuable.

Source: moodi.org

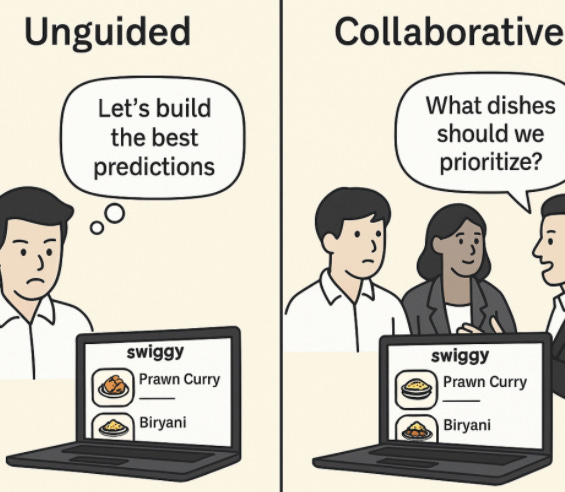

4. Swiggy Combos: Unguided vs Guided Planning

In an unguided meeting, chaos prevails: data scientists talk GPU time, designers focus on UI elements, and product managers chase deadlines. No one is aligned.

A guided planning session asks these four questions:

Why are we building this?

What will success look like?

How could this fail?

When will we deliver which part?

Result: Teams align around priorities. The Swiggy “combo recommendation” team, for example, succeeded not just because of their models, but because they structured conversations well.

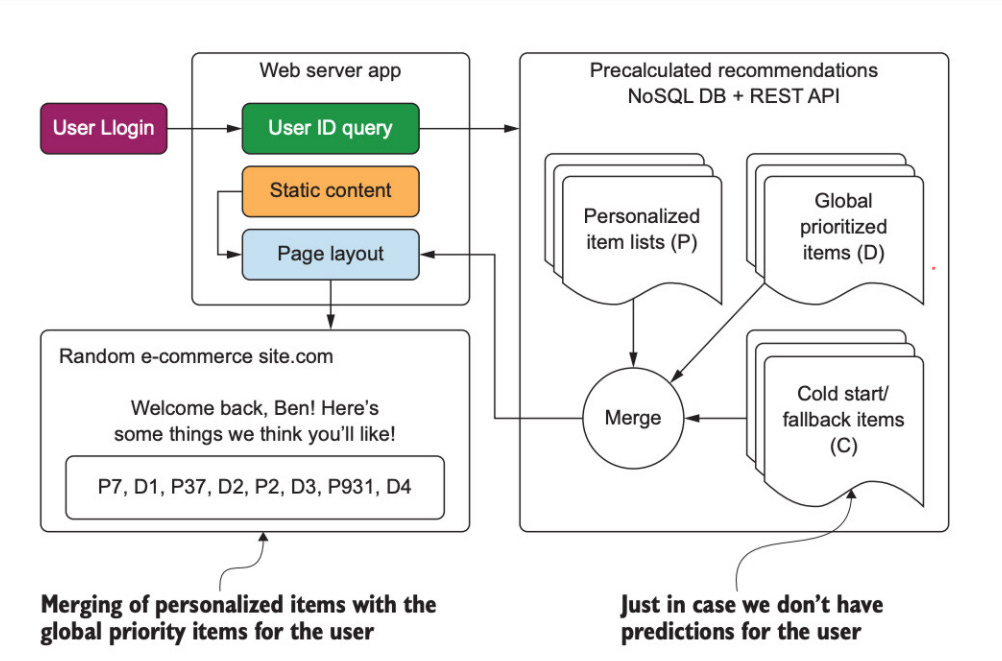

5. Drawing Flowcharts Like We Did in School

Remember the flowcharts we drew for making noodles or brushing teeth in school?

Apply the same idea to ML pipelines.

Benefits:

Makes complex systems understandable

Helps cross-functional teams visualize dependencies

Identifies bottlenecks before they occur

Source: Machine Learning Engineering in Action, by Ben Wilson

Tip: If your ML system isn’t simple enough to be drawn, it’s probably too complex to manage.
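
If a whiteboard is not handy, the same flowchart can be written down as data. The sketch below assumes a hypothetical six-step pipeline (the step names are illustrative, not from the book) and uses Python's standard `graphlib` module to put the steps in an order that respects their dependencies.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps it depends on (hypothetical pipeline).
pipeline = {
    "ingest_data": set(),
    "clean_data": {"ingest_data"},
    "build_features": {"clean_data"},
    "train_model": {"build_features"},
    "evaluate_model": {"train_model", "build_features"},
    "deploy_model": {"evaluate_model"},
}

# static_order() yields every step after all of its dependencies.
order = list(TopologicalSorter(pipeline).static_order())

for i, step in enumerate(order, 1):
    deps = ", ".join(sorted(pipeline[step])) or "nothing"
    print(f"{i}. {step} (needs: {deps})")
```

If someone accidentally draws a loop, for example training that depends on deployment, `TopologicalSorter` raises a `CycleError`. That is a useful signal that the diagram, and probably the plan, needs another look.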

6. Spotify MVP: A Case of Listening Broadly, Executing Sharply

Spotify’s “Discover Weekly” started with one goal: recommend 30 songs per user every week. No frills. No new artist push. No personalization layers initially.

They succeeded because they:

Focused on one clear user value

Released early, learned fast

Iterated based on user feedback

Source: Spotify

Lesson: Don’t chase perfection in V1. Ship something specific, useful, and testable.

7. Subject Matter Experts: Who’s Your Champion?

You can’t consult the CEO every time a scope decision arises. The real guide is your Subject Matter Expert (SME)—the person who knows the domain inside out.

Role of an SME:

Validates if the model solves a real problem

Bridges the gap between business and data

Helps prioritize features and refine scope

Treat SMEs as:

Trusted advisors

Co-owners of the solution

Decision filters when trade-offs arise

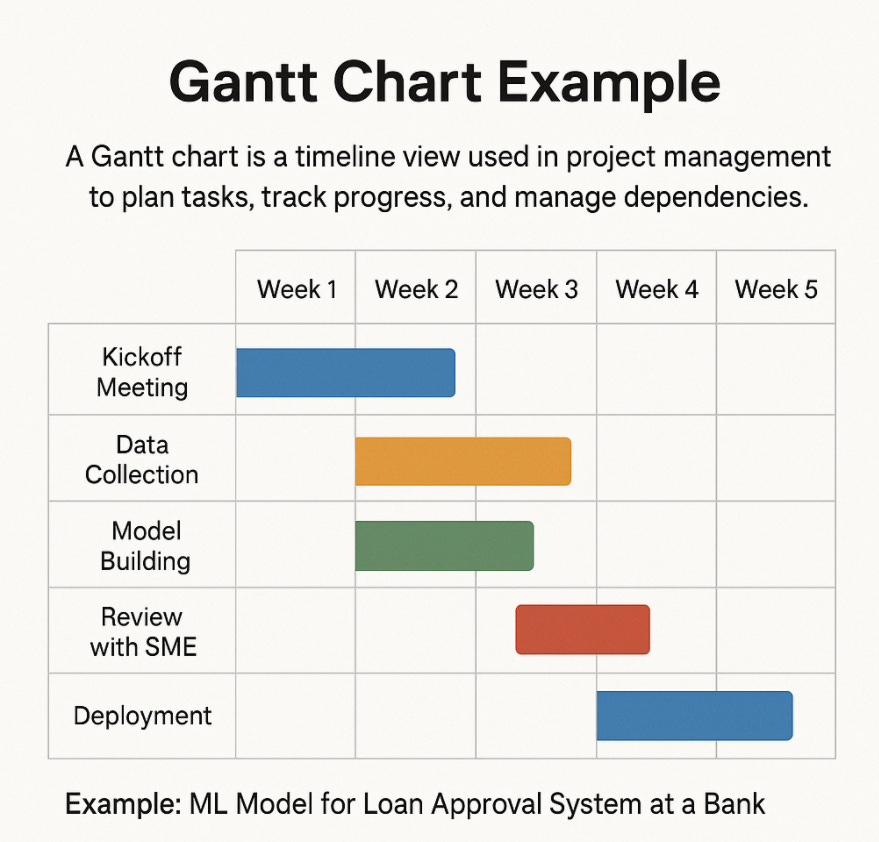

8. Gantt Charts & Milestones: Meetings That Matter

Gantt charts aren't just project management fluff—they’re clarity tools.

They help:

Map timeframes to outcomes

Schedule meaningful reviews

Catch misalignments early

Key difference from daily standups:

Milestone-based reviews are not about “what we did,” but “what we proved.”

Use milestones to:

Validate functionality

Demo real progress

Address delays with data
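
One lightweight way to keep reviews focused on "what we proved" is to record that claim next to each milestone. The sketch below is only an illustration: the `Milestone` fields, dates, and milestone names are assumptions, not a standard project-management schema.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Milestone:
    name: str
    due: date
    proves: str                  # what the review should demonstrate
    demonstrated: bool = False   # set to True once the claim was shown in a review


def overdue(milestones: list[Milestone], today: date) -> list[Milestone]:
    """Milestones past their due date whose claim has not been demonstrated yet."""
    return [m for m in milestones if not m.demonstrated and m.due < today]


# Hypothetical plan for illustration.
plan = [
    Milestone("Baseline model", date(2025, 1, 15), "beats the current heuristic offline", True),
    Milestone("Internal demo", date(2025, 2, 1), "returns recommendations end to end"),
    Milestone("UAT sign-off", date(2025, 3, 1), "SMEs accept results on real cases"),
]

review_day = date(2025, 2, 10)
for m in overdue(plan, review_day):
    days_late = (review_day - m.due).days
    print(f"{m.name}: {days_late} days late, still needs to show that it {m.proves}")
```

A list like this gives the review a concrete agenda: each late item names the evidence that is still missing, which makes it easier to discuss delays with data instead of opinions.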

9. The Drama Skit Analogy: Before You Go Live

Deploying a model is like staging a play.

You don’t just hand over the script. You rehearse, test the lighting, prepare backups, and only then do you perform.

Similarly, your ML model needs:

User Acceptance Testing (UAT)

Launch-readiness checklists

Fallback mechanisms in production

Lesson: Don’t assume deployment is the end. It’s the final performance, and you need rehearsals first.
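
A fallback mechanism can be as simple as a wrapper that serves a safe default when the model call fails. The sketch below is an assumption-laden illustration: `predict_with_fallback`, `broken_model`, and the popular-items list are hypothetical names, and a real system would likely also handle timeouts and monitoring.

```python
import logging
from typing import Callable

logger = logging.getLogger(__name__)


def predict_with_fallback(
    model_predict: Callable[[dict], list[str]],
    features: dict,
    fallback: list[str],
) -> list[str]:
    """Return the model's prediction, or the fallback if the model call raises."""
    try:
        return model_predict(features)
    except Exception:
        # Log the failure so it can be investigated, but keep serving users.
        logger.exception("Model call failed; serving fallback")
        return fallback


def broken_model(features: dict) -> list[str]:
    """Stand-in for a model server that is down (for rehearsal purposes)."""
    raise RuntimeError("model server unavailable")


# Hypothetical safe default: a precomputed list of popular items.
POPULAR_ITEMS = ["item_1", "item_2", "item_3"]

print(predict_with_fallback(broken_model, {"user_id": 42}, POPULAR_ITEMS))
```

Rehearsing with a deliberately broken model, as above, is the deployment equivalent of checking that the understudy knows the lines.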

10. Final Takeaways

Here’s a distilled checklist to keep your ML projects on track:

Meet with intent: clarify before building

Define success in business terms

Communicate metrics as outcomes

Validate MVPs before scaling

Involve SMEs early and often

Use visuals to reduce misinterpretation

Treat planning as architecture, not just admin

Launch only when basic reliability is ensured

Good ML in production isn’t just about science or math. It’s about orchestration, empathy, and execution.

To learn more about this, please check the video.

In the next article, we will dive deeper into communication and logistics in ML projects.
