UNet and its Family: UNet++, Residual UNet, and Attention UNet

The architecture with 118k+ citations that transformed image segmentation

When we talk about deep learning for image segmentation, one architecture repeatedly comes up as a milestone - the UNet. Originally designed for biomedical image segmentation, UNet has since become a backbone for tasks ranging from medical diagnosis to satellite imagery analysis. Over time, researchers have proposed several improvements to the original design, leading to variants like UNet++, Residual UNet, and Attention UNet.

In this article, let us go step by step through the timeline, understand the motivation behind each model, and compare their use cases and contributions.

1. The Original UNet (2015)

Paper: U-Net: Convolutional Networks for Biomedical Image Segmentation by Olaf Ronneberger et al. (MICCAI 2015)

Citations: 118,000+ (as of 2025)

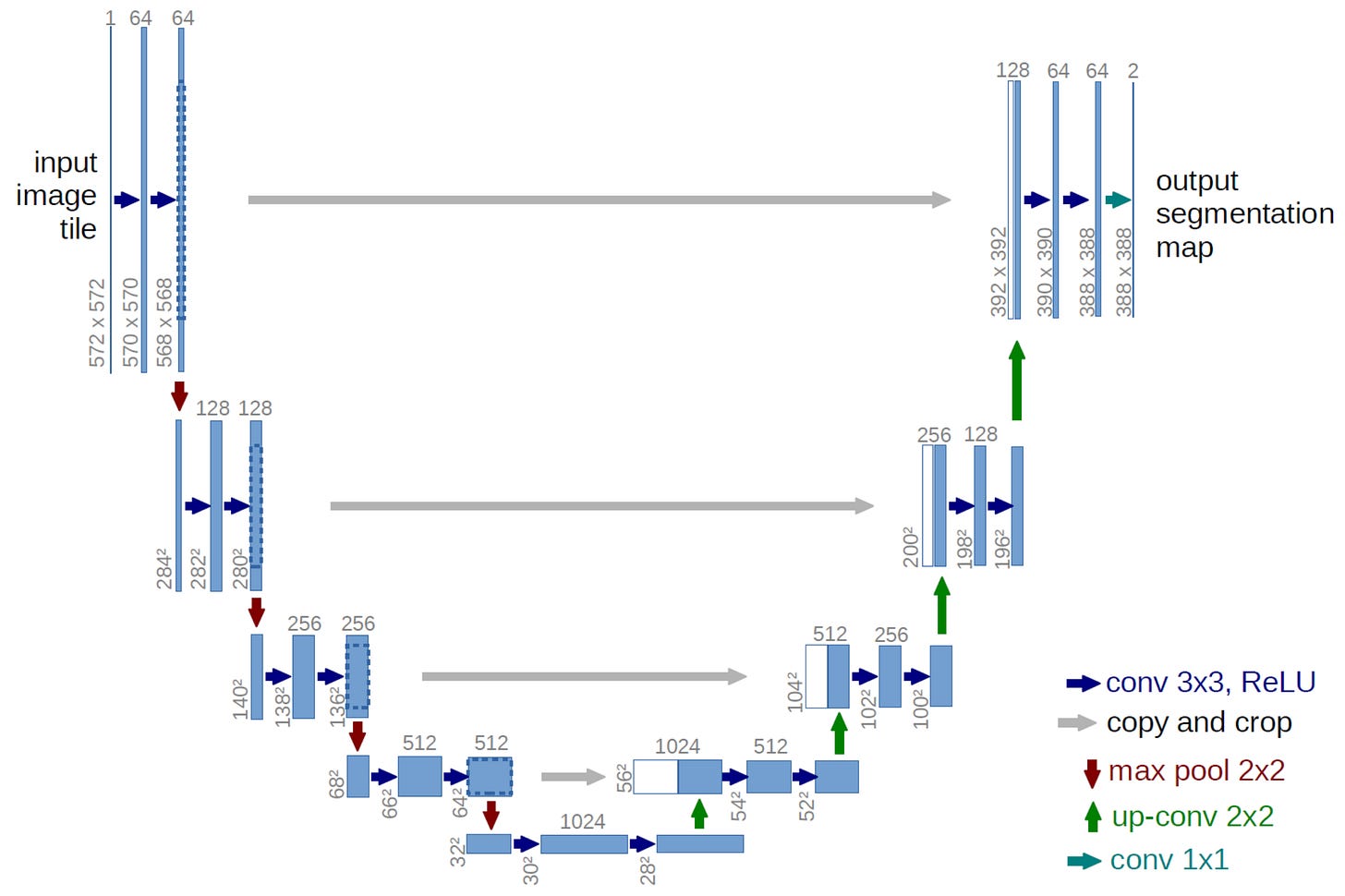

The UNet architecture was proposed in 2015 for medical image segmentation, specifically cell and tissue segmentation. Its innovation was in combining the encoder-decoder architecture with skip connections.

The encoder progressively downsamples the image, learning feature representations.

The decoder upsamples back to the original resolution, producing a segmentation map.

The skip connections link corresponding layers in the encoder and decoder: encoder feature maps are concatenated with the upsampled decoder features, allowing fine-grained spatial details to flow to the reconstruction step.
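To make the three pieces concrete, here is a minimal NumPy sketch of the data flow only. It is not the paper's implementation: the `conv_block` helper is a hypothetical stand-in that uses random 1×1 channel mixing where a real UNet would use learned 3×3 convolutions, and the names `tiny_unet`, `base_channels`, and `depth` are assumptions chosen for illustration.

```python
import numpy as np

def conv_block(x, out_channels, rng):
    """Stand-in for a learned conv block: random 1x1 channel mixing + ReLU."""
    w = rng.standard_normal((out_channels, x.shape[0])) * 0.1
    return np.maximum(np.einsum("oc,chw->ohw", w, x), 0.0)

def downsample(x):
    """2x2 max pooling on a (C, H, W) array; H and W must be even."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def upsample(x):
    """Nearest-neighbour 2x upsampling on a (C, H, W) array."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def tiny_unet(image, base_channels=8, depth=3, num_classes=2, seed=0):
    rng = np.random.default_rng(seed)
    skips = []
    x = image
    # Encoder: conv, remember the features for the skip, then halve resolution.
    for level in range(depth):
        x = conv_block(x, base_channels * 2**level, rng)
        skips.append(x)
        x = downsample(x)
    # Bottleneck at the coarsest resolution.
    x = conv_block(x, base_channels * 2**depth, rng)
    # Decoder: upsample, concatenate the matching encoder features, conv.
    for level in reversed(range(depth)):
        x = upsample(x)
        x = np.concatenate([skips[level], x], axis=0)  # the skip connection
        x = conv_block(x, base_channels * 2**level, rng)
    # Final 1x1 projection to per-pixel class scores (no activation).
    w_out = rng.standard_normal((num_classes, x.shape[0])) * 0.1
    return np.einsum("oc,chw->ohw", w_out, x)

image = np.random.default_rng(1).standard_normal((1, 32, 32))
logits = tiny_unet(image)
print(logits.shape)  # (2, 32, 32)
```

The output has the same height and width as the input, with one channel per class. The concatenation line is the part that distinguishes UNet from a plain encoder-decoder.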

Use cases: Medical image segmentation (tumors, organs, cells), satellite imagery, crack detection, road segmentation in self-driving.

Why it mattered: UNet showed that high-quality segmentation is achievable with limited training data, helped by heavy data augmentation, making it well suited to medical applications where annotated data is scarce.

2. UNet++ (2018)

Paper: UNet++: A Nested U-Net Architecture for Medical Image Segmentation by Zhou et al. (DLMIA Workshop, MICCAI 2018)

Citations: 6,000+

UNet++ was introduced as a refinement of UNet. Instead of simple skip connections, it introduced nested dense skip pathways between encoder and decoder. This meant that rather than directly copying encoder features into the decoder, they were progressively refined through intermediate convolution blocks.

Key ideas:

Nested skip connections to bridge the semantic gap between encoder and decoder.

Better gradient flow for training deeper models.

More accurate segmentation boundaries.

Use cases: Primarily medical imaging tasks where accuracy of boundaries is crucial (e.g., tumor segmentation, polyp detection).

Difference from UNet:

UNet: direct skip connections.

UNet++: redesigned skip pathways with intermediate convolution layers for feature refinement.
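The nested pathways can be pictured as a triangular grid of nodes, where node (i, j) sits at depth i and is the j-th block along that level's skip pathway. The sketch below is a rough NumPy illustration under simplifying assumptions: `conv_block` is a hypothetical stand-in (random 1×1 channel mixing instead of learned 3×3 convolutions), and `unetpp_features` and `channels` are illustrative names, not the authors' code.

```python
import numpy as np

def conv_block(x, out_channels, rng):
    """Stand-in for a learned conv block: random 1x1 channel mixing + ReLU."""
    w = rng.standard_normal((out_channels, x.shape[0])) * 0.1
    return np.maximum(np.einsum("oc,chw->ohw", w, x), 0.0)

def downsample(x):
    """2x2 max pooling on a (C, H, W) array; H and W must be even."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def upsample(x):
    """Nearest-neighbour 2x upsampling on a (C, H, W) array."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def unetpp_features(image, channels=(8, 16, 32, 64), seed=0):
    rng = np.random.default_rng(seed)
    depth = len(channels) - 1
    nodes = {}
    # Column j = 0 is the ordinary encoder.
    x = image
    for i, ch in enumerate(channels):
        if i > 0:
            x = downsample(x)
        x = conv_block(x, ch, rng)
        nodes[(i, 0)] = x
    # Columns j >= 1 are the nested skip pathways.
    for j in range(1, depth + 1):
        for i in range(depth - j + 1):
            # All earlier nodes on the same level (dense connections) ...
            same_level = [nodes[(i, k)] for k in range(j)]
            # ... plus the upsampled node from one level deeper.
            from_below = upsample(nodes[(i + 1, j - 1)])
            merged = np.concatenate(same_level + [from_below], axis=0)
            nodes[(i, j)] = conv_block(merged, channels[i], rng)
    return nodes

image = np.random.default_rng(1).standard_normal((1, 32, 32))
nodes = unetpp_features(image)
print(len(nodes), nodes[(0, 3)].shape)  # 10 (8, 32, 32)
```

With four levels the grid has 10 nodes, and the full-resolution node (0, 3) at the end of the top pathway is the one a segmentation head would read from. A plain UNet corresponds to keeping only the encoder column and one decoder node per level.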

3. Residual UNet (2017–2018)

Paper: Residual U-Net for Automatic Brain Tumor Segmentation (Zhang et al., 2018, arXiv)

Citations: ~1,500

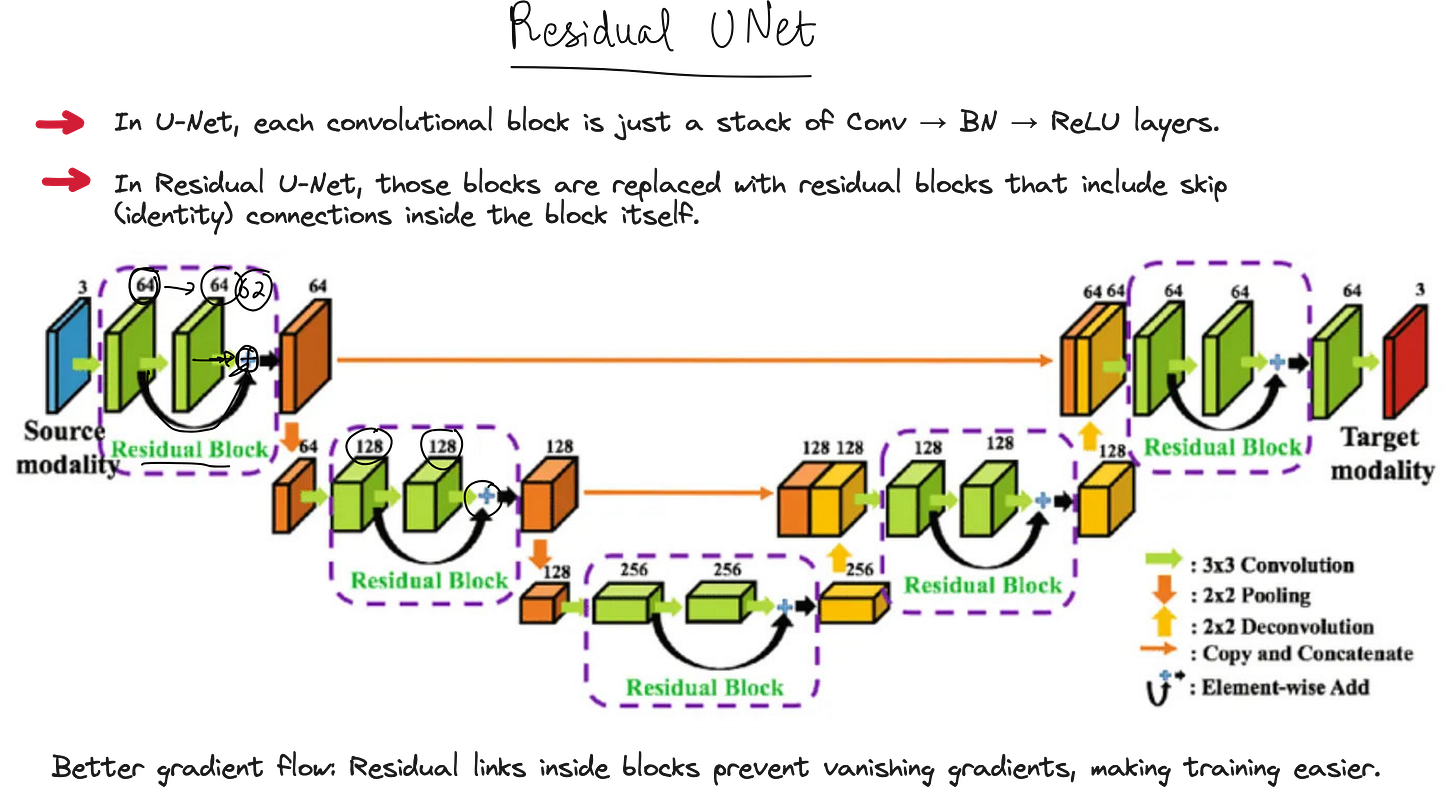

Around the same time, the deep learning community was embracing residual learning thanks to ResNet (2015). Researchers applied the same principle to UNet. The idea was simple: instead of plain convolutional layers, use residual blocks (convolutions plus an identity shortcut within the block) in both encoder and decoder.

Why residuals help:

They alleviate the vanishing gradient problem.

They enable deeper UNet models to be trained.

They improve convergence speed and stability.

Use cases: Brain tumor segmentation, cardiac MRI analysis, lung lesion detection, and other tasks requiring deep models for subtle features.

Difference from UNet:

Standard UNet has plain convolution blocks.

Residual UNet uses residual blocks, making it more robust for deeper networks.
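The difference between the two block types fits in a few lines. The following is a minimal NumPy sketch, assuming 1×1 convolutions in place of the usual 3×3 ones and omitting normalization; `plain_block` and `residual_block` are illustrative names, and published Residual UNet variants differ in where they place the activation and normalization.

```python
import numpy as np

def conv1x1(x, weight):
    """1x1 convolution on a (C, H, W) array: a per-pixel linear map over channels."""
    return np.einsum("oc,chw->ohw", weight, x)

def relu(x):
    return np.maximum(x, 0.0)

def plain_block(x, w1, w2):
    """Standard UNet-style block: conv -> ReLU -> conv -> ReLU."""
    return relu(conv1x1(relu(conv1x1(x, w1)), w2))

def residual_block(x, w1, w2):
    """Residual block: y = ReLU(x + F(x)), with F = conv -> ReLU -> conv."""
    residual = conv1x1(relu(conv1x1(x, w1)), w2)
    return relu(x + residual)  # identity shortcut added before the activation

# With all-zero weights the plain block erases its input,
# while the residual block still passes it through unchanged.
x = np.abs(np.random.default_rng(0).standard_normal((4, 8, 8)))
w1 = np.zeros((6, 4))
w2 = np.zeros((4, 6))
print(np.allclose(plain_block(x, w1, w2), 0.0))   # True
print(np.allclose(residual_block(x, w1, w2), x))  # True
```

The zero-weight case is an extreme, but it shows why the shortcut helps: the block only has to learn a correction on top of the identity, so signal and gradients always have a direct path through the network.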

4. Attention UNet (2018)

Paper: Attention U-Net: Learning Where to Look for the Pancreas by Oktay et al. (MIDL 2018)

Citations: 5,500+

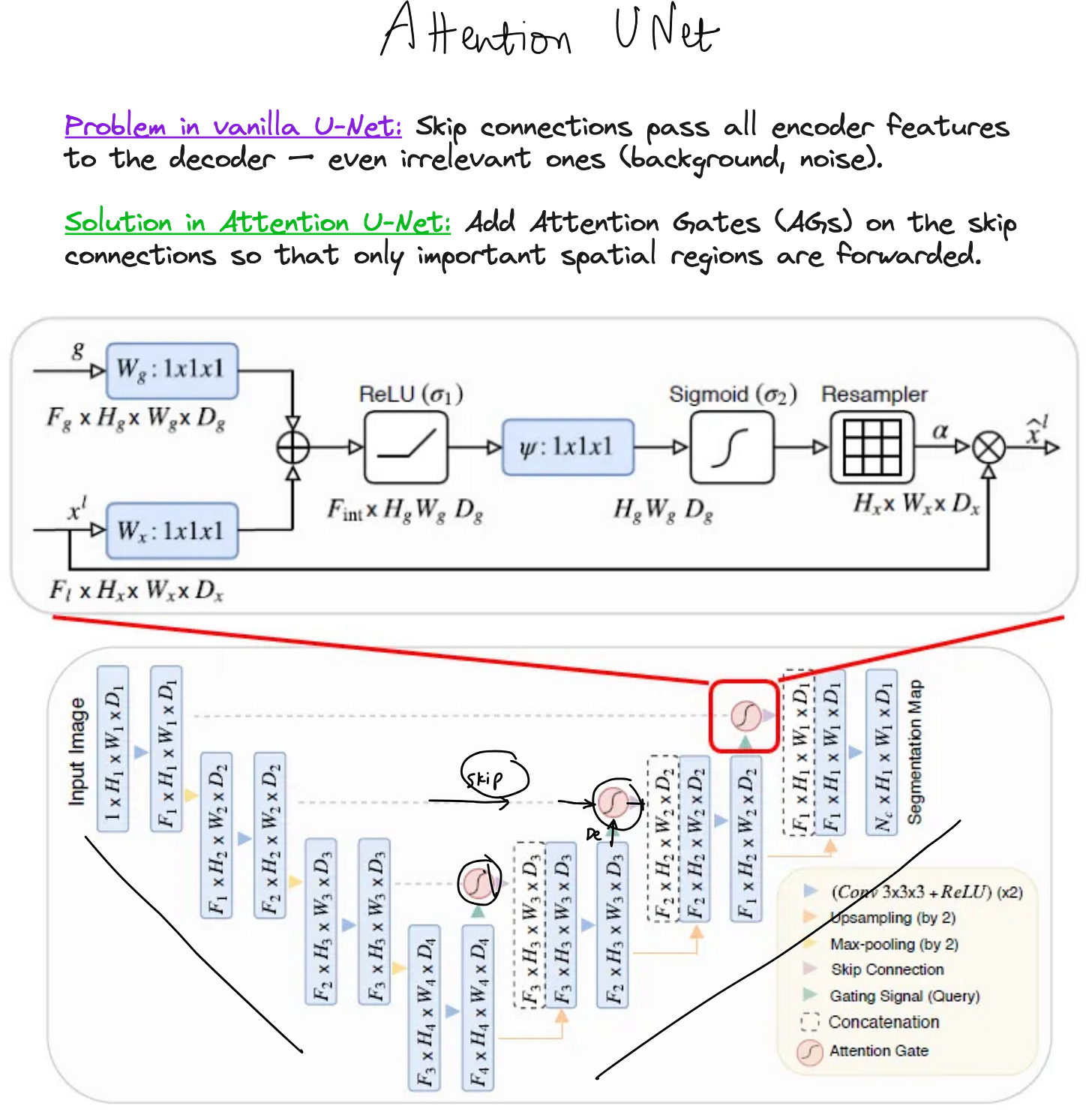

Another powerful idea added to UNet was attention mechanisms. Attention UNet introduced attention gates in the skip connections. Instead of blindly passing all encoder features to the decoder, the network learns to focus on the most relevant features for segmentation.

Key ideas:

Attention gates suppress irrelevant background and highlight important structures.

Dynamic feature selection instead of static skip connections.

Improves performance on organs or structures that occupy small parts of the image.

Use cases: Medical imaging tasks where target structures are small or difficult to distinguish (e.g., pancreas segmentation, liver lesions, fetal ultrasound).

Difference from UNet:

UNet: passes all encoder features via skip connections.

Attention UNet: passes selectively filtered features using attention gates.

How an attention gate works: each attention gate (AG) takes two inputs:

Encoder feature map (xˡ) = carries spatial details.

Decoder gating signal (g) = carries context of what is currently important.

Both inputs go through 1×1 convolutions that project them into a common intermediate space.

-> The two projections are added and passed through ReLU (σ₁).

-> Another 1×1 convolution followed by a sigmoid (σ₂) produces an attention coefficient α between 0 and 1 for each spatial location. Where the resolutions differ, α is resampled to the size of the encoder feature map.

-> This α acts as a mask that multiplies the encoder features:

If α ≈ 1 → features are important → kept.

If α ≈ 0 → features are irrelevant → suppressed.

-> The weighted features are then passed along the skip connection.

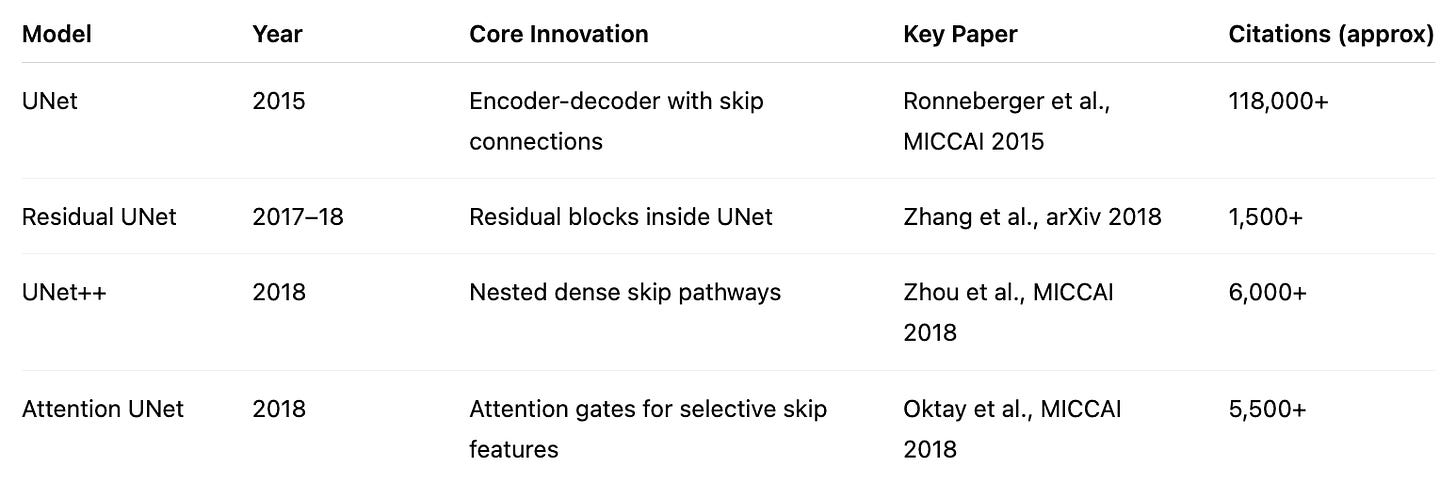
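The gate described above can be sketched in NumPy as follows. This is a simplified illustration, not the authors' code: it assumes the gating signal g has already been brought to the same spatial resolution as the encoder features (so no resampling step is shown), it leaves out biases and normalization, and the names `attention_gate`, `w_x`, `w_g`, and `w_psi` are chosen here for illustration.

```python
import numpy as np

def conv1x1(x, weight):
    """1x1 convolution on a (C, H, W) array: a per-pixel linear map over channels."""
    return np.einsum("oc,chw->ohw", weight, x)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def attention_gate(x, g, w_x, w_g, w_psi):
    """x: encoder features (C_x, H, W); g: gating signal (C_g, H, W)."""
    # Project both inputs into a shared intermediate space, add, apply ReLU (sigma_1).
    q = np.maximum(conv1x1(x, w_x) + conv1x1(g, w_g), 0.0)
    # Collapse to one channel and squash into (0, 1) with a sigmoid (sigma_2).
    alpha = sigmoid(conv1x1(q, w_psi))  # shape (1, H, W)
    # Broadcast alpha over the channels to mask the encoder features.
    return alpha * x, alpha

rng = np.random.default_rng(0)
c_x, c_g, c_int, h, w = 16, 32, 8, 12, 12
x = rng.standard_normal((c_x, h, w))   # encoder feature map
g = rng.standard_normal((c_g, h, w))   # decoder gating signal
w_x = rng.standard_normal((c_int, c_x)) * 0.1
w_g = rng.standard_normal((c_int, c_g)) * 0.1
w_psi = rng.standard_normal((1, c_int)) * 0.1

gated, alpha = attention_gate(x, g, w_x, w_g, w_psi)
print(gated.shape, alpha.shape)  # (16, 12, 12) (1, 12, 12)
```

Note that α has a single channel: each pixel gets one coefficient, which is shared across all encoder channels at that location. In a trained network the weights are learned, so α ends up close to 1 over the target structure and close to 0 over background.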

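Timeline at a Glance

2015: UNet (Ronneberger et al.)

2017–2018: Residual UNet

2018: UNet++ (Zhou et al.)

2018: Attention UNet (Oktay et al.)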

Comparing the Variants

UNet: Simple, effective, works well with limited data.

Residual UNet: Better for deep models, more stable training.

UNet++: Improved feature refinement, excellent for precise boundary segmentation.

Attention UNet: Adds intelligence to feature selection, ideal for small or complex targets.

Where are these used today?

Medical imaging remains the most dominant domain - segmentation of brain tumors, liver, lungs, pancreas, cells, and polyps.

Remote sensing - segmentation of buildings, roads, vegetation in aerial or satellite imagery.

Industrial inspection - detecting cracks, defects, and anomalies.

Autonomous driving - road, lane, and obstacle segmentation.

Interestingly, UNet has also found its way into generative AI models like Stable Diffusion (2022), where a UNet acts as the core denoising network in the diffusion process, showing how an architecture born in biomedical imaging has become central to modern generative models.

Use cases

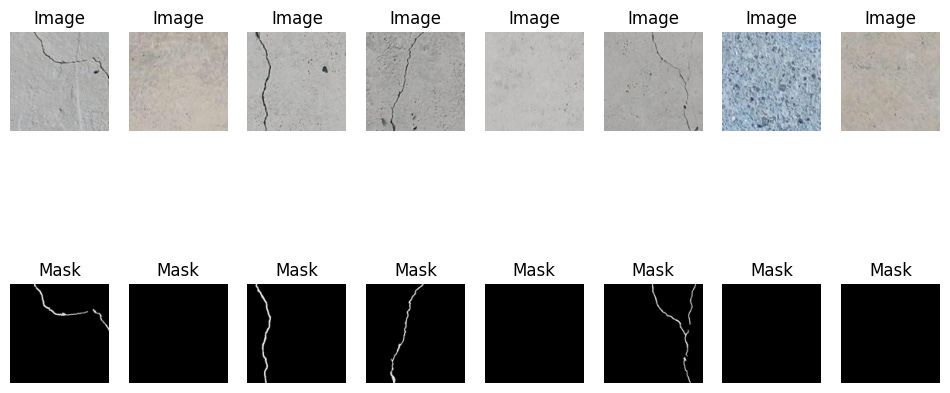

Cement crack segmentation

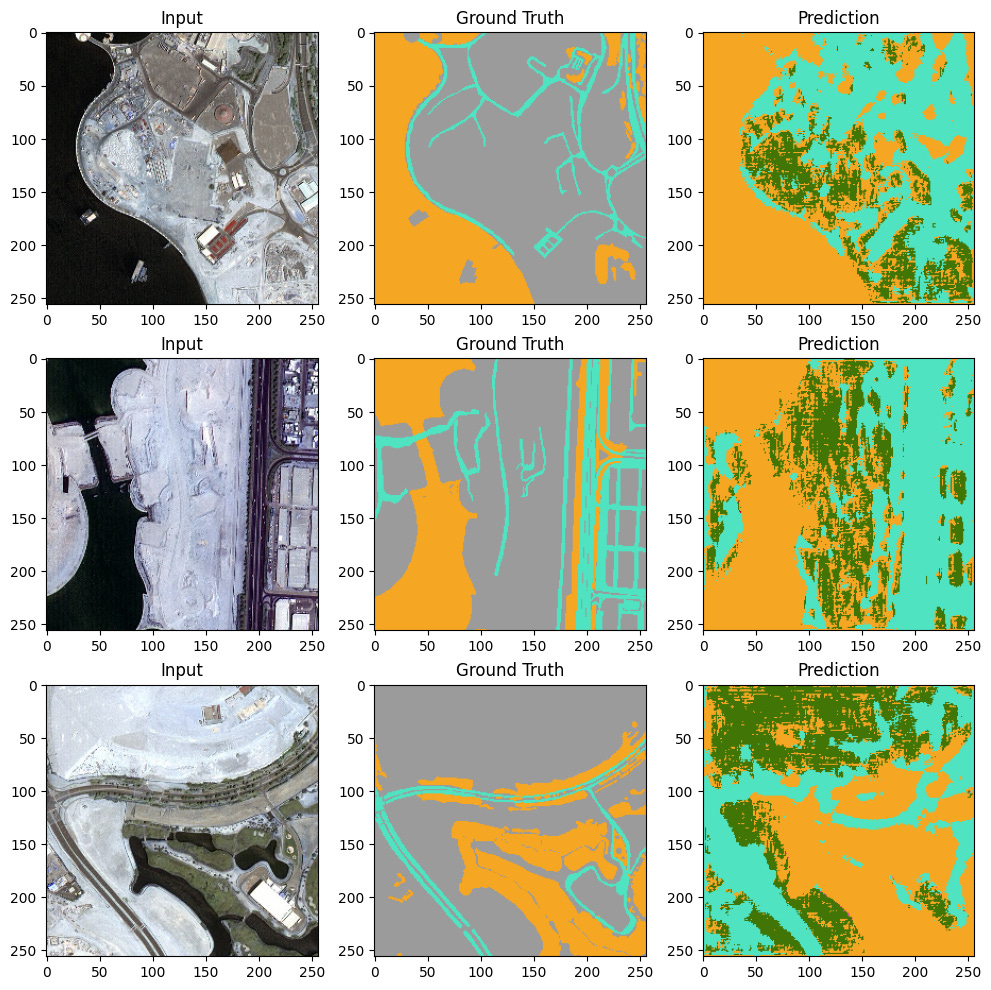

Aerial image segmentation

Closing Thoughts

The UNet family shows how a single architectural idea can evolve and adapt to different needs. The original UNet opened the door for efficient segmentation, and its successors added depth, refinement, and intelligence. Depending on the application, one might choose UNet for simplicity, Residual UNet for deeper models, UNet++ for precise boundaries, or Attention UNet for selective feature learning.

What is remarkable is that all these innovations are about a decade old or less, and they continue to influence both applied domains like healthcare and emerging areas like generative AI.

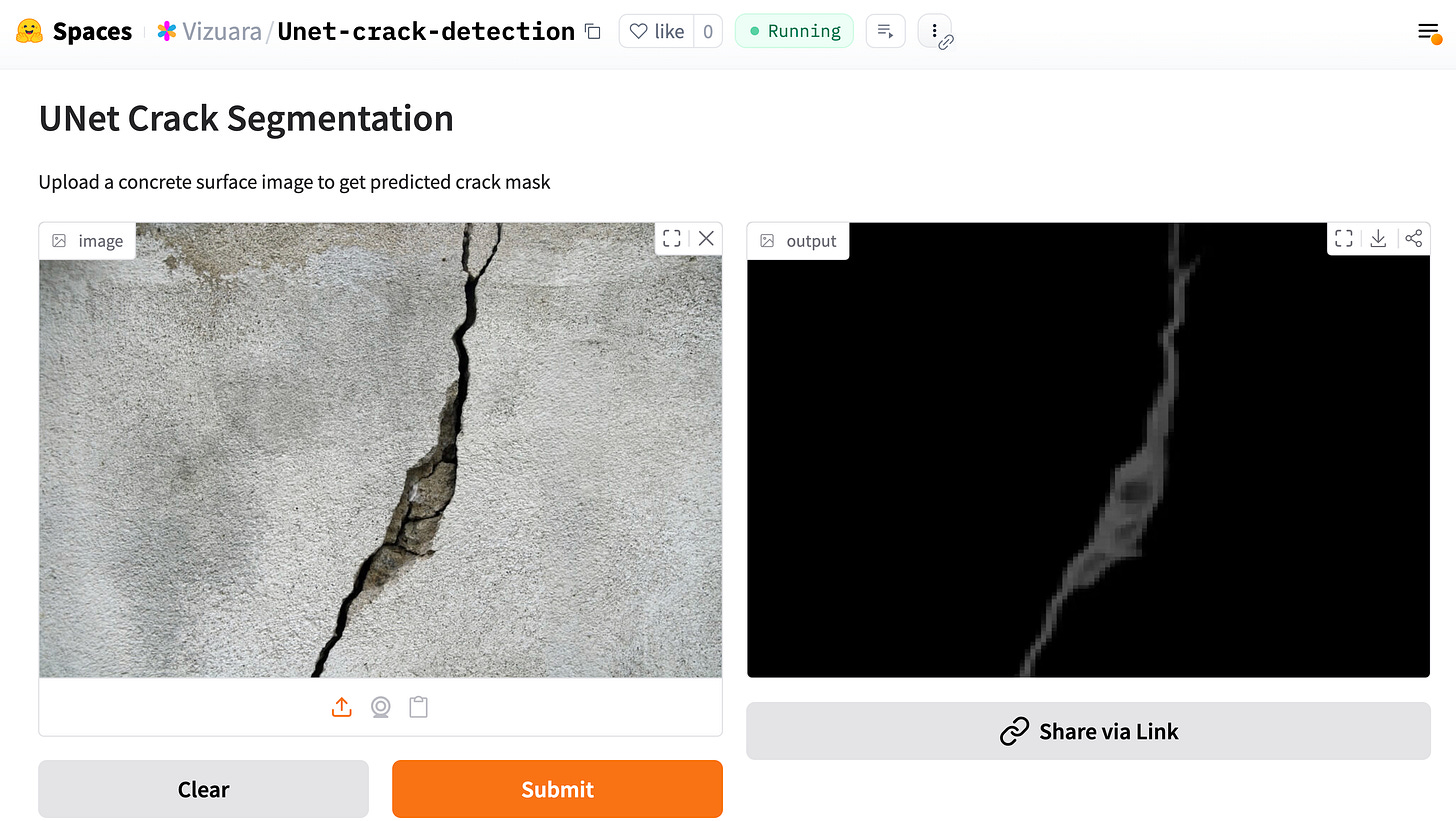

HuggingFace deployment

https://huggingface.co/spaces/Vizuara/Unet-crack-detection