Neural Architecture Search (NAS)

The story of how Google Brain used 500 GPUs to discover one of the most fascinating architectures in deep learning

There was a time when designing neural networks was an art.

Researchers would sit down, sketch out layer configurations on whiteboards, discuss whether to use 3x3 or 5x5 convolutions, whether to stack or branch, how to reduce overfitting, and how to ensure gradients flowed smoothly. And over the years, we saw the rise of architectures like VGG, Inception, ResNet, and MobileNet - each handcrafted, each pushing the boundary of performance one block at a time.

But then something unusual happened.

Google Brain asked a very strange question.

Can a neural network design a better neural network?

And that question led to the birth of NASNet, short for Neural Architecture Search Network - a model discovered, not designed.

Why design a CNN when you can discover it automatically?

Every neural network we have seen so far-whether it was AlexNet, VGG, Inception, ResNet, or EfficientNet-was the product of human intuition, experience, and a lot of trial and error.

Designing these models was not easy. Researchers had to guess what combination of layers and operations would work best. Sometimes they got it right. Often they did not. And even when they did, it took months of experimentation to arrive at something stable.

Google Brain wondered-what if this process could be automated?

What if a neural network could search through the vast space of possible CNN architectures and discover the one that performs best?

This was the premise behind Neural Architecture Search (NAS), and the model that came out of it was NASNet.

How NAS Works: RNN + Reinforcement Learning = Architecture Search

Here is how it works.

An RNN controller is used to generate architectural "blueprints"-sequences that describe how to connect different operations like convolutions, pooling, or identity mappings.

Each blueprint defines a cell, a reusable building block.

These cells are of two types:

Normal cells preserve spatial dimensions

Reduction cells reduce spatial dimensions, similar to max pooling

These cells are stacked repeatedly to form a full CNN.

That CNN is trained and evaluated on a proxy dataset like CIFAR-10.

The validation accuracy of that CNN is used as a reward signal.

The RNN updates itself using reinforcement learning so that in the next iteration, it samples a better blueprint.

This process repeats thousands of times. It is painfully expensive. The original NASNet paper reports using 500 GPUs for four days just to search the architecture space.

But in the end, it worked.

The RNN discovered a set of cells that performed exceptionally well, and those cells were then used to construct NASNet-A (Large), a model that achieved state-of-the-art results on ImageNet and other benchmarks.

The Lego analogy

There is a simple way to think about this.

Imagine giving a child a bucket of Lego pieces and asking them to build a castle. Each piece can be a convolution, a skip connection, a pooling layer. If the child builds a stable, beautiful castle, you reward them. If the structure collapses, they get no reward.

Over time, the child learns how to build better castles.

In NAS, the child is the RNN controller, the Lego pieces are the operations, the castle is the CNN, and the reward is the validation accuracy on a dataset like CIFAR-10.

This process is not magic. It is slow, computationally heavy, and quite brutal. But it works.

Why NASNet is different from other CNNs

Unlike ResNet or MobileNet, NASNet is not modular in the traditional sense.

Yes, it repeats normal and reduction cells like modules, but those cells are discovered rather than designed. There is no fire module, no depthwise separable convolution that someone thought of over coffee. These cells are the result of brute-force optimization over architecture space, guided by reward signals.

This also means that explaining NASNet is harder.

In VGG, we can say it stacks 3x3 convolutions.

In ResNet, we can say it uses skip connections to solve vanishing gradients.

In NASNet, we say, “The search algorithm found that a mix of 1x1, 3x3, and 5x5 convolutions, identity mappings, and pooling, when arranged in a specific way, gave the best result.”

We are left interpreting the architecture after it is found.

That is what makes it both fascinating and frustrating.

Implementing NASNet on the five-flowers dataset

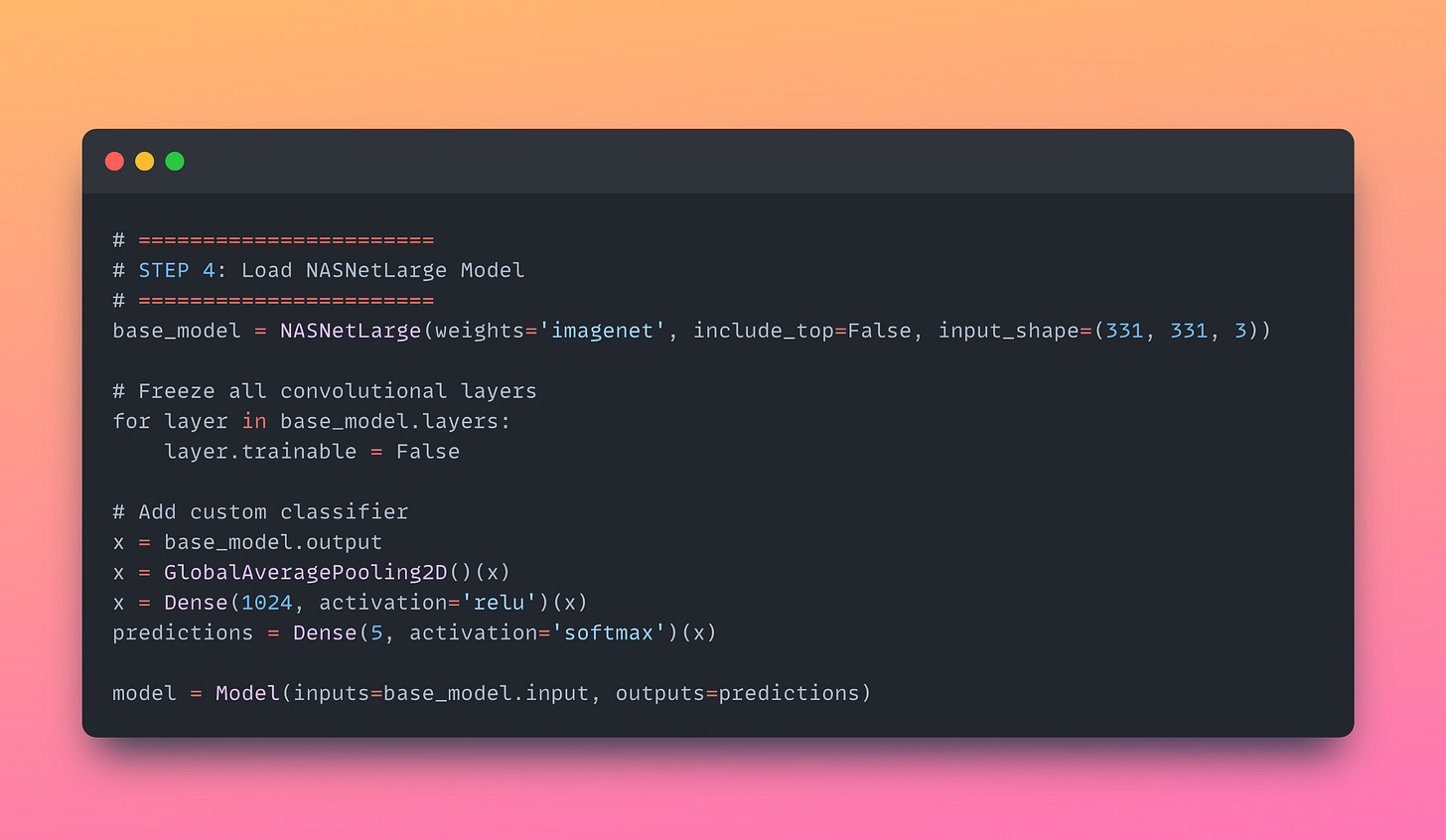

In this course, we implemented NASNet Large on our Five-Flowers dataset using Keras, since PyTorch does not provide a pre-trained NASNet model.

Some quick facts:

Input image size: 331x331

Total parameters: ~88 million

Model size: ~343 MB

Pre-trained on ImageNet

Only the final classification head was retrained

After just two epochs, we achieved:

87% training accuracy

85% validation accuracy

Training was slow-painfully slow. Three epochs took more than three hours on CPU. NASNet is heavy, and unless you have a good GPU or TPU, it is not recommended for quick experiments.

Comparison with Other Models

From the perspective of training time, MobileNet was the fastest.

From the perspective of accuracy, ResNet50 remains our favorite so far.

From the perspective of innovation, NASNet takes the cake-it tried to automate what humans had done for years.

Code [the relevant parts]

Final thoughts

Neural Architecture Search is not the end of CNN design. It is a new beginning.

NASNet showed us that design could be outsourced to machines. It proved that neural networks, when guided by reinforcement learning and a good search space, could invent architectural components that humans may never have thought of.

But it also reminded us of the limits.

Training NASNet was expensive. Explaining its architecture is hard. And unless you work at a research lab with 500 GPUs, you will not be running the NAS algorithm yourself.

Still, it was a major step forward. One that led to newer, better architectures like EfficientNet, which borrows heavily from the NAS philosophy while improving efficiency through compound scaling.

As for us, we have now studied over a dozen CNN architectures in this course, and NASNet marks the close of that chapter. Up next, we move beyond classification-into segmentation, object detection, and eventually, Vision Transformers.

If you are still here, still learning, still coding-take a moment to be proud of yourself. You have made it through 16 lectures on CNNs, from scratch, with care, with patience, and with deep curiosity.

And that is exactly the mindset that NASNet was born from.

YouTube lecture

Here is the full code file: https://colab.research.google.com/drive/1CzBWBiwyA5WFMCZvtstC6HKryZz545pz?usp=sharing

Interested in learning AI/ML live from us?

Check this Out: https://vizuara.ai/live-ai-courses