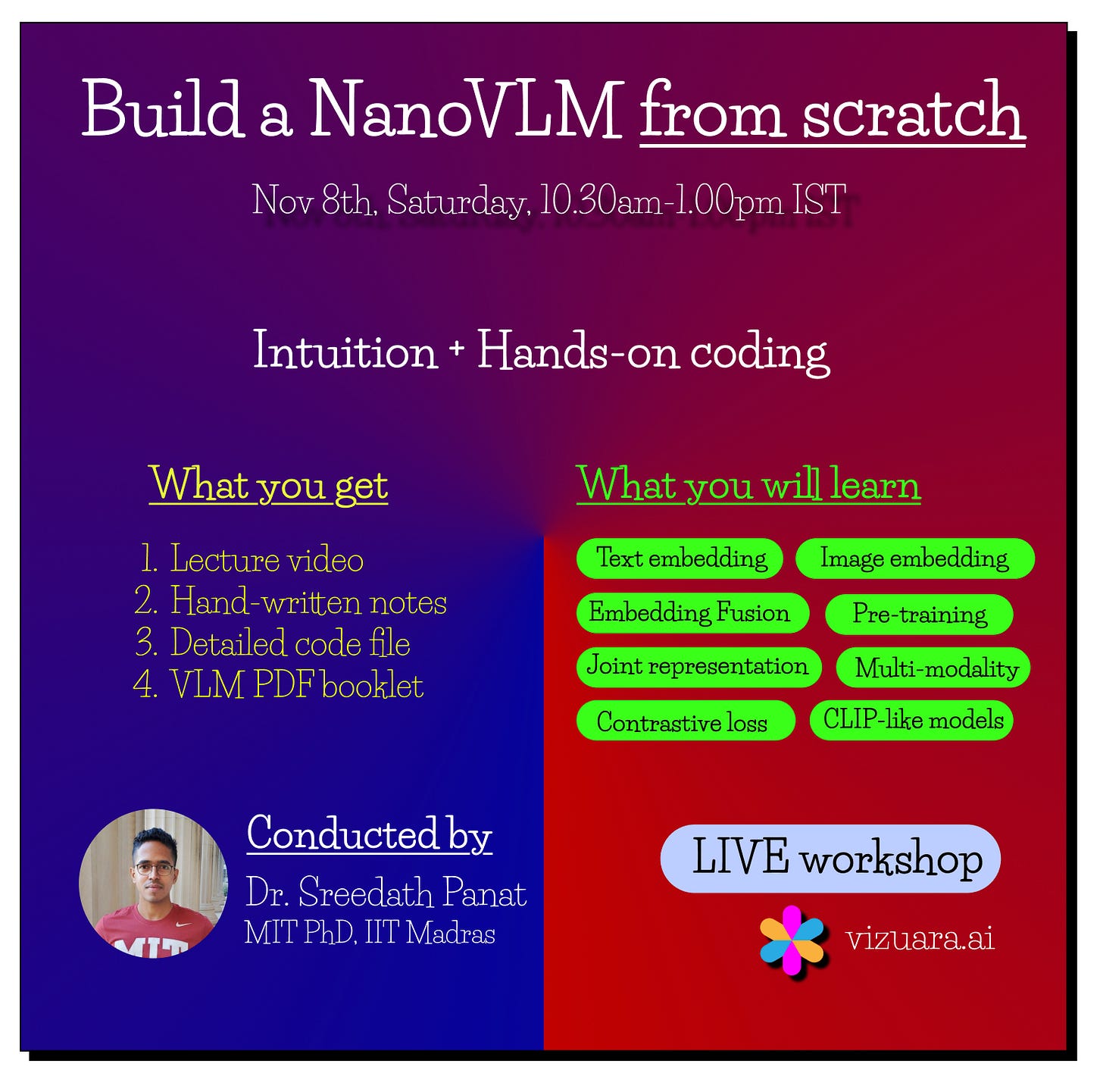

LIVE workshop: Build a NanoVLM from scratch

Happening on Saturday, November 8th

How does a VLM work?

A contrastive (CLIP-style) VLM has two separate encoders:

1) Image Encoder – usually a Vision Transformer (ViT) or a CNN that converts an image into a vector.

2) Text Encoder – a Transformer-based model (like BERT or GPT) that converts a caption, question, or sentence into a vector.

The goal is to make both these vectors live in the same embedding space.

For example, the text “a dog running” and an image of a dog running should end up close to each other in this space.

The text “a red apple” and a picture of an apple should also align.

Mismatched pairs should be far apart.
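To make "close" and "far apart" concrete, here is a minimal sketch that measures distance in the shared space with cosine similarity. The 3-dimensional vectors are hand-picked for illustration and do not come from any real encoder, and cosine similarity is an assumed choice of metric (it is the one CLIP uses).

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical embeddings, hand-picked for illustration only.
text_dog = [0.9, 0.1, 0.0]     # stands in for the text "a dog running"
image_dog = [0.8, 0.2, 0.1]    # stands in for a photo of a dog running
image_apple = [0.0, 0.2, 0.9]  # stands in for a photo of a red apple

matched = cosine_similarity(text_dog, image_dog)
mismatched = cosine_similarity(text_dog, image_apple)
print(f"matched pair: {matched:.3f}, mismatched pair: {mismatched:.3f}")
```

A well-trained pair of encoders should produce a high score for the matched pair and a low score for the mismatched one.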

The two encoders are trained together with contrastive learning, as in OpenAI's CLIP.
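A minimal NumPy sketch of a CLIP-style symmetric contrastive loss, assuming a batch where row i of the image embeddings matches row i of the text embeddings. The function names, the toy data, and the fixed temperature of 0.07 are assumptions for illustration. In CLIP the temperature is a learned parameter, and this sketch only computes the loss, without gradients.

```python
import numpy as np

def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def clip_style_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric cross-entropy over the image-text similarity matrix."""
    # L2-normalise so the dot product equals cosine similarity.
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = image_emb @ text_emb.T / temperature  # shape (N, N)
    diag = np.arange(logits.shape[0])              # correct pairs sit on the diagonal
    loss_i2t = -log_softmax(logits, axis=1)[diag, diag].mean()  # image -> text
    loss_t2i = -log_softmax(logits, axis=0)[diag, diag].mean()  # text -> image
    return float((loss_i2t + loss_t2i) / 2)

# Toy batch: 4 pairs of 8-d embeddings (random, for illustration only).
rng = np.random.default_rng(0)
text_emb = rng.normal(size=(4, 8))
aligned_images = text_emb + 0.01 * rng.normal(size=(4, 8))  # nearly matching pairs
shuffled_images = aligned_images[[1, 2, 3, 0]]              # every pair mismatched

aligned_loss = clip_style_loss(aligned_images, text_emb)
shuffled_loss = clip_style_loss(shuffled_images, text_emb)
print(f"aligned: {aligned_loss:.4f}, shuffled: {shuffled_loss:.4f}")
```

The loss is low when each image is most similar to its own caption and high when the pairs are shuffled, which is the signal that pulls matched pairs together and pushes mismatched pairs apart.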

Once text and images share a common space, the model can be used to:

-> Generate a caption for an image (with a text decoder added on top)

-> Answer questions about an image (VQA)

-> Retrieve the correct image when you type a sentence

-> Retrieve the correct caption when you upload an image

-> Act or plan in robotics and self-driving using both vision and language (VLA, VLP)
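The two retrieval tasks in this list reduce to a nearest-neighbour search in the shared space. Below is a rough sketch of text-to-image retrieval. The file names and embedding values are made up, and `retrieve` is a hypothetical helper, not part of any library.

```python
import numpy as np

def retrieve(query_emb, candidate_embs):
    """Return the index of the candidate most similar to the query, plus all scores."""
    query = query_emb / np.linalg.norm(query_emb)
    cands = candidate_embs / np.linalg.norm(candidate_embs, axis=1, keepdims=True)
    scores = cands @ query  # cosine similarity of each candidate to the query
    return int(np.argmax(scores)), scores

# Hypothetical image gallery with hand-picked embeddings.
image_names = ["dog_running.jpg", "red_apple.jpg", "city_street.jpg"]
image_embs = np.array([
    [0.8, 0.2, 0.1],
    [0.0, 0.2, 0.9],
    [0.3, 0.9, 0.1],
])

# Stands in for the text encoder's output for "a red apple".
query_text_emb = np.array([0.1, 0.1, 0.95])

best, scores = retrieve(query_text_emb, image_embs)
print("best match:", image_names[best])
```

Swapping the roles (an image embedding as the query, caption embeddings as the candidates) gives image-to-caption retrieval with the same function.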

The model is not just looking at pixels or words anymore. It is aligning the meaning across both.

What you build in a NanoVLM

-> A small text encoder

-> A CNN or ViT-based image encoder

-> A joint embedding space

-> Contrastive loss to train both encoders together

-> Visualization of how text and image alignment improves during training
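One simple way to track alignment during training is to log the gap between the average similarity of matched pairs and that of mismatched pairs. The sketch below is an assumption about how this could be done, not necessarily the workshop's method. `alignment_gap` is a hypothetical helper, and training progress is simulated by moving random image embeddings towards their text embeddings.

```python
import numpy as np

def alignment_gap(image_emb, text_emb):
    """Mean matched-pair cosine similarity minus mean mismatched-pair similarity."""
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    sim = image_emb @ text_emb.T  # (N, N); matched pairs on the diagonal
    n = sim.shape[0]
    matched = np.trace(sim) / n
    mismatched = (sim.sum() - np.trace(sim)) / (n * (n - 1))
    return float(matched - mismatched)

rng = np.random.default_rng(0)
text_emb = rng.normal(size=(16, 32))
untrained_images = rng.normal(size=(16, 32))  # unrelated to the text at the start

# Simulated training: interpolate image embeddings towards their paired text.
gaps = []
for progress in [0.0, 0.25, 0.5, 0.75, 1.0]:
    images = (1 - progress) * untrained_images + progress * text_emb
    gaps.append(alignment_gap(images, text_emb))

print([round(g, 3) for g in gaps])
```

In a real training loop the same number could be computed on a held-out batch after each epoch and plotted as a curve.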

I am conducting a live hands-on workshop on “Build a Nano Vision Language Model (VLM) from Scratch” at Vizuara.

Nov 8th, Saturday

10.30am – 1.00pm IST

Link to register: https://vizuara.ai/courses/build-a-nanovlm-from-scratch/