It Works on My Machine?! Not Anymore — Docker for ML Deployment

Deployment in ML fails when environments drift. Docker fixes this by packaging everything into containers that run identically everywhere.

Table of Contents

Introduction

What is Docker?

Why We Needed Docker

Docker vs Virtual Machines

Core Components of Docker

Docker Architecture for ML

Real-Life Analogies

Why ML Engineers Should Care

Docker Implementation

Key Takeaways

1) A Practical Introduction to Docker for Machine Learning Deployment

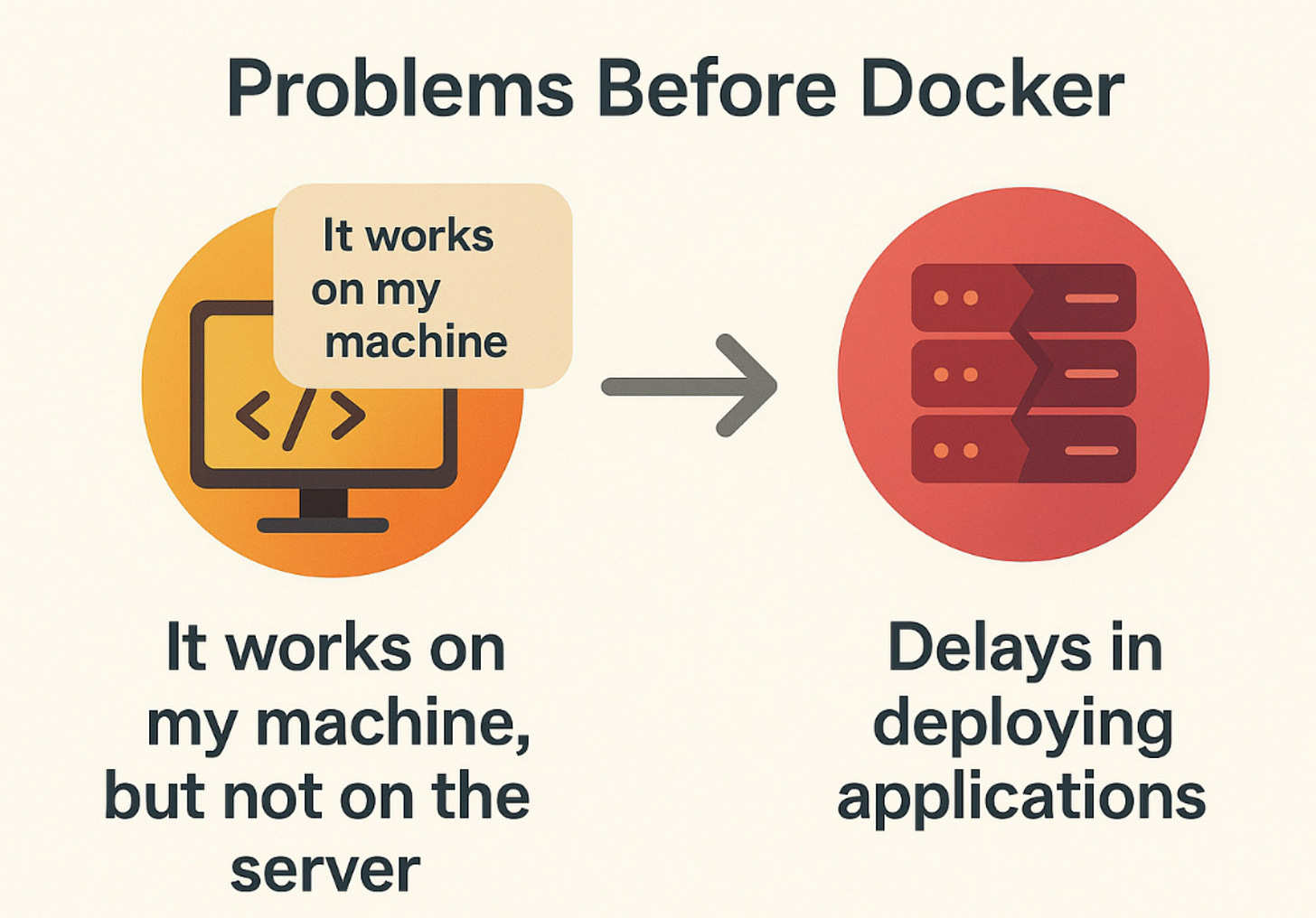

When machine learning projects move from research notebooks to production systems, chaos often follows. Dependencies clash, environments differ, and suddenly the infamous line appears:

“It works on my machine, but not on the server.”

This is where Docker changes the game. Docker brings structure, reproducibility, and portability to machine learning workflows, exactly what’s needed in modern production pipelines.

In this article, we’ll unpack Docker from first principles, explain its core components, compare it with virtual machines, and show how it addresses real-world pain points in ML deployment.

2) What is Docker?

At a low level, Docker is a process isolation framework. Instead of running your application directly on the host system, Docker creates a controlled environment where the application thinks it has its own dedicated machine. This environment includes only the binaries, libraries, and configurations that the application requires.

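What makes Docker unique is its layered image system. Instructions in a Dockerfile that change the filesystem (such as RUN, COPY, and ADD) each create a new layer, so an image stacks up as base OS → Python runtime → ML libraries → application code. These layers are cached and reused, which speeds up rebuilds and lets images that share a base also share storage. When you update your model code, only the layer that copies the code (and anything after it) is rebuilt, provided that step comes late in the Dockerfile; the lower layers (like CUDA or PyTorch) remain untouched.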
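To make the caching idea concrete, here is a toy model in Python. It is a simplified sketch, not Docker's actual cache algorithm: each step's ID is a hash of its parent ID, the instruction text, and a fingerprint of the files it reads. The function names, file names, and fingerprints are all hypothetical.

```python
import hashlib

def layer_ids(steps):
    """Return one ID per build step; each ID depends on every step before it."""
    ids = []
    parent = ""
    for instruction, inputs in steps:
        payload = f"{parent}|{instruction}|{inputs}".encode()
        digest = hashlib.sha256(payload).hexdigest()[:12]
        ids.append(digest)
        parent = digest
    return ids

def reused_layers(old_steps, new_steps):
    """Count the leading layers whose IDs match, i.e. could be served from cache."""
    count = 0
    for old_id, new_id in zip(layer_ids(old_steps), layer_ids(new_steps)):
        if old_id != new_id:
            break
        count += 1
    return count

# Each step: (instruction, fingerprint of the files that instruction reads).
build_v1 = [
    ("FROM python:3.10-slim", ""),
    ("COPY requirements.txt .", "requirements-hash-1"),
    ("RUN pip install -r requirements.txt", ""),
    ("COPY model.py .", "model-hash-1"),
]

# Only the model code changed between builds.
build_v2 = build_v1[:3] + [("COPY model.py .", "model-hash-2")]

# A dependency pin changed, so every step after it is rebuilt too.
build_v3 = [build_v1[0], ("COPY requirements.txt .", "requirements-hash-2")] + build_v1[2:]

print(reused_layers(build_v1, build_v2))  # 3
print(reused_layers(build_v1, build_v3))  # 1
```

The practical consequence is an ordering rule of thumb: put steps that rarely change (base image, dependency installs) first and the frequently edited model code last, so a code change invalidates as few layers as possible.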

For ML engineers, this translates to:

Predictable runtimes: No version drift between dev and production.

Efficient iteration: Update just the model code layer, not the entire environment.

Scalability: Containers can be replicated quickly across multiple GPUs or nodes.

Rather than thinking of Docker as a “box,” think of it as a blueprint-driven snapshot of your environment, one that can be executed reliably anywhere.

3) Why We Needed Docker

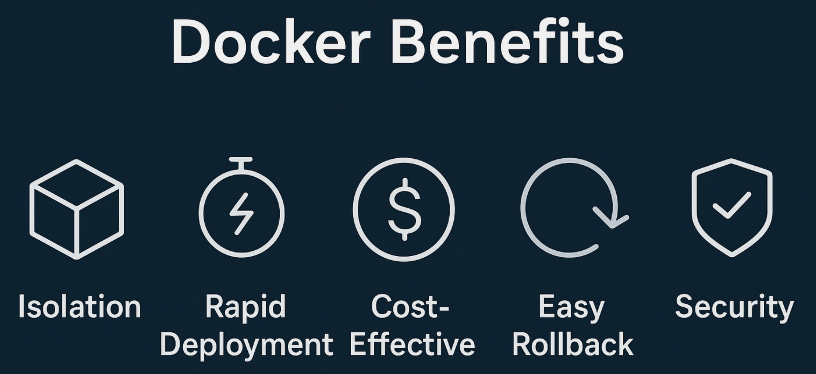

The Problems Before Docker

Code would often break when moving between environments (laptop, dev server, or cloud) due to differences in OS, libraries, or configurations.

Provisioning full servers for each app was slow, costly, and inefficient.

Debugging deployment issues consumed more time than actual feature development.

The Docker Solution

Isolation & Consistency: Each app runs inside its own container, packaged with all dependencies.

Rapid Deployment: Containers boot in seconds; there is no need to spin up an entire operating system.

Cost-Efficient: Multiple containers share the host OS kernel, saving memory & CPU.

Version Control & Rollbacks: Docker images are versioned, so reverting to a stable build is just one command away.

Security: Containers limit what processes can access, reducing attack surface.

Docker didn’t just solve a technical problem; it transformed how teams develop, test, and ship applications.

4) 🖥️ Docker vs Virtual Machines

One common question: “How is Docker different from Virtual Machines (VMs)?”

1. Operating System Usage

Docker: Shares the host OS kernel across multiple containers.

VMs: Each VM needs a full guest OS, independent of the host.

2. Resource Consumption

Docker: Lightweight, minimal memory footprint, starts in seconds.

VMs: Heavy; each instance consumes CPU, RAM, and storage for its OS.

3. Performance

Docker: Near-native speed, optimized for rapid startup and scaling.

VMs: Slower boot times, higher overhead due to full OS virtualization.

4. Portability

Docker: Easy to move across systems; images are compact and shareable.

VMs: Large, bulky VM images; harder to migrate and replicate.

5. Application Setup

Docker: Bundles app + dependencies inside one container image.

VMs: Apps rely on the specific guest OS configuration.

6. Security

Docker: Provides isolation but shares the host kernel.

VMs: Stronger isolation since each runs a full OS boundary.

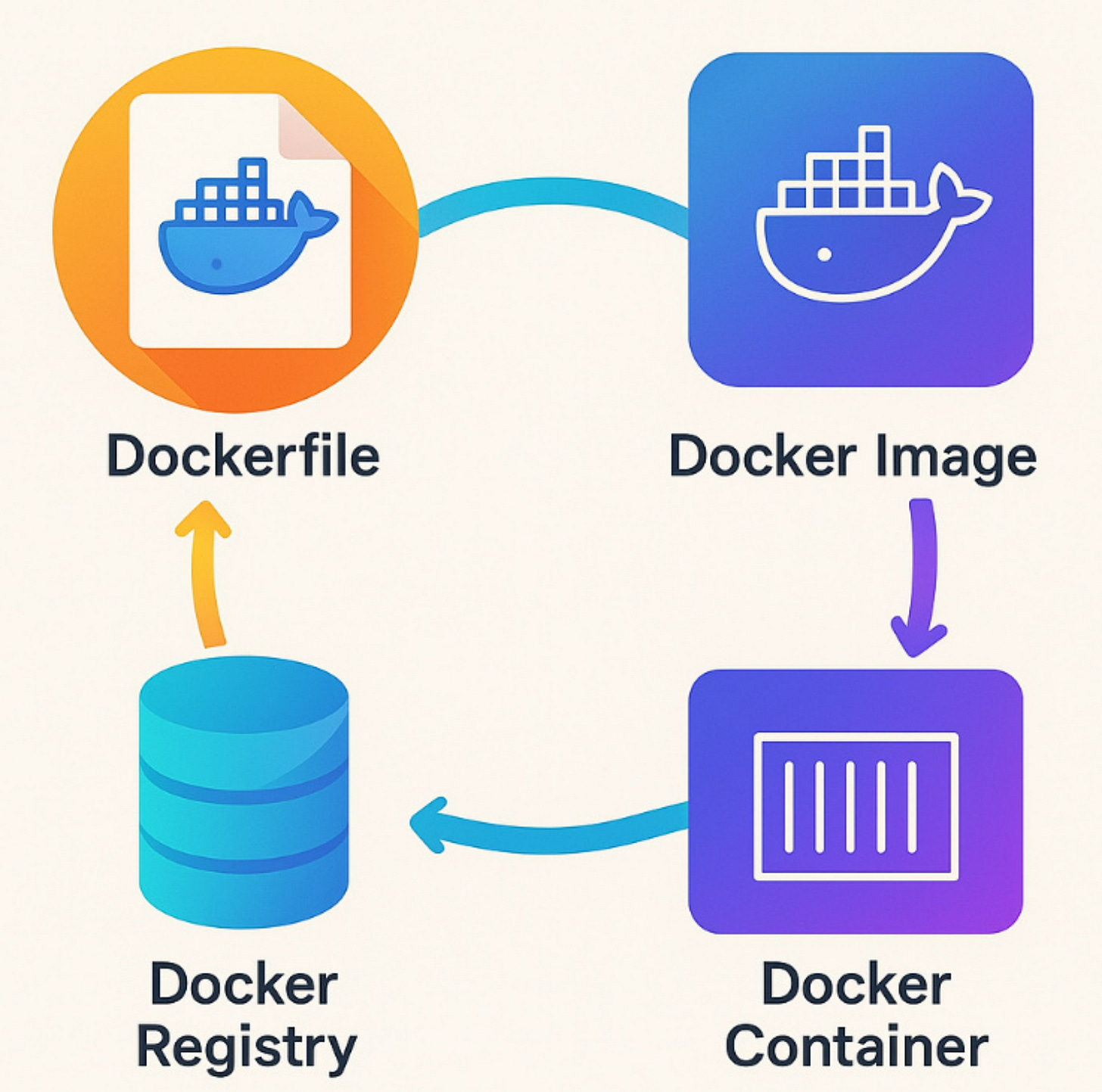

5) The Key Components of Docker

Understanding Docker means knowing its building blocks:

Docker Client (CLI): The tool you use (docker run, docker build, etc.) to interact with Docker.

Docker Daemon (dockerd): The background process that builds, runs, and manages containers.

Docker Image: A blueprint — like a recipe card — containing everything needed for an app (OS libraries, code, dependencies).

Docker Container: A running instance of an image — like cooking from the recipe card. Lightweight, isolated, and disposable.

Dockerfile: A text file with step-by-step instructions to build an image. (e.g., “install Python, copy ML model, set entrypoint”).

Docker Registry (e.g., Docker Hub): The online “library” where images are stored and shared.

Docker Compose: Orchestration tool for multi-container apps (e.g., running an ML API + database together).

Networking & Storage: Mechanisms for containers to talk to each other and persist data across restarts.

6) Docker Architecture (Simplified)

Here’s a typical Docker workflow in ML projects:

Write a Dockerfile – Define base image (e.g., Python 3.10), install dependencies, copy code.

Build an Image – Run docker build -t mymodel:v1 . to create an image.

Run a Container – Launch the image in an isolated environment using docker run.

Push to Registry – Share your image with teammates or production servers via Docker Hub or a private registry.

Deploy – Run containers on cloud services (AWS ECS, GCP Cloud Run, or Kubernetes).

This workflow makes deployment as simple as running:

docker run mymodel:v1

No more dependency problems.
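If you want to script the build, run, and push steps (for example from a CI job), one option is to assemble the docker CLI calls in Python. The sketch below only prints the commands rather than executing them. The registry name registry.example.com/ml-team is a placeholder, and workflow_commands is a hypothetical helper, not part of any Docker tooling.

```python
import shlex

def workflow_commands(image, registry_repo):
    """Return the docker CLI calls for build, local run, tag, and push."""
    remote = f"{registry_repo}/{image}"
    return [
        ["docker", "build", "-t", image, "."],  # build from the Dockerfile in the current directory
        ["docker", "run", "--rm", image],       # run once and remove the container on exit
        ["docker", "tag", image, remote],       # give the image its registry-qualified name
        ["docker", "push", remote],             # upload the image to the registry
    ]

# Placeholder registry; replace with Docker Hub or your private registry.
commands = workflow_commands("mymodel:v1", "registry.example.com/ml-team")
for cmd in commands:
    print(shlex.join(cmd))
```

To actually execute the steps, each list can be passed to subprocess.run(cmd, check=True) on a machine where Docker is installed and a Dockerfile exists in the working directory. Pushing typically requires a prior docker login against the target registry.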

7) 🍱 Real-Life Analogies

8) Why ML Engineers Should Care

For machine learning, Docker is not optional; it’s a necessity. Here’s why:

ML models rely on specific library versions (TensorFlow 2.13, CUDA 11.7, etc.). Docker freezes that environment.

Deployment becomes push-button simple: the same image runs on a laptop, a server, or the cloud.

Reproducibility: Experiments can be shared and reproduced years later.

Collaboration: Teams can run the exact same environment without conflicts.
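The version-drift problem behind these points can be illustrated with a small check that compares pinned versions against what an environment actually has. This is a sketch under assumptions: the pins and the simulated server environment are made up for illustration, and find_drift and installed_version are hypothetical helpers. Inside a container built from the same pins, a check like this would be expected to report nothing.

```python
from importlib import metadata

def find_drift(pinned, installed):
    """Return {package: (wanted, found)} for every pin that is not satisfied."""
    drift = {}
    for name, wanted in pinned.items():
        found = installed.get(name)  # None means the package is missing
        if found != wanted:
            drift[name] = (wanted, found)
    return drift

def installed_version(name):
    """Look up the installed version of a package, or None if it is absent."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None

# Hypothetical pins, as they might appear in a requirements.txt.
pinned = {"tensorflow": "2.13.0", "numpy": "1.24.3"}

# Simulated server environment that has drifted away from the pins.
server = {"tensorflow": "2.13.0", "numpy": "1.26.4"}
print(find_drift(pinned, server))  # {'numpy': ('1.24.3', '1.26.4')}

# The same check against the interpreter running this script;
# the result depends on what is installed on your machine.
live = {name: installed_version(name) for name in pinned}
print(find_drift(pinned, live))
```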

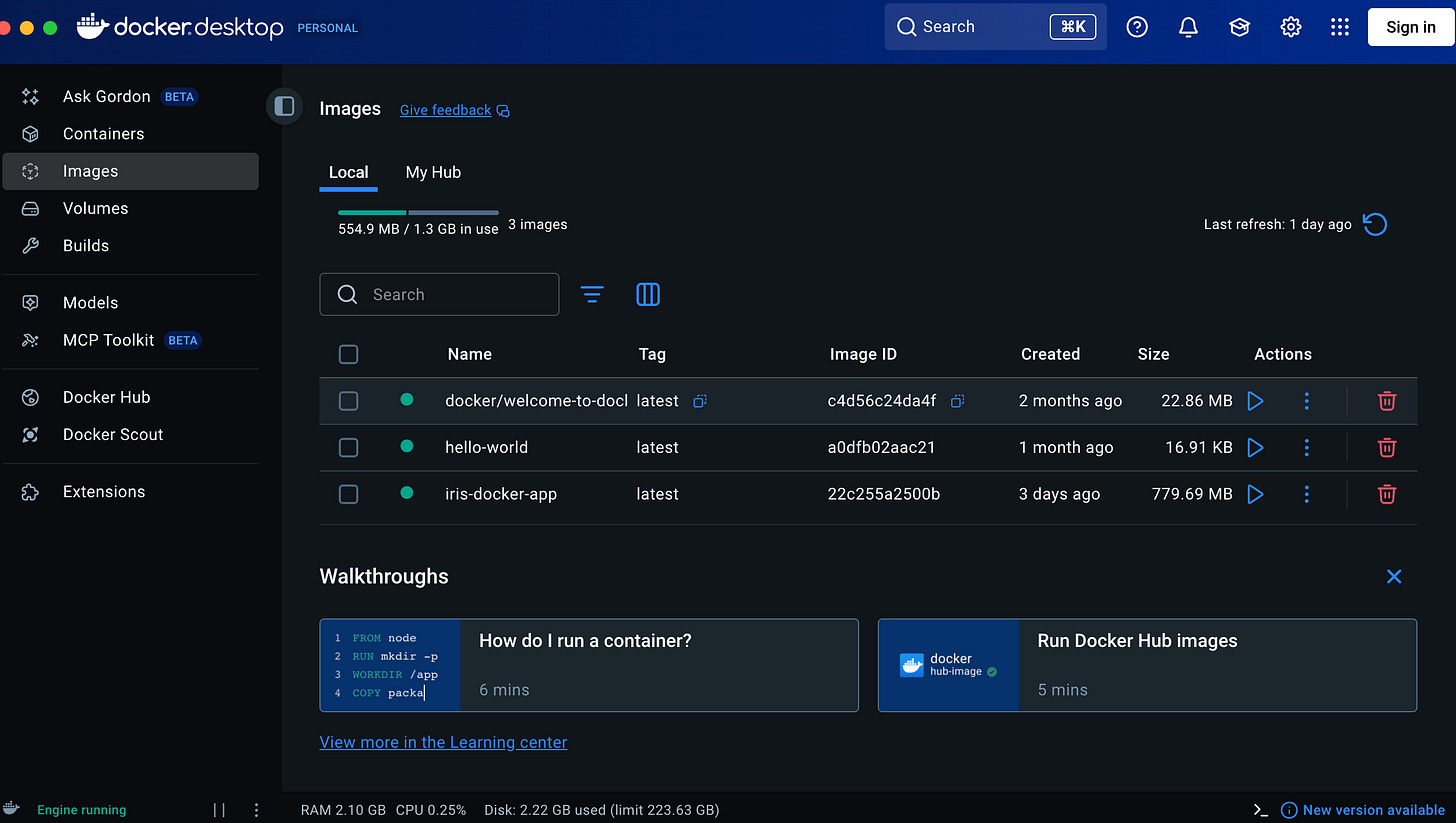

9) Docker Implementation

On Vizuara’s YouTube channel, I demonstrate how Docker can simplify and standardize machine learning workflows, using the classic Iris dataset as an example. I first show the model running locally on my machine and then containerize the same environment using Docker. Comparing the two setups makes it clear how Docker keeps behavior consistent across systems, eliminates dependency issues, and lets the exact same code run reliably anywhere. This practical illustration shows that Docker is not just a theoretical tool but an effective solution for reproducible, portable ML workflows.

The video link is attached below. Feel free to watch it for a better understanding.

10) Key Takeaways

Docker is an open-source containerization platform that ensures apps run identically everywhere.

It solves the “works on my machine” problem by packaging dependencies and environment configs.

Compared to VMs, Docker is lightweight, faster, and more portable.

The ecosystem (Dockerfile, Images, Containers, Registry, Compose) makes ML deployment modular and reproducible.

For ML engineers, Docker is the bridge from experimentation → production.

To learn more about Docker, please watch the video: