Introduction to Scikit-learn in Python

Why mastering Scikit-learn is non-negotiable in ML

There is a myth in machine learning that everything needs to start with neural networks, GPUs, and large datasets. In practice, the best practitioners start with the basics, and there is no better foundation than Scikit-learn.

This article is not a tutorial. It is a walkthrough of thinking like an ML engineer.

We take a simple dataset - the classic Iris dataset - and build a complete machine learning pipeline using Scikit-learn. Along the way, we learn to clean data, visualize it, split it, scale it, model it, evaluate it, and interpret it.

All of this with fewer than 50 lines of Python.

So what is ML in simple terms?

Machine learning, in a definition commonly attributed to Arthur Samuel, is the field of study that gives computers the ability to learn without being explicitly programmed.

ML workflow

The problem with code-first ML learning

Most learners begin their ML journey by throwing code at the problem.

They download a dataset, import a model, call .fit(), and hope for the best.

The problem? No intuition. No understanding of why models work. And no skills to debug or improve when they don’t.

Scikit-learn forces you to slow down. To understand. To think.

This article is for those who want to build machine learning systems, not just submit notebooks on Kaggle.

So, what is Scikit-learn?

Scikit-learn is Python’s most widely used library for traditional (non-deep-learning) ML models.

It is built on NumPy, SciPy, and Matplotlib.

It provides a consistent API for:

Models (fit, predict)

Preprocessing

Evaluation

Pipelines
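The consistency of that API can be sketched as follows. This is a minimal illustration, not the article's own code: the dictionary `models`, the variable `train_accuracy`, and the choice of `random_state=0` are assumptions made for the example, and the score is measured on the training data only to keep the sketch short.

```python
from sklearn.datasets import load_iris
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# Every classifier exposes the same fit/predict/score methods,
# so swapping one model for another changes a single line.
models = {
    "knn": KNeighborsClassifier(n_neighbors=3),
    "tree": DecisionTreeClassifier(max_depth=3, random_state=0),
}

train_accuracy = {}
for name, model in models.items():
    # A pipeline chains preprocessing and the model behind one interface.
    pipe = make_pipeline(StandardScaler(), model)
    pipe.fit(X, y)
    # Accuracy on the training data, used here only as a smoke test.
    train_accuracy[name] = pipe.score(X, y)

print(train_accuracy)
```

Because the loop body never mentions a specific model, adding a third classifier would only require one more dictionary entry.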

First, a note on why visuals matter

You cannot convince a client, professor, or investor with raw metrics. You win them with visuals.

Whether it is pair plots, confusion matrices, or heatmaps, good visualizations help you build intuition about the data and explain results without jargon.

If your model is a black box, visuals are the flashlight.

What is the Iris dataset?

The Iris dataset contains 150 records of three types of iris flowers - Setosa, Versicolor, and Virginica. For each flower, you are given four measurements: sepal length, sepal width, petal length, and petal width.

Your task: Predict the flower type given these four features.

It is the “hello world” of classification problems - and a perfect test bed for mastering Scikit-learn.
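The description above can be confirmed in a few lines. This is a small sketch using only `load_iris`; the variable name `class_counts` is chosen for illustration.

```python
from collections import Counter

from sklearn.datasets import load_iris

iris = load_iris()

# 150 rows (flowers) and 4 columns (measurements).
print(iris.data.shape)

# Names of the four measurements and of the three species.
print(iris.feature_names)
print(list(iris.target_names))

# Number of records per class label (0, 1, 2).
class_counts = Counter(iris.target.tolist())
print(class_counts)
```

The classes are perfectly balanced, with 50 flowers each, which matters later when interpreting accuracy.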

Step-by-Step: Building the ML pipeline

We build an end-to-end ML pipeline from scratch using Scikit-learn. Every step mirrors what you would do in a real-world ML project.

1. Load the Data

    from sklearn.datasets import load_iris

    iris = load_iris()

Simple. Clean. Transparent.

2. Convert to a Pandas DataFrame

    import pandas as pd

    X = pd.DataFrame(iris.data, columns=iris.feature_names)
    y = iris.target

X contains your features. y contains your labels. Now, you can start exploring.

Visual Exploration - The Foundation of ML

Before you model, you need to understand.

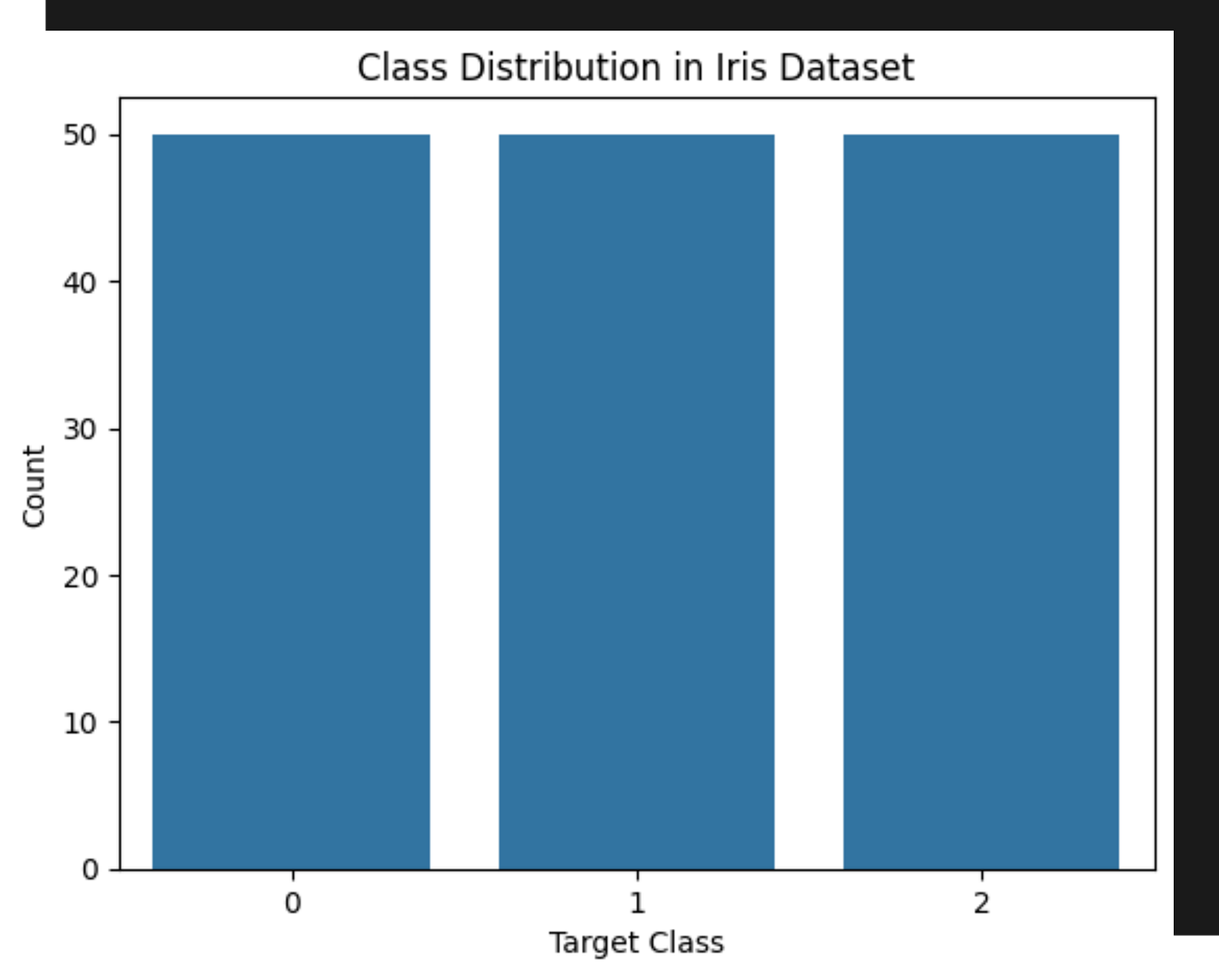

You start with class distribution:

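    import seaborn as sns

    sns.countplot(x=iris.target)

Then move to pair plots to see relationships between features. The pair plot and the heatmap need a single DataFrame that holds both the features and the target:

    # Combine features and labels so seaborn can color points by class
    df = X.copy()
    df["target"] = y

    sns.pairplot(df, hue="target")

You will quickly realize: Petal length and petal width cleanly separate class 0 (Setosa) from the others, while Versicolor and Virginica overlap slightly.

Then you use a correlation heatmap to quantify this:

    sns.heatmap(df.corr(), annot=True)

You find that petal length has a 0.95 correlation with the target. This is not a feature - it is a signal.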

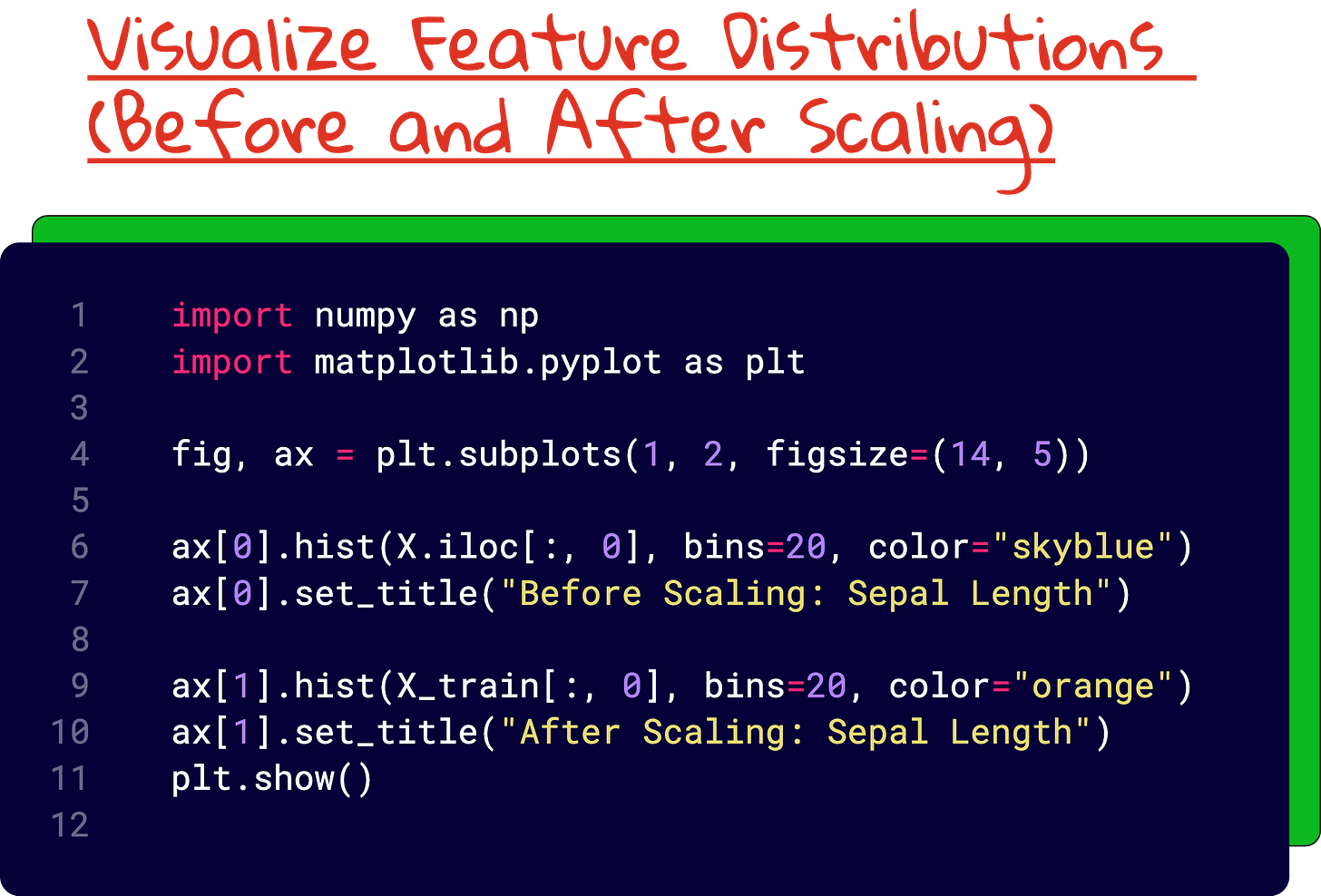
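The numbers behind the heatmap can also be read without plotting. The sketch below is one way to do it, assuming the same `df` layout as above (four feature columns plus a `target` column); `corr_with_target` is a name introduced here. Note that the target is an integer class code, so the Pearson correlation treats it as if the classes were ordered.

```python
import pandas as pd
from sklearn.datasets import load_iris

iris = load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)
df["target"] = iris.target

# Pearson correlation of every feature with the integer-coded target,
# sorted from strongest positive to strongest negative.
corr_with_target = (
    df.corr()["target"].drop("target").sort_values(ascending=False)
)
print(corr_with_target.round(2))
```

The two petal measurements come out on top, while sepal width is negatively correlated with the class code.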

Preprocessing: Clean Before You Model

No missing values here. But real-world datasets are not so kind.
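The "no missing values" claim is easy to verify rather than assume. A minimal check, with `missing_per_column` and `total_missing` as illustrative names:

```python
import pandas as pd
from sklearn.datasets import load_iris

iris = load_iris()
X = pd.DataFrame(iris.data, columns=iris.feature_names)

# Count NaN entries in each feature column.
missing_per_column = X.isna().sum()
print(missing_per_column)

total_missing = int(missing_per_column.sum())
print("Total missing values:", total_missing)
```

On a real-world dataset this count is rarely zero, and an imputation step (for example `sklearn.impute.SimpleImputer`, which is one option among several) would come before splitting and scaling.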

Still, you need to split the data:

    from sklearn.model_selection import train_test_split

    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42
    )

And scale it:

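    from sklearn.preprocessing import StandardScaler

    scaler = StandardScaler()
    # Learn the mean and standard deviation from the training data only
    X_train_scaled = scaler.fit_transform(X_train)
    # Reuse the training statistics on the test data
    X_test_scaled = scaler.transform(X_test)

Without scaling, features with larger numeric ranges dominate distance-based models such as KNN. With scaling, you give each feature a fair shot.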
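To see what the split and the scaler actually did, a short sanity check like the one below can help. It repeats the split and scaler settings used above. The `stratify=y` variation at the end, and names such as `train_means` and `stratified_counts`, are additions for illustration and are not part of the walkthrough.

```python
from collections import Counter

import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Same settings as above: 80/20 split with a fixed seed.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)
print(X_train.shape, X_test.shape)

# Fit the scaler on the training data only, then reuse it on the test data.
scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)
X_test_scaled = scaler.transform(X_test)

# After scaling, each training feature has mean 0 and standard deviation 1.
train_means = X_train_scaled.mean(axis=0)
train_stds = X_train_scaled.std(axis=0)
print(np.round(train_means, 6), np.round(train_stds, 6))

# Variation (an assumption, not used in the walkthrough): stratify=y keeps
# the class proportions identical in the training and test sets.
_, _, _, y_test_strat = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)
stratified_counts = Counter(y_test_strat.tolist())
print(stratified_counts)
```

The test set is transformed with the training statistics, so its means are close to zero but not exactly zero. That is expected: refitting the scaler on the test data would leak information about it into preprocessing.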

Modeling: KNN and Decision Trees

Now the fun begins.

K-Nearest Neighbors (KNN)

Think of KNN like this:

You moved into a new neighborhood and you don’t know which cricket team you should join. So you look around at the 5 people closest to you.

If 3 of them play for Team A, and 2 for Team B, you join Team A.

Why? Because most of your neighbors are in Team A.

That’s exactly what KNN does.
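The neighborhood analogy translates almost line for line into code. The sketch below is a from-scratch illustration in plain Python, not how Scikit-learn implements KNN internally; the function `knn_predict` and the `houses` and `teams` data are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=5):
    """Classify `query` by majority vote among its k nearest points."""
    # Euclidean distance from the query to every known point.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest neighbors and count their labels.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical neighborhood: house coordinates and each resident's team.
houses = [(1, 1), (1, 2), (2, 1), (2, 3), (3, 2), (8, 8), (9, 9)]
teams = ["A", "A", "A", "B", "B", "B", "B"]

# You live at (2, 2): three of your five nearest neighbors play for Team A.
my_team = knn_predict(houses, teams, query=(2, 2), k=5)
print(my_team)  # A
```

Team B has more players overall, but only the five closest houses get a vote, which is why the answer is Team A.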

    from sklearn.neighbors import KNeighborsClassifier

    knn = KNeighborsClassifier(n_neighbors=3)
    knn.fit(X_train_scaled, y_train)

KNN is intuitive: a new point is classified by a majority vote among its nearest neighbors. Here you set k = 3, rather than the 5 neighbors of the analogy, and let the algorithm vote.

Decision Tree

Simple Example: Should You Go Outside?

You’re trying to decide whether to go outside or stay home.

You ask yourself a few yes/no questions in order:

Is it raining?

Yes → ❌ Stay home

No → move to the next question

Do you have work to do?

Yes → ❌ Stay home

No → ✅ Go outside

This is exactly what a Decision Tree does:

It splits the decision into smaller questions

Each question is a node

Each answer leads to another branch

At the end, you get a final decision
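Written as code, the questions above become nested conditions. This is a hand-written illustration of the structure only; the function name `should_go_outside` is hypothetical, and a real decision tree learns its questions from data instead of having them typed in.

```python
def should_go_outside(is_raining: bool, has_work: bool) -> str:
    """Follow the yes/no questions from the root node to a final decision."""
    # Root node: is it raining?
    if is_raining:
        return "stay home"
    # Second node, reached only on the "no" branch: do you have work to do?
    if has_work:
        return "stay home"
    # Leaf: no rain and no work.
    return "go outside"


print(should_go_outside(is_raining=False, has_work=False))  # go outside
print(should_go_outside(is_raining=True, has_work=False))   # stay home
```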

    from sklearn.tree import DecisionTreeClassifier

    tree = DecisionTreeClassifier(max_depth=3)
    tree.fit(X_train_scaled, y_train)

Decision trees split the dataset recursively based on feature thresholds. Simple. Powerful. Transparent.
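The transparency claim can be made concrete by printing the thresholds a fitted tree has learned. The sketch below uses `sklearn.tree.export_text` for this. Two choices here are assumptions for the example rather than part of the walkthrough: the tree is trained on unscaled features so the thresholds read in centimeters (trees do not depend on feature scale), and `random_state=42` is set on the tree for reproducibility.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42
)

# Unscaled features keep the printed thresholds in centimeters.
tree = DecisionTreeClassifier(max_depth=3, random_state=42)
tree.fit(X_train, y_train)

# Text rendering of the learned questions, one line per node.
rules = export_text(tree, feature_names=iris.feature_names)
print(rules)
print("Depth:", tree.get_depth())
```

Each indented line is one "question" of the form feature <= threshold, mirroring the yes/no structure of the example above.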

Evaluation: Accuracy is Not Enough

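A model that predicts everything as class 0 still gets about 33 percent accuracy on a balanced three-class dataset like Iris, and far more on an imbalanced one, without having learned anything.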
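That baseline is simple to reproduce. The sketch below uses `DummyClassifier` with a constant prediction and scores it on the full dataset; the variable names are illustrative.

```python
from sklearn.datasets import load_iris
from sklearn.dummy import DummyClassifier
from sklearn.metrics import accuracy_score, recall_score

X, y = load_iris(return_X_y=True)

# A "model" that ignores the features and always predicts class 0.
baseline = DummyClassifier(strategy="constant", constant=0)
baseline.fit(X, y)
y_pred = baseline.predict(X)

# Accuracy looks like a third of the work is done...
baseline_accuracy = accuracy_score(y, y_pred)
print(round(baseline_accuracy, 3))

# ...but per-class recall shows two classes are never found.
per_class_recall = recall_score(y, y_pred, average=None)
print(per_class_recall)
```

Accuracy alone hides the fact that recall is 1.0 for class 0 and 0.0 for the other two classes, which is exactly what per-class metrics expose.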

You need more.

    from sklearn.metrics import confusion_matrix, classification_report

    # Predict once and reuse the result for both reports
    y_pred = knn.predict(X_test_scaled)
    print(confusion_matrix(y_test, y_pred))
    print(classification_report(y_test, y_pred))

For KNN with k = 3 and Decision Tree with max_depth = 3, you get 100 percent accuracy on the 30-flower test set.

Change k to 1 or reduce the tree depth to 1, and performance drops.

This is modeling as experimentation - not trial and error.
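One way to run that experiment systematically is to loop over the settings and record the test accuracy for each. This is a sketch under the same split and scaling as above; the candidate values for k and depth, the `random_state=42` on the tree, and the names `knn_scores` and `tree_scores` are choices made for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)
scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)
X_test_scaled = scaler.transform(X_test)

# Test accuracy of KNN for several neighborhood sizes.
knn_scores = {}
for k in (1, 3, 5, 7):
    model = KNeighborsClassifier(n_neighbors=k).fit(X_train_scaled, y_train)
    knn_scores[k] = model.score(X_test_scaled, y_test)

# Test accuracy of the decision tree for several depth limits.
tree_scores = {}
for depth in (1, 2, 3):
    model = DecisionTreeClassifier(max_depth=depth, random_state=42)
    model.fit(X_train_scaled, y_train)
    tree_scores[depth] = model.score(X_test_scaled, y_test)

print("KNN accuracy by k:", knn_scores)
print("Tree accuracy by depth:", tree_scores)
```

A depth-1 tree asks a single question, so it can only ever output two of the three classes, which puts a hard ceiling on its accuracy. With a test set of only 30 flowers, small differences between settings should be read with caution.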

The takeaway

Scikit-learn is not flashy.

It will not run GPT-4.

But it will teach you how to build ML systems end-to-end.

And that is where most people fail.

If you want to go beyond superficial ML knowledge, learn Scikit-learn. Use the Iris dataset. Build visual intuition. Write cleaner code. And most importantly, understand what your model is actually doing.

Do not just fit. Think.

Interested in AI/ML foundations?

Check this: https://vizuara.ai/self-paced-courses

Colab code

Link: https://colab.research.google.com/drive/1jYZP_x3s_uUtIAt8jEhTg_wvrVHxsfm5?usp=sharing