How CRED Built a Cred-ible ML System

In production ML, your communication pipeline is as critical as your data pipeline

Table of Contents

Introduction

Hypothetical Case Study: Zomato’s Personalized Dish Recommender

Experimental Update Meetings

SME & Prototype Reviews

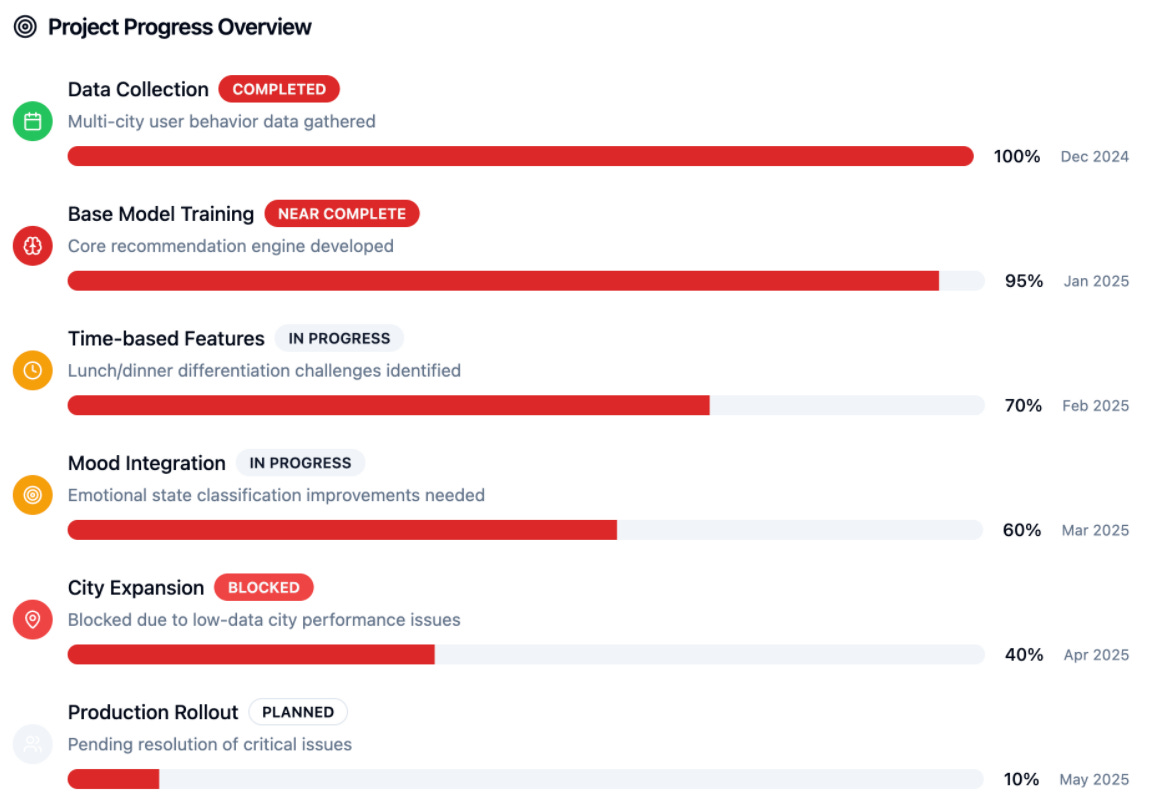

Development Progress Reviews

MVP Review

UAT & Feedback Loop

Preproduction Review

Hypothetical Case Study: CRED’s Fraud Detection Engine

Set Boundaries Early

Balance Accuracy with Maintainability

Development Traps to Avoid

Resume-, Chaos-, Prayer-Driven Development

Feature/Test-Driven Alternatives

Summary

1) Introduction

Success in machine learning is rarely defined by the sophistication of the algorithm alone. More often, projects fail because of miscommunication between teams, loosely defined objectives, or the absence of structured feedback loops. These issues can derail even technically sound models when deployed in real-world systems.

This article presents two hypothetical case studies, one involving Zomato and the other CRED. Both cases shed light on the subtle yet damaging operational traps that machine learning teams can fall into. The goal is to emphasize the importance of cross-functional collaboration, iterative development, and responsible ML practices throughout the lifecycle.

2) Hypothetical Case Study: Zomato’s Personalized Dish Recommender

Zomato aimed to personalize dish recommendations based on time, location, and user mood. The core challenge wasn’t building the model; it was aligning multiple teams and keeping feedback loops tight. Communication-first practices made the biggest difference in delivery.

2.1) Experimental Update Meetings

"Is our dish recommender even working?"

In the early stages of the project, meetings remained high-level and exploratory. The team focused on identifying major pain points, such as customers confusing lunch and dinner menus and the recommender underperforming in tier-2 cities.

This approach helped surface edge cases early on, allowing the team to adjust direction before investing heavily. It ensured that real-world issues were addressed before deeper execution began.

✅ Lesson learned

Surface edge cases early.

Focus first on usability, not model depth.

2.2) SME & Prototype Reviews

"Would a food lover find this useful?"

The product and marketing teams got involved to challenge how dishes were categorized and whether they truly resonated with users. They discussed grouping dishes by cuisine type and clearly distinguishing between snacks and full meals to reduce confusion and improve user experience.

Although a CNN-based classification model was explored as a potential solution, the team ultimately decided against it. The priority was to keep the minimum viable product (MVP) lightweight and fast, avoiding complexity in the early stage.

✅ Lesson learned

Align feasibility with business needs.

Prioritize fast feedback over fancy models.

2.3) Development Progress Reviews

"Is this tasty enough to ship?"

Progress was shared with before-and-after user flows, not just metrics. For instance, near-duplicate paneer dishes were merged using taste embeddings, which made the list of suggestions easier to browse.
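One way to picture the merging step is a similarity check over dish embeddings. The sketch below is illustrative only: the embedding vectors, the 0.95 threshold, and the function names are assumptions, not details from the case study.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def group_similar(embeddings, threshold=0.95):
    """Greedy grouping: a dish joins the first group whose first member
    is at least `threshold` similar; otherwise it starts a new group."""
    groups = []
    for name, vector in embeddings.items():
        for group in groups:
            representative = embeddings[group[0]]
            if cosine_similarity(vector, representative) >= threshold:
                group.append(name)
                break
        else:
            groups.append([name])
    return groups

# Made-up 3-dimensional "taste" vectors, purely for illustration.
dish_embeddings = {
    "Paneer Butter Masala": [0.90, 0.10, 0.30],
    "Paneer Makhani": [0.88, 0.12, 0.31],
    "Palak Paneer": [0.20, 0.90, 0.10],
}

merged_groups = group_similar(dish_embeddings)
for group in merged_groups:
    print(group)
```

Showing stakeholders the resulting groups, rather than a similarity score, is the kind of before-and-after view this review stage calls for.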

✅ Lesson learned

Show the impact on user experience.

Go beyond metrics; highlight workflow wins.

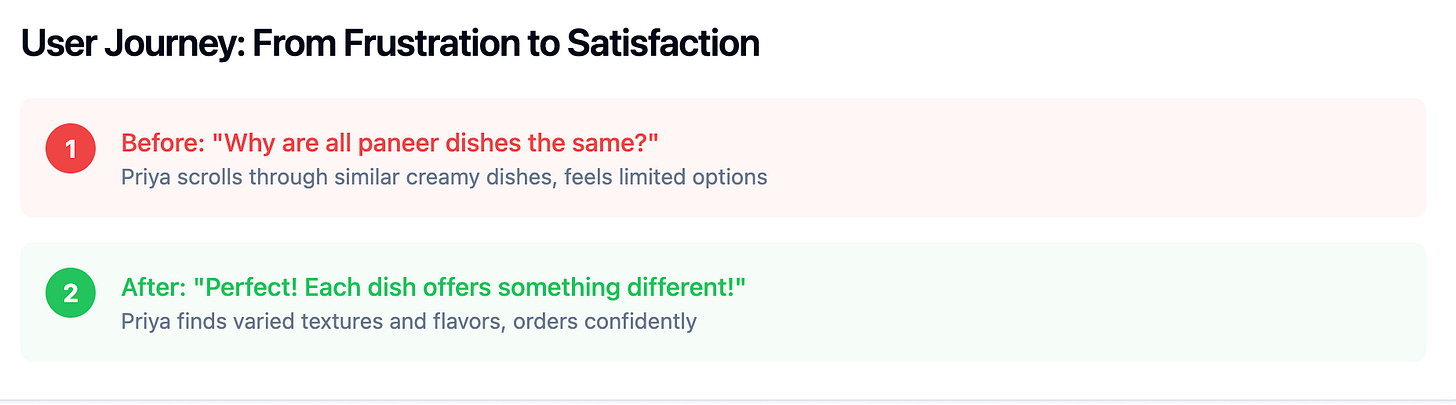

2.4) MVP Review

"Does this pass the hunger test?"

Zomato brought non-technical teams into the user acceptance testing (UAT) process to ensure a well-rounded perspective. Rather than addressing isolated complaints, they focused on identifying consistent feedback patterns that signaled deeper issues.

For example, repeated confusion over similar dish names pointed to a broader usability problem. The team used these insights to fine-tune the product, making it more intuitive and user-friendly.

✅ Lesson learned

Normalize feedback from diverse users.

Trends matter more than isolated outliers.

2.5) UAT & Feedback Loop

"Too many biryani suggestions?"

Users were more interested in practical filters, such as spice level and preparation time, than in intelligent dish predictions alone. This insight helped the team prioritize usability over complexity.

To improve the experience, A/B testing was used to refine dish rankings. Factors like user history and time of day were leveraged to personalize results in a way that felt natural and helpful.
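A ranking A/B test usually ends with a question like "is the difference in click-through rate real or noise?" The sketch below shows one common way to answer it, a two-proportion z-test, using only the standard library. The click and view counts are made up, and nothing here is claimed to be the method the team actually used.

```python
import math

def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Return (z, two-sided p-value) for the difference in click-through
    rate between ranking A (control) and ranking B (variant)."""
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b
    # Pooled rate under the null hypothesis that both rankings perform equally.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF expressed through the error function.
    normal_cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - normal_cdf)
    return z, p_value

# Hypothetical counts for illustration only.
z_score, p_value = two_proportion_z_test(
    clicks_a=480, views_a=10_000,
    clicks_b=560, views_b=10_000,
)
print(f"z = {z_score:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the new ranking changed behavior. Whether the change is large enough to matter is still a product decision, which is why these results belong in the feedback loop rather than in a notebook.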

✅ Lesson learned

Simple UX improvements often outperform deeper model tweaks.

Listening to user needs boosts adoption.

2.6) Preproduction Review

"Did we deliver what we promised or oversell?"

Before deployment, Zomato reviewed feature consistency across mobile, web, and widgets. Small gaps were found in how dishes ranked on different platforms. Fixes were made to align with initial goals.

✅ Lesson learned

Ship only when behavior matches the original vision.

Cross-platform consistency builds trust.

3) Hypothetical Case Study: CRED’s Fraud Detection Engine

CRED set out to detect high-value fraud in card transactions. With strict deadlines and real-world impact, they started small and moved fast. The approach balanced innovation with stability.

3.1) Set Boundaries Early

"Don't boil the ocean before one cup."

The team narrowed their focus to late-night, high-value transactions originating from unfamiliar devices. This targeted approach allowed them to address the riskiest scenarios first with greater precision.

To keep the system lightweight and easy to evaluate, they used just three features: timestamp, device fingerprint, and user history. This balance of simplicity and relevance made early iterations more effective.
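The narrow scope described above can be written down as an explicit, reviewable filter. The following is a minimal sketch: the `Transaction` fields, the late-night hours, and the amount threshold are all hypothetical values chosen for illustration, and the `amount` field is an added assumption needed to express "high-value".

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical scope boundaries; real values would come from the fraud team.
LATE_NIGHT_HOURS = range(0, 5)      # midnight up to 04:59
HIGH_VALUE_THRESHOLD = 50_000.0     # assumed currency units

@dataclass
class Transaction:
    timestamp: datetime
    amount: float
    device_fingerprint: str
    known_devices: frozenset  # devices seen in this user's history

def in_scope(txn):
    """True only for late-night, high-value transactions from unfamiliar devices."""
    is_late_night = txn.timestamp.hour in LATE_NIGHT_HOURS
    is_high_value = txn.amount >= HIGH_VALUE_THRESHOLD
    is_unfamiliar_device = txn.device_fingerprint not in txn.known_devices
    return is_late_night and is_high_value and is_unfamiliar_device

suspicious = Transaction(
    timestamp=datetime(2024, 1, 1, 2, 30),
    amount=75_000.0,
    device_fingerprint="device-xyz",
    known_devices=frozenset({"device-abc"}),
)
print(in_scope(suspicious))
```

Keeping the boundaries as named constants makes the scope easy to discuss in a review and easy to widen later, one condition at a time.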

✅ Lesson learned

Smaller scopes lead to faster and safer iteration.

Less is more when risk is high.

3.2) Balance Accuracy with Maintainability

"Will this still run clean next quarter?"

Initially, the team experimented with LSTMs to handle sequential patterns in the data. However, debugging challenges and long training times made iteration difficult and slowed progress.

To simplify development and gain clearer insights, they moved to random forests. Though this came with a slight drop in performance, it offered better transparency and faster tuning.

✅ Lesson learned

Prioritize models that scale well in real-world ops.

Clarity and stability often beat complexity.

4) Development Traps to Avoid

Even strong teams fail when they fall into undisciplined ML development patterns.

4.1) Resume-Driven Development (RDD)

An intern built a GAN to simulate fraud data.

It looked flashy, but it was:

Uninterpretable

Impossible to debug

Without a path to deployment

✅ Lesson: Complexity ≠ Value. Solve the real problem, not your resume's.

4.2) Chaos-Driven Development (CDD)

A developer deployed untested fraud detection logic directly to production. Without proper safeguards, this led to widespread issues.

Legitimate users were mistakenly blocked, payments failed, and the platform saw a noticeable drop in user trust and retention.

✅ Lesson: Always prototype offline. Production ML needs real QA discipline.
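One way to enforce "prototype offline" is a promotion gate that replays labeled historical transactions through the candidate logic before anything reaches production. The sketch below assumes boolean labels and predictions; the function names and the thresholds are illustrative assumptions, not CRED's actual criteria.

```python
def offline_report(labels, predictions):
    """Compare fraud flags against known outcomes on historical data.

    labels[i] is True if transaction i was really fraud;
    predictions[i] is True if the candidate logic flagged it.
    """
    pairs = list(zip(labels, predictions))
    true_pos = sum(1 for y, p in pairs if y and p)
    false_pos = sum(1 for y, p in pairs if not y and p)
    true_neg = sum(1 for y, p in pairs if not y and not p)
    false_neg = sum(1 for y, p in pairs if y and not p)
    legit_total = false_pos + true_neg
    fraud_total = true_pos + false_neg
    return {
        # Share of legitimate users who would have been blocked.
        "false_positive_rate": false_pos / legit_total if legit_total else 0.0,
        # Share of real fraud that would have been caught.
        "recall": true_pos / fraud_total if fraud_total else 0.0,
    }

def safe_to_promote(report, max_fpr=0.01, min_recall=0.6):
    """Hypothetical gate: block the release if too many legitimate users are hit."""
    return report["false_positive_rate"] <= max_fpr and report["recall"] >= min_recall

# Tiny made-up replay: 8 legitimate transactions, 2 fraudulent ones.
historical_labels = [False] * 8 + [True, True]
candidate_flags = [False] * 7 + [True] + [True, False]

report = offline_report(historical_labels, candidate_flags)
print(report, safe_to_promote(report))
```

In this toy replay one legitimate user in eight would have been blocked, so the gate refuses the release. That is the failure CDD only discovers after users are already affected.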

4.3) Prayer-Driven Development (PDD)

An engineer copied an autoencoder from a blog.

It flagged normal users and missed real fraud.

No one on the team understood the underlying data dynamics.

✅ Lesson: Blog code ≠ production-ready. Validate with your own data.

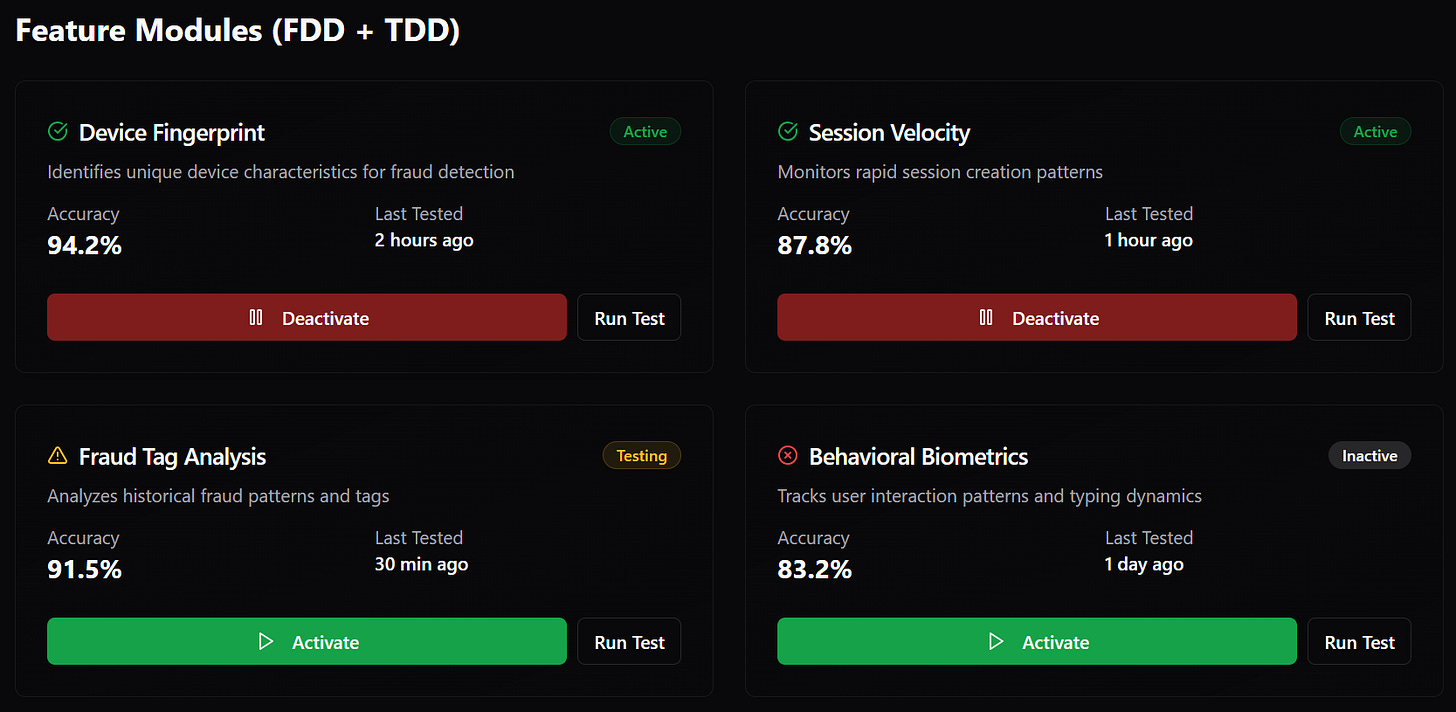

4.4) Feature + Test Driven Development (FDD + TDD)

CRED’s team found success through a structured and modular approach.

They first identified key fraud signals like session frequency and device anomalies. Each component was built to be testable, and instead of deploying a complex system all at once, they added model layers gradually for better control and evaluation.
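To make "each component was built to be testable" concrete, the sketch below treats each fraud signal as a small pure function with its own test, and combines them in a first, deliberately simple scoring layer. The function names, the one-hour window, and the session limit are assumptions made for illustration.

```python
from datetime import datetime, timedelta

def session_frequency(session_starts, now, window=timedelta(hours=1)):
    """Number of sessions started within the trailing window."""
    return sum(1 for started in session_starts if now - window <= started <= now)

def is_device_anomaly(device_fingerprint, known_devices):
    """True if the device has not been seen for this user before."""
    return device_fingerprint not in known_devices

def risk_score(session_starts, now, device_fingerprint, known_devices,
               max_sessions_per_hour=5):
    """First layer: one point per triggered signal. Later model layers
    could be added behind the same interface and tested the same way."""
    score = 0
    if session_frequency(session_starts, now) > max_sessions_per_hour:
        score += 1
    if is_device_anomaly(device_fingerprint, known_devices):
        score += 1
    return score

def test_signals():
    now = datetime(2024, 1, 1, 12, 0)
    starts = [now - timedelta(minutes=m) for m in (5, 20, 90)]
    assert session_frequency(starts, now) == 2          # 90 min ago is outside the window
    assert is_device_anomaly("new-device", {"old-device"})
    assert risk_score(starts, now, "old-device", {"old-device"}) == 0

test_signals()
```

Because each signal is tested in isolation, a regression in one of them shows up as a failing unit test instead of a production incident.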

✅ Lesson: ML is just software. Make it modular, testable, and incremental.

5) Summary

Building machine learning systems is not just about picking the best model, but about aligning teams, setting realistic goals, and solving the right problem. Whether it’s Zomato refining dish recommendations or CRED spotting fraud, both focused on tight feedback loops and practical delivery.

These case studies highlight that successful ML projects thrive on collaboration, clear scope, and production readiness. It is the discipline in execution, not the complexity of the model, that ultimately drives real-world impact.

To help illustrate these concepts, a video link is attached.

In the next article, we will discuss how to put these ideas into practice in production.