𝗚𝗼𝗼𝗴𝗹𝗲’𝘀 𝗚𝗲𝗺𝗺𝗮 𝟯: 𝗔 𝗚𝗮𝗺𝗲-𝗖𝗵𝗮𝗻𝗴𝗲𝗿 𝗳𝗼𝗿 𝗗𝗶𝘀𝘁𝗶𝗹𝗹𝗲𝗱 𝗔𝗜 𝗠𝗼𝗱𝗲𝗹𝘀 𝗥𝘂𝗻𝗻𝗶𝗻𝗴 𝗟𝗼𝗰𝗮𝗹𝗹𝘆

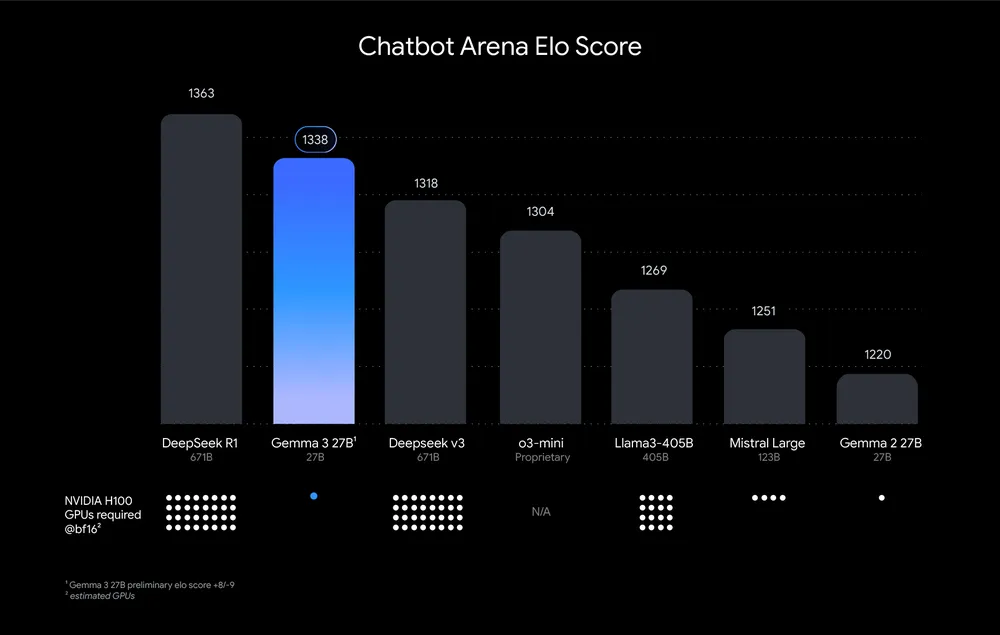

Google's recent launch of 𝗚𝗲𝗺𝗺𝗮 𝟯 has set a new benchmark for efficiency in AI. The 𝟭𝗕-𝗽𝗮𝗿𝗮𝗺𝗲𝘁𝗲𝗿 𝗺𝗼𝗱𝗲𝗹 is particularly impressive: it responds quickly and in detail, even when running locally on a MacBook with just 8 GB of RAM.

Gemma's pre-training and post-training processes were optimized using a combination of 𝗱𝗶𝘀𝘁𝗶𝗹𝗹𝗮𝘁𝗶𝗼𝗻, 𝗿𝗲𝗶𝗻𝗳𝗼𝗿𝗰𝗲𝗺𝗲𝗻𝘁 𝗹𝗲𝗮𝗿𝗻𝗶𝗻𝗴, and model merging. This approach results in enhanced performance in math, coding, and instruction following.

This breakthrough raises an important question: 𝘊𝘢𝘯 𝘸𝘦 𝘳𝘦𝘱𝘭𝘢𝘤𝘦 𝘈𝘗𝘐 𝘤𝘢𝘭𝘭𝘴 𝘸𝘪𝘵𝘩 𝘭𝘰𝘤𝘢𝘭𝘭𝘺 𝘥𝘦𝘱𝘭𝘰𝘺𝘦𝘥 𝘓𝘓𝘔𝘴? Many organizations restrict access to cloud-based AI models due to data security concerns, fearing sensitive information could be exposed to third parties like OpenAI or DeepSeek.
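To make the idea of swapping a cloud API call for a local one concrete, here is a minimal sketch. It assumes Gemma 3 is served on the same machine by Ollama on its default port, with the `gemma3:1b` model already pulled. Ollama is not mentioned above and is only one possible runtime; the function names `build_payload` and `ask_local_model` are illustrative.

```python
import json
import urllib.request

# Assumption: an Ollama server is running locally on its default port
# and the "gemma3:1b" model has already been pulled.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = "gemma3:1b") -> dict:
    """Build the JSON body for a single, non-streaming completion."""
    return {"model": model, "prompt": prompt, "stream": False}


def ask_local_model(prompt: str, model: str = "gemma3:1b", url: str = OLLAMA_URL) -> str:
    """Send the prompt to the local server and return the generated text.

    The request goes to localhost, so the prompt is not sent to a third party.
    """
    body = json.dumps(build_payload(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read().decode("utf-8"))["response"]
```

With the server running, `ask_local_model("Summarize this contract clause: ...")` would return the model's answer as a string. Moving to a larger variant should only require changing the `model` argument, assuming that variant is installed locally.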

With Gemma 3 offering such remarkable performance at a smaller scale, local deployment becomes a viable alternative. Organizations could even scale up to the 27B-parameter version for enhanced capabilities, all while maintaining data privacy.
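A rough back-of-the-envelope calculation shows why the 1B model fits on an 8 GB laptop while the 27B model needs far more memory. The sketch below counts only the weights, using nominal parameter counts and assumed precisions of 16, 8, and 4 bits per weight. It ignores the KV cache, activations, and runtime overhead, so real usage will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Nominal parameter counts; the bit widths are illustrative assumptions.
for name, params in [("1B", 1e9), ("27B", 27e9)]:
    for bits in (16, 8, 4):
        print(f"Gemma 3 {name} at {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

By this estimate the 1B model needs about 2 GB at 16-bit and 0.5 GB at 4-bit, while the 27B model needs about 54 GB at 16-bit and 13.5 GB at 4-bit, which points to workstation or server hardware rather than an entry-level laptop.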

As AI continues to evolve, 𝗰𝗼𝘂𝗹𝗱 𝗹𝗶𝗴𝗵𝘁𝘄𝗲𝗶𝗴𝗵𝘁 𝘆𝗲𝘁 𝗽𝗼𝘄𝗲𝗿𝗳𝘂𝗹 𝗺𝗼𝗱𝗲𝗹𝘀 𝗹𝗶𝗸𝗲 𝗚𝗲𝗺𝗺𝗮 𝟯 𝗿𝗲𝘀𝗵𝗮𝗽𝗲 𝗲𝗻𝘁𝗲𝗿𝗽𝗿𝗶𝘀𝗲 𝗔𝗜 𝗮𝗱𝗼𝗽𝘁𝗶𝗼𝗻?

For more AI and machine learning insights, explore 𝗩𝗶𝘇𝘂𝗮𝗿𝗮’𝘀 𝗔𝗜 𝗡𝗲𝘄𝘀𝗹𝗲𝘁𝘁𝗲𝗿: https://www.vizuaranewsletter.com/?r=502twn