Decision tree theory for all

Everything you need to know as a beginner

You might have heard (or seen people post on LinkedIn) that XGBoost is an incredibly good ML model that can solve complex industry level problems.

At its core, XGBoost consists of a bunch of decision trees.

But decision trees are so simple. Then how are they so impactful? How can they be used in complex problems? In fact decision trees are more important than convolutional neural networks in most industry level problems.

If you can pick and learn 3 things from ML, learn gradient descent, decision tree and XGBoost. You will be better that 99% of the folks out there.

Okay enough of the big picture stuff. Let us learn about decision trees in this article.

What is a decision tree?

A Decision Tree is a predictive model that maps decisions and their possible outcomes in a tree-like structure

It is used for both classification and regression tasks.

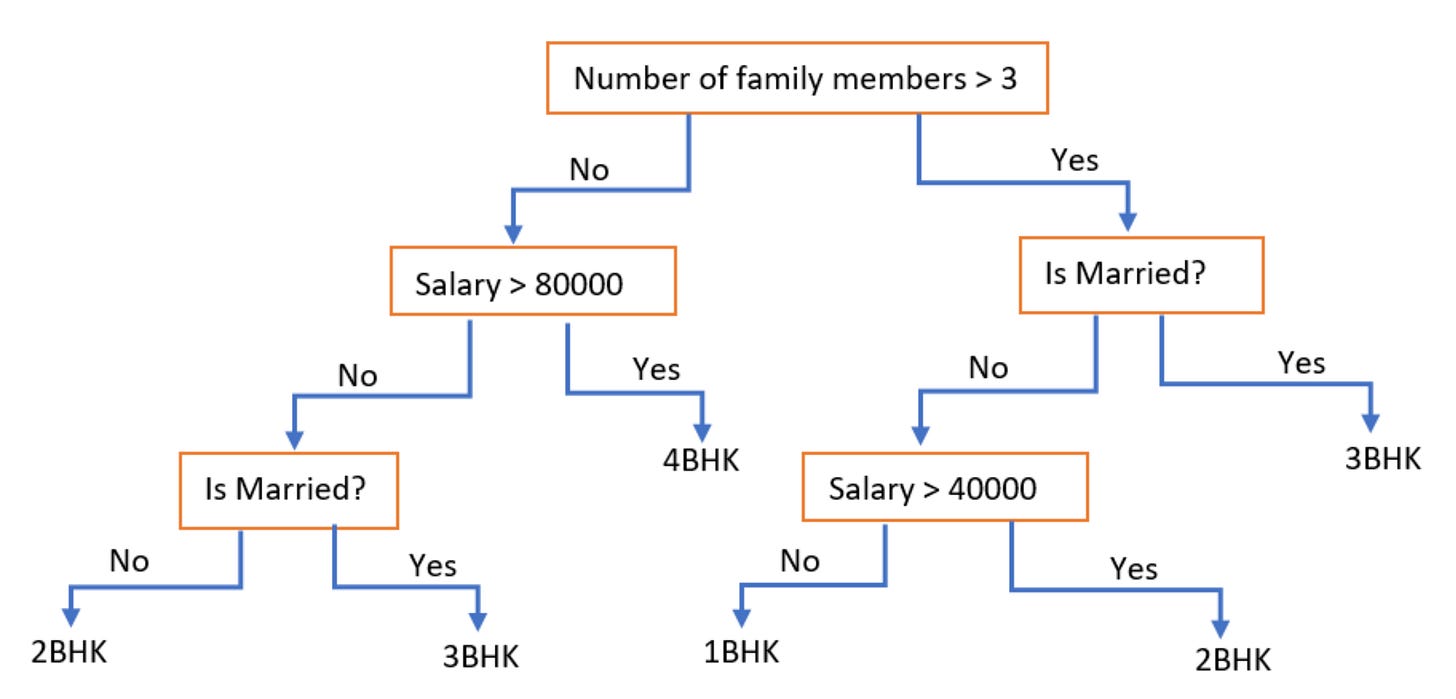

Imagine a bank wants to decide whether to approve a loan application.

Input features: Income, Credit Score, Age.

Output: Approve or Reject.

A decision tree helps split these features step by step to make the decision.

Structure of a decision tree

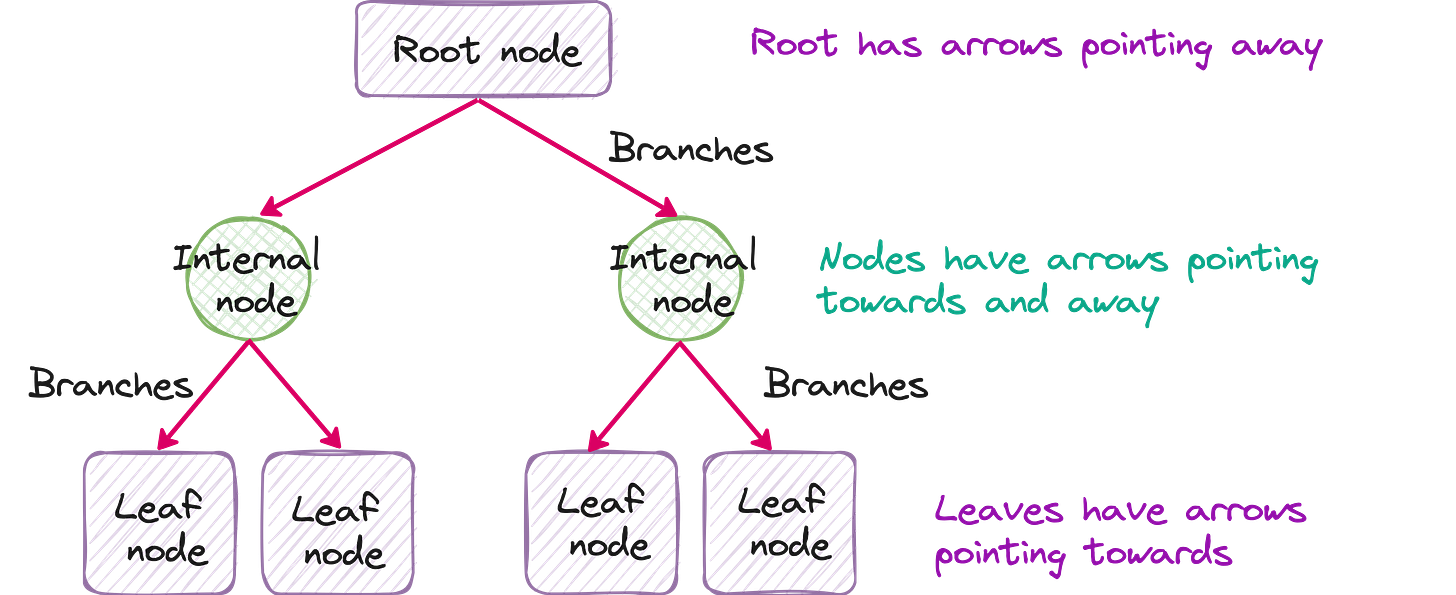

Normal tree has root, branches and leaves. Decision tree also has those. Except, decision tree is upside down. Root is at the top and leaves are at the bottom.

Root Node: Represents the entire dataset and is split into two or more homogeneous sets.

Internal Nodes: Represent decision points based on feature conditions.

Leaf Nodes: Represent the final output (class or value).

Let us solve a problem using decision tree

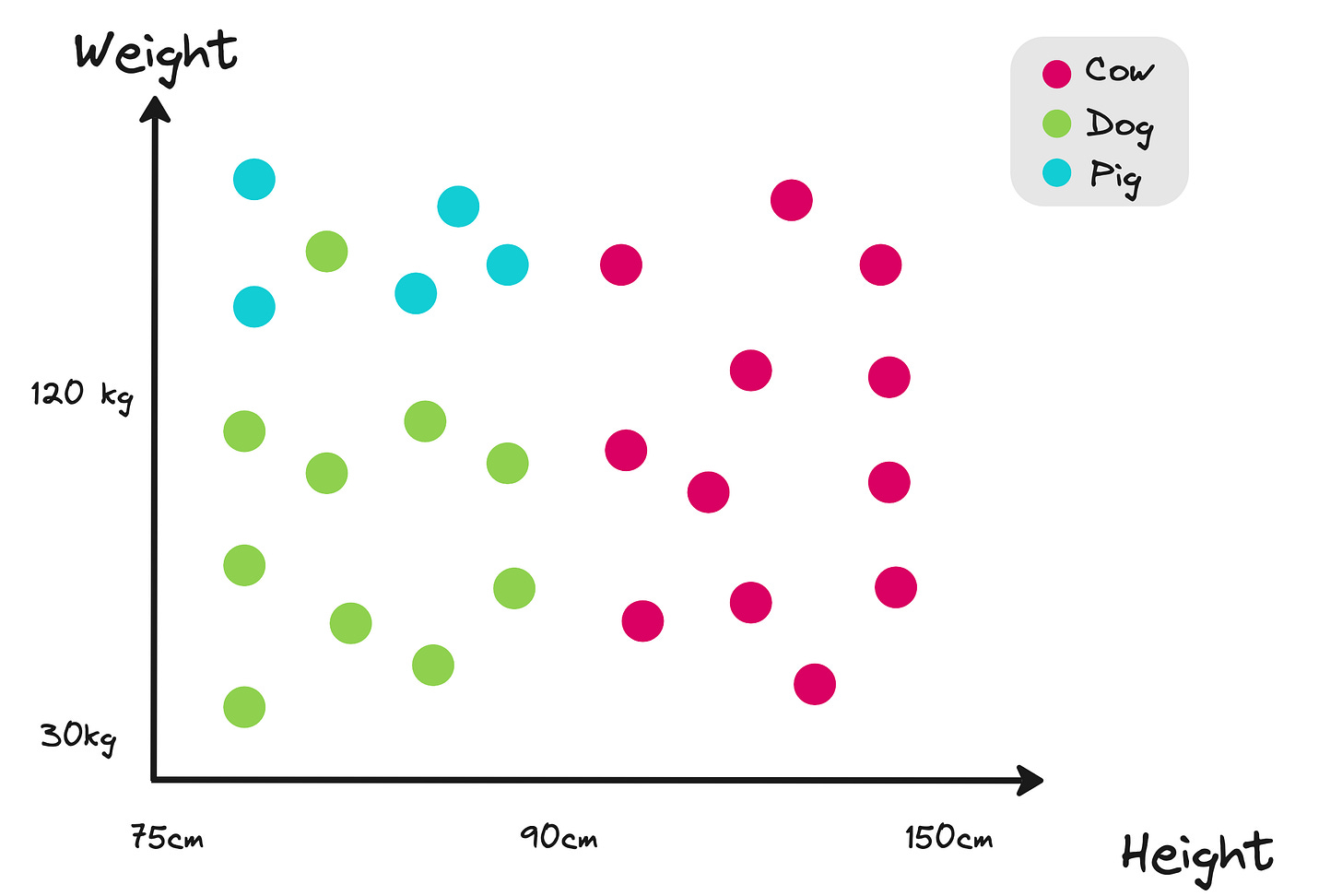

Say you want to classify animals into cow, dog or pig based on their height and weight.

At the root node you have all the data points. What feature will you use to create branches from the root node? Weight or height? What is the rationale? This is when entropy enters the pic.

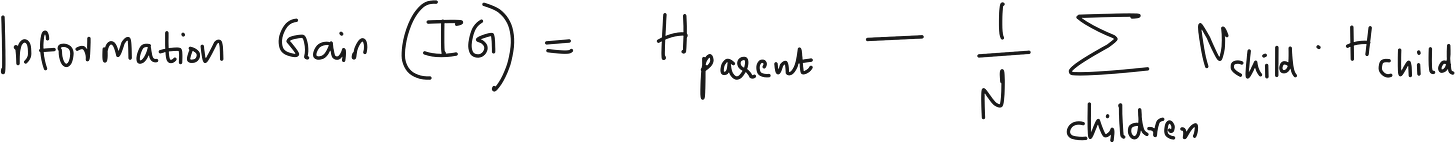

Entropy

Entropy measures the amount of information of some variable or event.

Information gain measures an amount the information that we gain. It does so using entropy.

Subtract from the entropy of our data before the split the entropy of each possible partition thereafter.

We then select the split that yields the largest reduction in entropy, or equivalently, the largest increase in information.

Our goal is to maximize the information gain or minimize the entropy while splitting the root node to 2 branches.

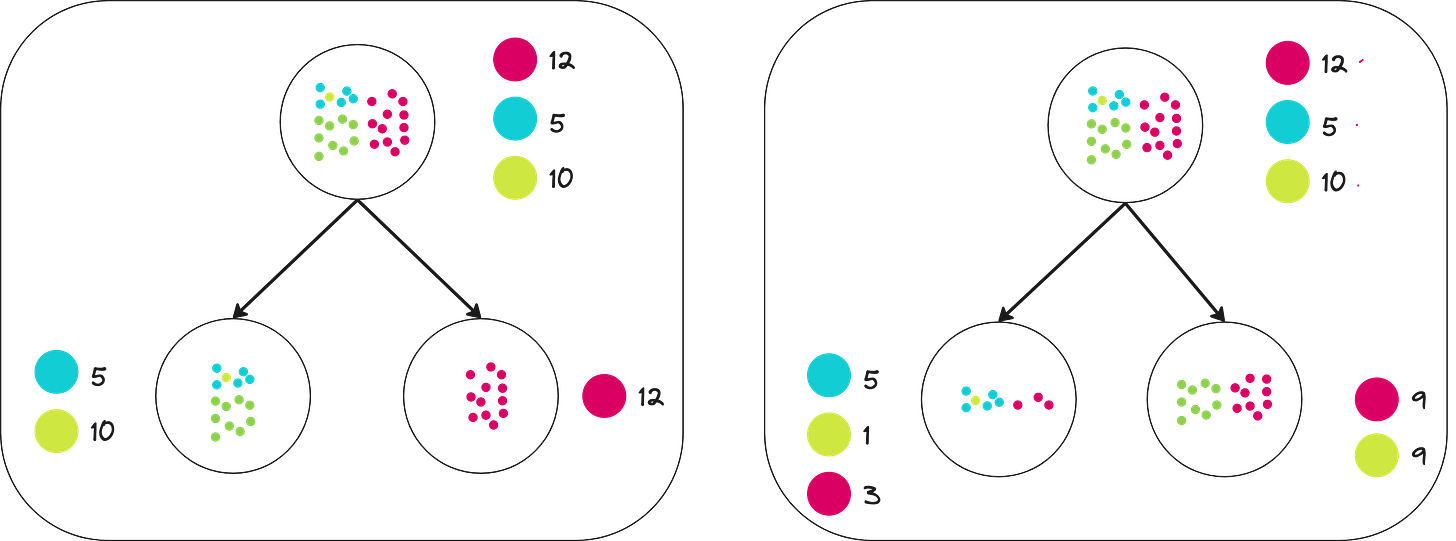

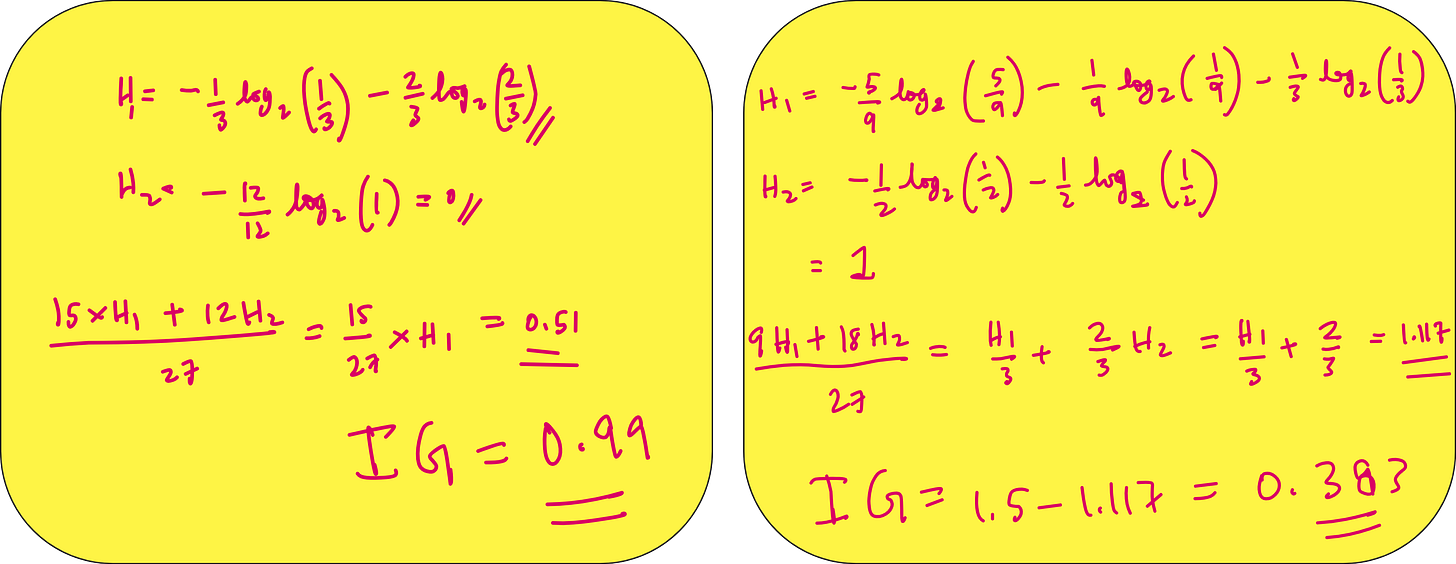

Which of these splits is better?

Consider splitting using one of the 2 conditions.

Weight < 120kg

Height > 90cm

Which one is a better choice?

You decide based on entropy. Whichever split results in maximum entropy reduction, you go with that.

So let us calculate entropy reduction or information gain in both cases.

The information gain is higher when you split like what is shown on the left. So you go ahead with that.

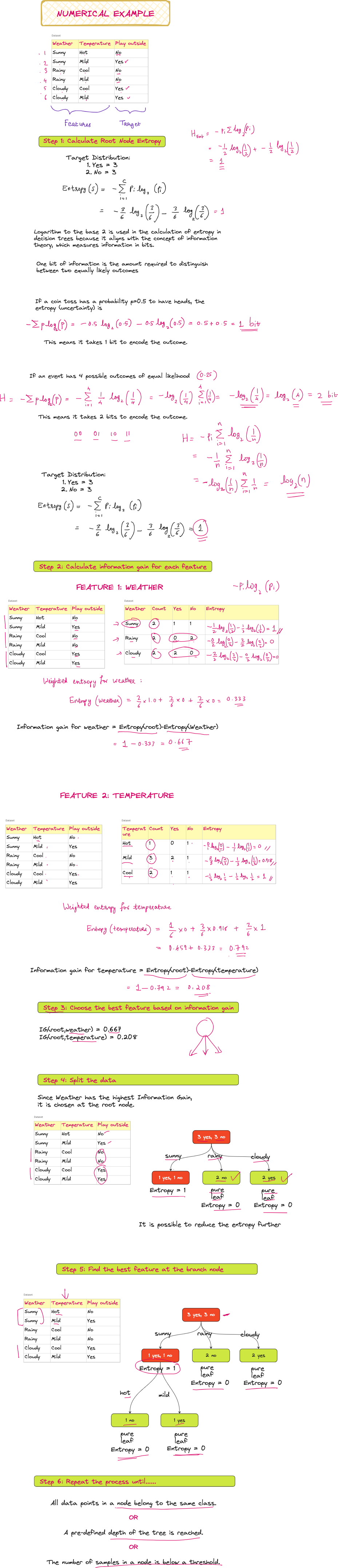

Steps involved in building a decision tree

Split the Data: At each node, the dataset is split based on a feature that provides the best entropy reduction

Recursively repeat the process continues until one of following is met:

All data points in a node belong to the same class.

A pre-defined depth of the tree is reached.

The number of samples in a node is below a threshold.

Decision tree is all about nodes. Your thought starts from the root node. Then you decide what feature and what threshold you want to use to split the root note to 2 or more children. If any child node contain only 1 category or class from dataset, that is a pure leaf. Once you have pure leaf for a node, you don’t split that node. Ideally, if overfitting was not a problem, then you goal is to convert all leaves into pure leaves.

Pure leaves have zero entropy and zero Gini impurity. We will explore Gini impurity in more detail in another article.

MLU decision tree

I will be failing in my duty to teach you decision tree if I don’t you you this gif recorded from MLU: https://mlu-explain.github.io/decision-tree/

Basically you need a good tree that can learn general pattern. Not a great tree that beats entropy to death (aka entropy = zero) and learn all the noise in the training data and scores utter poorly in testing data.

I have recorded a full-blown lecture on this topic for Vizuara’s YouTube channel. Do check this out: