Contrastive learning for creating embedding models

Learn the basics of contrastive learning

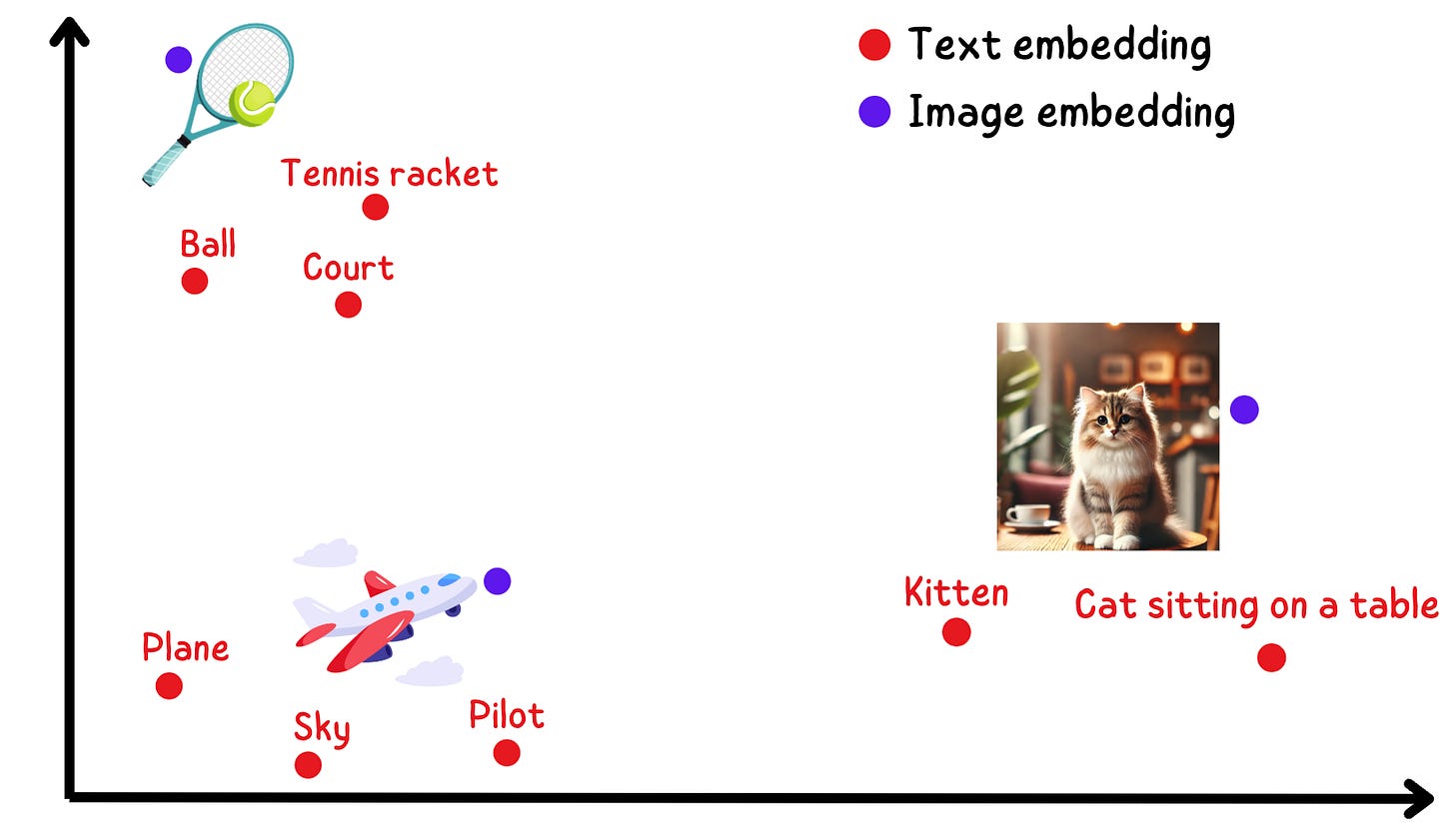

1. Embeddings

The process of converting text or images into numerical representations is referred to as “embedding” the input.

The resulting numerical representations are called embeddings.

We need embeddings because computers cannot directly understand images or text.

We need images or text in a numerical format so that the computer/AI can understand them.

Embeddings serve as a bridge between the text/image and its numerical representation.

These numerical representations happen to be vectors.

2. Good vs Bad embeddings

Creating embeddings is not as simple as taking images or text and randomly creating vectors out of them.

Why?

One way to think of an embedding is as a compressed representation of the underlying image or text.

If that’s the case, then the embeddings (the vectors) should carry some information about what the image or text represents semantically.

The embeddings for “my cat is sick” and “that is a cute kitten” need to be “closer” to each other.

“The ship is sinking” and “the lizard climbs up the wall” have no semantic similarity, and their embeddings should be “farther” from each other.

The notion of “closer” and “farther” in vector space is quantified by metrics like Euclidean distance and cosine similarity.
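To make these two metrics concrete, here is a minimal sketch in plain Python. The three-dimensional vectors are hand-picked, hypothetical values chosen only for illustration; they are not the output of any real embedding model.

```python
import math

def euclidean_distance(a, b):
    # Straight-line distance between two vectors: smaller means closer.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    # Cosine of the angle between two vectors: larger means more similar.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings (made-up numbers, for illustration only)
cat_is_sick = [0.9, 0.8, 0.1]      # "my cat is sick"
cute_kitten = [0.8, 0.9, 0.2]      # "that is a cute kitten"
ship_sinking = [0.1, 0.2, 0.9]     # "the ship is sinking"

print("cat vs kitten:", euclidean_distance(cat_is_sick, cute_kitten),
      cosine_similarity(cat_is_sick, cute_kitten))
print("cat vs ship:  ", euclidean_distance(cat_is_sick, ship_sinking),
      cosine_similarity(cat_is_sick, ship_sinking))
```

With these toy numbers, the two cat sentences have a smaller Euclidean distance and a larger cosine similarity than the cat and ship sentences, which is the behaviour we want from good embeddings.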

Thus, good embeddings satisfy the following:

- Embeddings of similar text/images need to be “closer” in the vector space.
- Embeddings of dissimilar text/images need to be “farther” in the vector space.

3. Contrastive learning

Contrastive learning is a method that helps us create “good” embeddings.

The aim of contrastive learning is to train an embedding model such that similar text/images are close in vector space **and dissimilar text/images are farther in vector space**.

Why did I mark “and dissimilar text/images are farther in vector space” in bold?

Because that’s the main novelty of contrastive learning.

The training objective of contrastive learning is constructed such that the distance between embeddings of similar text/images is minimized and the distance between embeddings of dissimilar text/images is maximized.

That’s why it’s called “contrastive” learning.

There is a contrast within the training objective: we are minimizing something and maximizing something else at the same time.

4. Why is “contrast” so important?

Because, more often than not, we get more information when a question is framed as a contrast.

Let’s consider two examples from the book “Hands-On Large Language Models”.

Text based example:

Let’s take an example of a robber who has robbed a bank.

You ask the following question to the robber:

Why did you rob the bank?

The robber says: Because that’s where the money is.

This is not informative at all. What we really wanted to know was what made him rob a bank in the first place.

Instead, if you frame the question as:

Why did you rob a bank instead of obeying the law?

Then the robber may say: Because I am poor and I needed quick money.

You see what happened there?

We introduced contrast in the question: “instead of obeying the law”.

The moment we introduced contrast, we got a much more informative answer.

Image based example:

You show a kid an image of a horse and ask: “Why is this a horse?”

The kid may say: the horse has 4 legs, a tail, it runs very fast and it has fur.

It’s a factually correct answer, but there is one more animal which has exactly the same features.

That animal is a zebra.

Although the kid’s answer was factually correct, it could not distinguish the horse from a zebra.

Instead, suppose we ask: “Why is this a horse and not a zebra?”

Then the kid thinks and says: the horse does not have stripes. Horses have short ears and a long mane.

You see what happened there?

We introduced a contrast in the question again: “and not a zebra”.

Again, we get more information when we pose the question as a contrast.

This is exactly the same with embedding models:

Instead of asking the model to only look for features which make two texts or two images similar (two horses for example), we should also ask the model to look for features which make two texts or two images dissimilar (a horse and a zebra for example).

By providing the contrast between two concepts, the model learns not only the features that define a concept but also the features that do not belong to it.

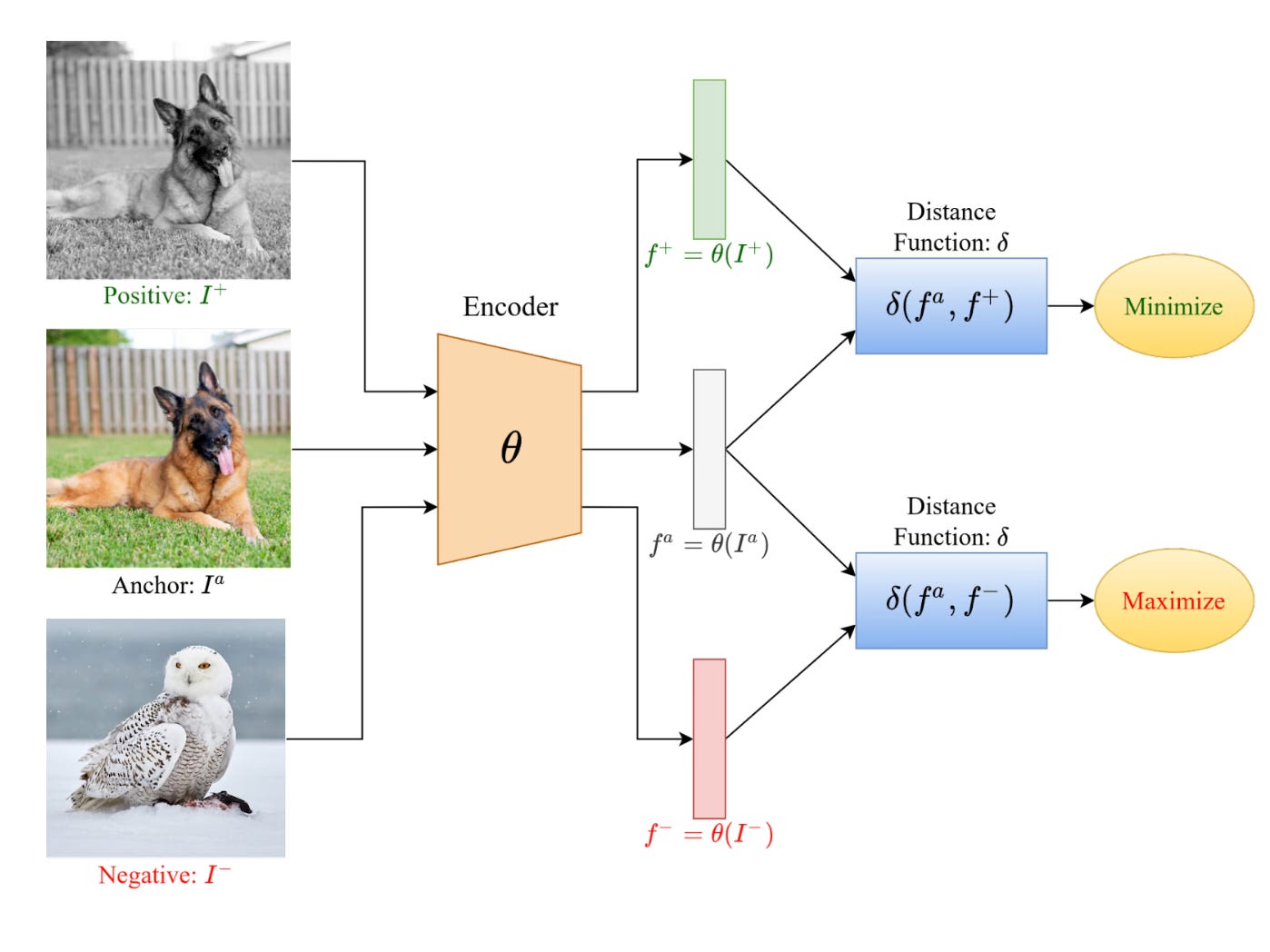

5. The training procedure in contrastive learning

Contrastive learning starts with an anchor text or image.

We then find positive samples related to the anchor.

We also find negative samples, which are dissimilar to the anchor.

The whole goal during the training procedure is to minimize the vector distance between the anchor and its positive samples, and maximize the vector distance between the anchor and its negative samples.

This is done using various types of loss functions such as:

(a) Contrastive Loss

Contrastive loss minimizes the embedding distance between samples of the same class and maximizes the distance for samples from different classes, encouraging a clear separation in the learned embedding space. A margin hyperparameter ensures a minimum separation distance for dissimilar pairs.
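As a rough illustration, here is a minimal sketch of a pairwise contrastive loss in plain Python. It assumes Euclidean distance and the common squared form (squared distance for same-class pairs, squared hinge on the margin for different-class pairs). The function names and the default `margin=1.0` are my own choices for this example.

```python
import math

def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def contrastive_loss(emb_a, emb_b, same_class, margin=1.0):
    d = euclidean_distance(emb_a, emb_b)
    if same_class:
        # Similar pair: any distance at all is penalized.
        return d ** 2
    # Dissimilar pair: penalized only if closer than the margin.
    return max(0.0, margin - d) ** 2

# Toy 2-D embeddings (made-up values)
print(contrastive_loss([0.0, 0.0], [3.0, 4.0], same_class=True))
print(contrastive_loss([0.0, 0.0], [3.0, 4.0], same_class=False, margin=7.0))
print(contrastive_loss([0.0, 0.0], [3.0, 4.0], same_class=False, margin=1.0))
```

Notice that a dissimilar pair that is already farther apart than the margin contributes zero loss, so the model is not pushed to separate such pairs any further.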

(b) Triplet Loss

Triplet loss uses an anchor sample, a positive sample (same class), and a negative sample (different class) to simultaneously minimize the distance between the anchor and positive, and maximize the distance between the anchor and negative. A margin parameter defines the minimum distance offset between similar and dissimilar pairs.
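A minimal sketch of triplet loss for a single triplet could look like this. It assumes plain Euclidean distance (some formulations use squared distance instead), and the default margin value is an arbitrary choice for the example.

```python
import math

def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def triplet_loss(anchor, positive, negative, margin=1.0):
    d_pos = euclidean_distance(anchor, positive)
    d_neg = euclidean_distance(anchor, negative)
    # Zero loss once the negative is at least `margin` farther away
    # from the anchor than the positive is.
    return max(0.0, d_pos - d_neg + margin)

anchor = [0.0, 0.0]
positive = [0.0, 1.0]

# Negative far from the anchor: the constraint is already satisfied.
print(triplet_loss(anchor, positive, [3.0, 4.0]))
# Negative almost as close as the positive: the loss is positive.
print(triplet_loss(anchor, positive, [0.0, 1.5]))
```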

(c) Lifted Structured Loss

Lifted structured loss utilizes all pairwise sample distances within a training batch, optimizing embeddings to reduce intra-class distance while increasing inter-class separation. It includes a hard negative mining term to focus on difficult negative samples, which is smoothed to improve convergence.
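The sketch below shows one way to compute this loss over a small batch in plain Python. It assumes Euclidean distance and the smoothed log-sum-exp form of the hard negative term; the function name and the default margin are my own choices, and the code is written for readability rather than speed.

```python
import math

def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def lifted_structured_loss(embeddings, labels, margin=1.0):
    n = len(embeddings)
    dist = [[euclidean_distance(embeddings[i], embeddings[j]) for j in range(n)]
            for i in range(n)]
    # For each sample, sum exp(margin - distance) over all of its negatives.
    neg_terms = [
        sum(math.exp(margin - dist[i][k]) for k in range(n) if labels[k] != labels[i])
        for i in range(n)
    ]
    total, num_positive_pairs = 0.0, 0
    for i in range(n):
        for j in range(i + 1, n):
            if labels[i] != labels[j]:
                continue
            num_positive_pairs += 1
            denom = neg_terms[i] + neg_terms[j]
            if denom > 0.0:  # skip pairs that have no negatives in the batch
                # Smoothed "hardest negative" term plus the positive-pair distance.
                pair_term = dist[i][j] + math.log(denom)
                total += max(0.0, pair_term) ** 2
    return total / (2 * num_positive_pairs) if num_positive_pairs else 0.0

labels = [0, 0, 1, 1]
well_separated = [[0.0, 0.0], [0.0, 0.1], [10.0, 0.0], [10.0, 0.1]]
mixed_up = [[0.0, 0.0], [10.0, 0.0], [0.0, 0.1], [10.0, 0.1]]

print(lifted_structured_loss(well_separated, labels))
print(lifted_structured_loss(mixed_up, labels))
```

In the first batch each class forms a tight cluster far from the other class, so the loss is zero. In the second batch the classes are interleaved, so the loss is large.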

(d) N-pair Loss

N-pair loss extends triplet loss by comparing an anchor-positive pair against multiple negative samples in the same training step. This generalization improves robustness by considering diverse negative examples and can be equivalent to softmax loss when using one negative sample per class.
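Here is a minimal sketch of N-pair loss for one anchor, assuming the usual formulation where similarity is a dot product between embeddings. The function names are my own.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def n_pair_loss(anchor, positive, negatives):
    pos_score = dot(anchor, positive)
    # Each negative adds exp(negative score - positive score); the loss
    # shrinks as the positive outscores every negative.
    return math.log(1.0 + sum(math.exp(dot(anchor, neg) - pos_score)
                              for neg in negatives))

anchor = [1.0, 0.0]

# The positive is aligned with the anchor, the negatives are not.
good = n_pair_loss(anchor, [1.0, 0.0], [[0.0, 1.0], [-1.0, 0.0]])
# The "positive" points away from the anchor while a negative is aligned.
bad = n_pair_loss(anchor, [-1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])

print(good, bad)
```

This expression is the same as a softmax cross-entropy in which the positive is the correct "class" and the negatives are the other classes, which is where the connection to softmax loss comes from.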

6. Dealing with less training data

One advantage of contrastive learning is that we don’t need a large amount of training data, or even labelled data for that matter.

Let me illustrate this through images.

For each anchor image, you can always create positive samples through data augmentation such as:

- Rotating the anchor image
- Blurring the anchor image
- Changing the brightness/contrast of the anchor image

All other images in the dataset will be negative samples.
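The idea above can be sketched in plain Python. To keep things simple, I assume each image is a tiny grayscale grid stored as a nested list of pixel values from 0 to 255. Only rotation and brightness are shown (blurring is left out for brevity), and the helper names and the brightness offset of 40 are hypothetical choices for this example.

```python
def rotate_90(image):
    # Rotate the image 90 degrees clockwise.
    return [list(row) for row in zip(*image[::-1])]

def adjust_brightness(image, delta):
    # Shift every pixel by `delta`, clamped to the valid 0..255 range.
    return [[min(255, max(0, px + delta)) for px in row] for row in image]

def make_training_samples(dataset):
    samples = []
    for idx, anchor in enumerate(dataset):
        # Positives: augmented views of the anchor itself (no labels needed).
        positives = [rotate_90(anchor), adjust_brightness(anchor, 40)]
        # Negatives: every other image in the dataset.
        negatives = [img for j, img in enumerate(dataset) if j != idx]
        samples.append({"anchor": anchor, "positives": positives, "negatives": negatives})
    return samples

# Three made-up 2x2 grayscale "images"
dataset = [
    [[0, 50], [100, 150]],
    [[200, 220], [240, 255]],
    [[10, 10], [10, 10]],
]

samples = make_training_samples(dataset)
print(len(samples), len(samples[0]["positives"]), len(samples[0]["negatives"]))
```

One caveat with this shortcut: since there are no labels, an image that actually shows the same thing as the anchor can end up being treated as a negative.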

That way, contrastive learning works very well even with limited training data, and even when that data is not annotated.

Thanks for reading!

I hope you intuitively understand and appreciate the concept of contrastive learning now.
