Blinkit Promised 9 Minutes. Then It Started Raining

How grocery delivery reveals the real gaps in real-time ML. From weather to rider delays, distance alone doesn’t deliver.

Table of Contents

Introduction: Understanding the Roots of ML Project Failure

Business-First Thinking: The Sponsored Product Paradigm

Defining Direction Through Stakeholder Conversations

Blinkit: A Real-World Case Study

Demonstrations as a Feedback Engine in the ML Lifecycle

The Distinction Between Planning and Scoping in ML Projects

Experimental Scoping and Timeboxing

Idea Boards: Structuring Thought for Smarter Strategy

The Weighted Matrix for Unbiased Decisions

Conclusion: Aligning Technical Delivery with Business Impact

1. Introduction: Understanding the Roots of ML Project Failure

Most machine learning (ML) projects don’t fail because of bad algorithms or dirty data. They fail because they try solving the wrong problem or solve it the wrong way. In the real world, especially in industries like e-commerce, logistics, and retail, success is determined by alignment with business needs and rapid iterative feedback, not model complexity.

Imagine building a model that predicts which fashion item a user might buy but failing to account for which items generate higher business revenue. The result? Technically correct, but commercially useless. That’s the gap we aim to close.

2. Business-First Thinking: The Sponsored Product Paradigm

Take the example of Amazon. When you search for a product, why is a "Sponsored" label shown at the top?

It’s because businesses pay to push their listings higher, regardless of their ratings. From a user's perspective, this may seem odd. But for Amazon, it’s a critical revenue stream.

Technical takeaway: As an ML engineer, your recommender system must prioritize these items as per business rules. It’s not just about the best model accuracy; it’s about serving agreed-upon deals.
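To make the takeaway concrete, here is a minimal sketch of a re-ranking step that applies a business rule on top of model scores. The `Item` class, the `rank_with_sponsored` function, and the `max_sponsored_slots` parameter are hypothetical names chosen for illustration; this is not how Amazon or any real platform implements sponsored placement.

```python
from dataclasses import dataclass


@dataclass
class Item:
    item_id: str
    relevance: float        # score produced by the recommender model
    sponsored: bool = False  # assumed flag set by the business team


def rank_with_sponsored(items, max_sponsored_slots=2):
    """Place up to `max_sponsored_slots` sponsored items first, then the rest by relevance."""
    by_relevance = sorted(items, key=lambda it: it.relevance, reverse=True)
    sponsored = [it for it in by_relevance if it.sponsored][:max_sponsored_slots]
    pinned_ids = {it.item_id for it in sponsored}
    organic = [it for it in by_relevance if it.item_id not in pinned_ids]
    return sponsored + organic


# Hypothetical catalog: item C has the lowest relevance but is sponsored
catalog = [
    Item("A", 0.91),
    Item("B", 0.85),
    Item("C", 0.40, sponsored=True),
    Item("D", 0.78),
]
ranked_ids = [it.item_id for it in rank_with_sponsored(catalog)]
print(ranked_ids)  # ['C', 'A', 'B', 'D']
```

The model still decides the order within each group; the business rule only decides which group goes first and how many slots it gets.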

Real-life analogy: Think of a school canteen. The food supplier who sponsors school events may get their food displayed at the front of the line. It’s not about taste alone, it’s about the relationship.

3. Defining Direction Through Stakeholder Conversations

Let’s say the product team says, “Show trending items to Gen Z.” What does that mean in technical terms?

Is "trending" based on social media mentions?

Is it based on recent purchases?

How do we categorize "Gen Z" behaviorally?

These require clarifying discussions. Gen Z trends may emerge from Instagram reels or influencer endorsements. As an ML engineer, you need to convert these qualitative insights into quantitative features.

Tip: Don't jump into building. First, ask: “How do you define trend?”
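Once stakeholders answer that question, the definition can be written down as a feature. As a rough sketch, assume they agree that "trending" means "bought more often this week than last week by the target segment." The function name `trend_scores`, the `smoothing` parameter, and the sample purchase logs are all assumptions for illustration.

```python
from collections import Counter


def trend_scores(recent_purchases, baseline_purchases, smoothing=1.0):
    """Score each item by the ratio of its recent purchase count to its baseline count."""
    recent = Counter(recent_purchases)
    baseline = Counter(baseline_purchases)
    # Smoothing avoids division by zero for items with no baseline purchases
    return {
        item: (recent[item] + smoothing) / (baseline[item] + smoothing)
        for item in recent
    }


# Hypothetical purchase logs (item IDs) for the Gen Z segment
last_7_days = ["cargo_pants", "cargo_pants", "cargo_pants", "white_tee", "white_tee"]
previous_7_days = ["white_tee", "white_tee", "white_tee", "cargo_pants"]

scores = trend_scores(last_7_days, previous_7_days)
trending = sorted(scores, key=scores.get, reverse=True)
print(trending)  # ['cargo_pants', 'white_tee']
```

If the product team instead defines a trend by social media mentions, only the input lists change; the point is that the definition is agreed on before the model is built.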

4. Blinkit: A Real-World Case Study

Imagine you're working with a grocery delivery app like Blinkit.

Goal: Predict the estimated delivery time.

Your first model estimates delivery time from nothing but the distance between the store and the destination, and it returns 9 minutes. You demo this.

Stakeholders ask:

What if it rains?

What about traffic congestion?

What if no delivery rider is available?

These are real-time variables your first model ignored.

Real-life analogy: Booking a cab without checking traffic. The map says 10 mins, but in rain it’s 20. Business sees this as a failure to meet user expectations.

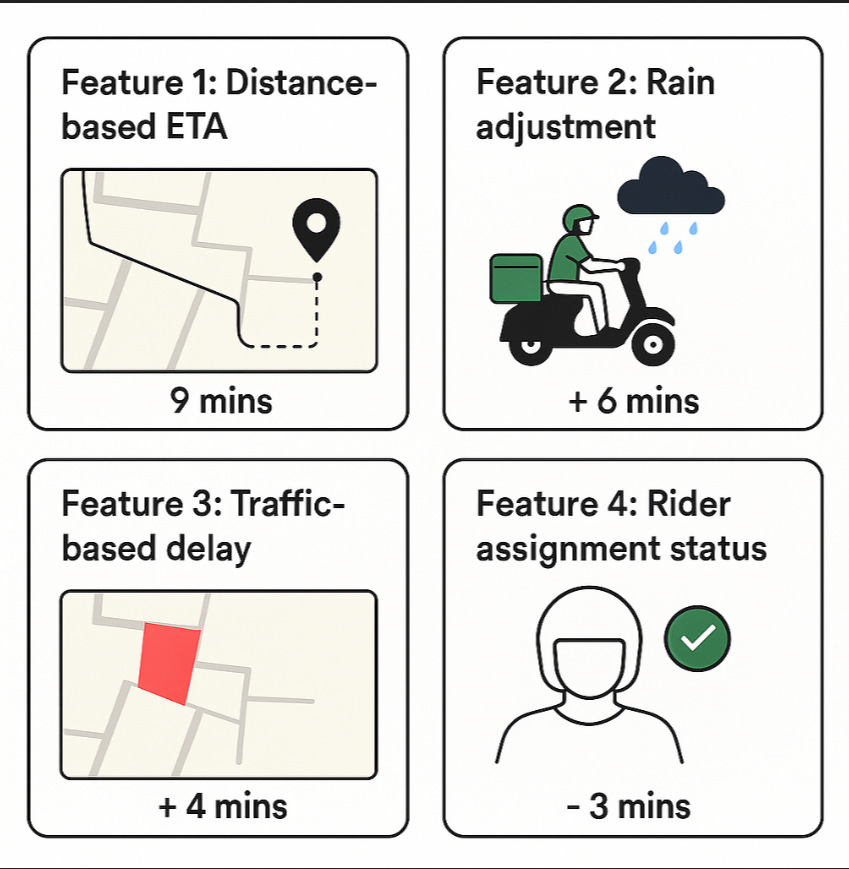

Solution: Break features down.

Feature 1: Distance-based ETA

Feature 2: Rain adjustment

Feature 3: Traffic-based delay

Feature 4: Rider assignment status

Show these as separate demos and collect feedback each time.
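The four features above can be sketched as separate, additive pieces so that each one can be demoed and challenged on its own. Everything here is an assumption for illustration: the `OrderContext` fields, the constants, and the simple multiplier for rain are placeholders, not Blinkit's actual logic or numbers.

```python
from dataclasses import dataclass


@dataclass
class OrderContext:
    distance_km: float
    is_raining: bool = False
    traffic_delay_min: float = 0.0  # assumed to come from a traffic feed
    rider_assigned: bool = True


# Illustrative constants, not real Blinkit numbers
AVG_SPEED_KMPH = 20.0
RAIN_MULTIPLIER = 1.5
RIDER_WAIT_MIN = 5.0


def estimate_eta(ctx):
    """Return the total ETA in minutes and a per-feature breakdown."""
    base = ctx.distance_km * 60.0 / AVG_SPEED_KMPH                      # Feature 1
    rain = base * (RAIN_MULTIPLIER - 1.0) if ctx.is_raining else 0.0    # Feature 2
    traffic = ctx.traffic_delay_min                                     # Feature 3
    rider = 0.0 if ctx.rider_assigned else RIDER_WAIT_MIN               # Feature 4
    breakdown = {"distance": base, "rain": rain, "traffic": traffic, "rider": rider}
    return sum(breakdown.values()), breakdown


dry_total, _ = estimate_eta(OrderContext(distance_km=3.0))
wet_total, wet_parts = estimate_eta(
    OrderContext(distance_km=3.0, is_raining=True, traffic_delay_min=2.0, rider_assigned=False)
)
print(dry_total)  # 9.0
print(wet_total)  # 20.5
print(wet_parts)
```

Returning the breakdown alongside the total is a deliberate choice: in a demo, stakeholders can see exactly how much each factor adds and tell you which number looks wrong.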

5. Demonstrations as a Feedback Engine in the ML Lifecycle

Frequent demos are not optional; they’re essential. Think of them as checkpoints where misalignment can be corrected before it gets costly.

Why demos work:

They show tangible progress.

They allow stakeholders to test your logic.

They expose gaps in understanding.

They avoid the “black box” syndrome.

Example: For a fashion recommender, don’t just show top-5 recommendations with NDCG scores. Let a PM search for “blue sneakers under ₹3000” and evaluate what appears.
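A demo like this needs very little code. The sketch below assumes a hypothetical in-memory catalog where `score` stands in for the recommender's output; `demo_search` and the product names are made up for illustration.

```python
def demo_search(catalog, category, color, max_price):
    """Return matching items, highest model score first, for a stakeholder to review."""
    matches = [
        item for item in catalog
        if item["category"] == category
        and item["color"] == color
        and item["price"] <= max_price
    ]
    return sorted(matches, key=lambda item: item["score"], reverse=True)


# Hypothetical catalog rows; `score` stands in for the recommender's output
catalog = [
    {"name": "Runner X", "category": "sneakers", "color": "blue", "price": 2499, "score": 0.82},
    {"name": "Court Pro", "category": "sneakers", "color": "blue", "price": 3499, "score": 0.95},
    {"name": "Street Lo", "category": "sneakers", "color": "blue", "price": 1999, "score": 0.88},
    {"name": "Trail Max", "category": "sneakers", "color": "red", "price": 2799, "score": 0.90},
]

results = demo_search(catalog, "sneakers", "blue", 3000)
for item in results:
    print(f'{item["name"]}: ₹{item["price"]} (score {item["score"]})')
```

Here the highest-scoring item overall is over budget and never appears, which is exactly the kind of behavior a PM can confirm or question in seconds.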

Real-life analogy: You wouldn’t approve house construction without reviewing blueprints and regular site checks. Similarly, ML needs stakeholder check-ins.

6. The Distinction Between Planning and Scoping in ML Projects

Planning answers the “when” and “how.” It defines milestones, assigns team responsibilities, and sets timelines for each phase of the project.

Scoping tackles the “what” and “why.” It sets clear boundaries: what goes into the MVP, what data is needed, and what’s technically realistic within constraints.

Without proper scoping, teams chase too many features and risk burning time on non-essentials.

Real-life analogy: Scoping is deciding you want a 2BHK with a balcony. Planning is aligning timelines with the builder to make it happen.

7. Experimental Scoping and Timeboxing

Innovation without constraint leads to chaos. Timeboxing your exploration keeps your team from falling into “research rabbit holes.”

Approach:

Week 1: Research and shortlist 3 viable models.

Week 2: Build and evaluate quick prototypes.

Week 3: Review and demo to stakeholders.

This tight loop lets you fail fast, learn fast, and pivot wisely.

Real-life analogy: When testing dishes for a menu, you don’t perfect every recipe. You test, taste, refine, and decide.

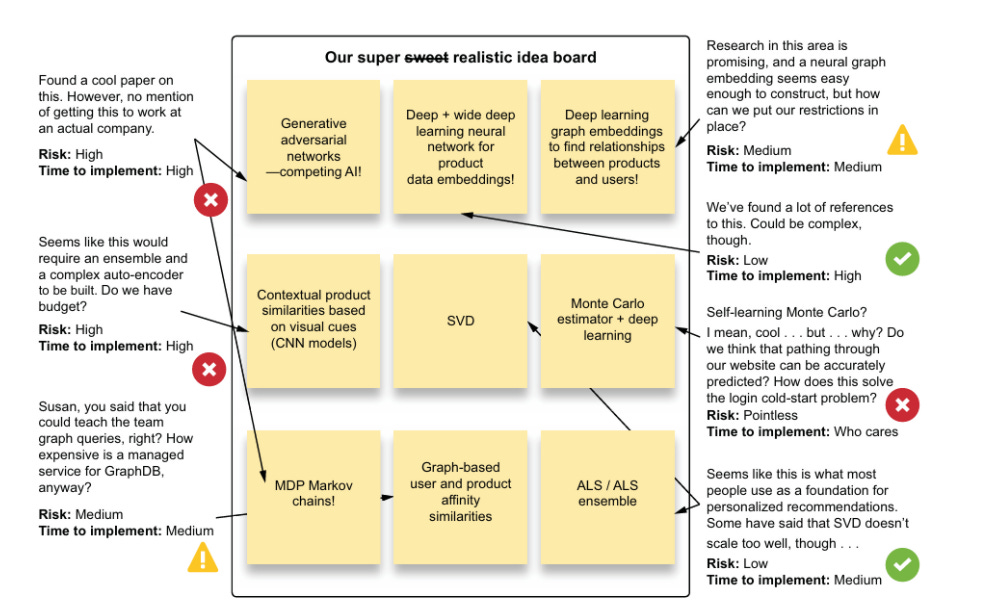

8. Idea Boards: Structuring Thought for Smarter Strategy

Use idea boards to align the whole team on what’s possible, feasible, and worth doing.

Steps:

Brainstorm solutions and write each on a sticky note.

Evaluate each idea based on:

Risk (High/Med/Low)

Time to Implement (Fast/Slow)

Cost and feasibility

Tag, prioritize, and focus on the low-risk, high-impact quadrant.

Source: Machine Learning Engineering in Action by Ben Wilson
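If the sticky notes end up in a spreadsheet, the tagging and prioritizing step can be sketched in a few lines. This is an illustrative sketch only: the `Idea` fields, the `impact` tag, and the sample ideas are assumptions, not taken from the book.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    risk: str    # "low", "med", or "high"
    speed: str   # "fast" or "slow"
    impact: str  # "low" or "high" (assumed tag for the impact axis)


RISK_ORDER = {"low": 0, "med": 1, "high": 2}


def prioritize(ideas):
    """Keep high-impact ideas, ordered by lowest risk and then fastest to implement."""
    shortlisted = [idea for idea in ideas if idea.impact == "high"]
    return sorted(shortlisted, key=lambda i: (RISK_ORDER[i.risk], i.speed != "fast"))


# Hypothetical sticky notes for the delivery-time project
board = [
    Idea("Distance-only baseline", risk="low", speed="fast", impact="low"),
    Idea("Gradient-boosted ETA with weather", risk="med", speed="fast", impact="high"),
    Idea("Rule-based rain multiplier", risk="low", speed="fast", impact="high"),
    Idea("Deep sequence model on GPS traces", risk="high", speed="slow", impact="high"),
]

priority_names = [idea.name for idea in prioritize(board)]
print(priority_names)
```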

Real-life analogy: Planning a group trip by voting on destinations, travel time, and budget, then picking the best match.

9. The Weighted Matrix for Unbiased Decisions

A weighted matrix reduces bias when multiple approaches seem equally good.

How it works:

Assign weights to key decision criteria (e.g., accuracy, scalability, dev time).

Score each model.

Multiply and compare.
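The three steps above can be sketched as a small worked example. The criteria weights (out of 10), the candidate models, and the 1-to-5 scores are invented for illustration; in practice the team would agree on them before scoring.

```python
def weighted_totals(weights, scores):
    """Multiply each criterion score by its weight and sum the results per candidate."""
    return {
        candidate: sum(weights[criterion] * score for criterion, score in criteria.items())
        for candidate, criteria in scores.items()
    }


# Step 1: weights for each criterion (hypothetical, summing to 10)
weights = {"accuracy": 5, "scalability": 3, "dev_time": 2}

# Step 2: score each candidate from 1 (poor) to 5 (excellent); values are made up
scores = {
    "linear_model": {"accuracy": 2, "scalability": 5, "dev_time": 5},
    "gradient_boosting": {"accuracy": 4, "scalability": 4, "dev_time": 4},
    "deep_network": {"accuracy": 5, "scalability": 2, "dev_time": 1},
}

# Step 3: multiply and compare
totals = weighted_totals(weights, scores)
best = max(totals, key=totals.get)
print(totals)  # {'linear_model': 35, 'gradient_boosting': 40, 'deep_network': 33}
print(best)    # gradient_boosting
```

Note that the most accurate candidate does not win here: its low scalability and development-time scores pull its total down, which is the trade-off the matrix is meant to surface.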

Real-life analogy: Think of choosing a city to relocate to for work. City X has the highest salary, but City Y has better healthcare, lower rent, and good schools. The weighted matrix helps you see the whole picture instead of just chasing the biggest paycheck.

By introducing a weighted matrix, you eliminate guesswork and avoid heated debates. You give structure to decision-making. Everyone from engineers to executives gets clarity on why a particular model was chosen.


10. Conclusion: Aligning Technical Delivery with Business Impact

Your model may work in a notebook, but that’s not enough. Success means:

It solves the right problem.

It scales and survives scrutiny.

It gets used by real users.

To build real impact:

Validate assumptions with stakeholders.

Timebox your experiments.

Demo often and early.

Make decisions using frameworks, not ego.

Remember, even the smartest algorithm means nothing if it’s never deployed.

Great ML isn’t just technical. It’s practical. It’s trusted. It’s used.

To understand these concepts in detail, feel free to check out our new video.

In the next article, we will discuss how to validate an MVP and how to convert a prototype into an actual project.

Interested in learning AI/ML LIVE from us?

Check this out: https://vizuara.ai/live-ai-courses
