𝐁𝐮𝐢𝐥𝐝 𝐋𝐋𝐌𝐬 𝐟𝐫𝐨𝐦 𝐬𝐜𝐫𝐚𝐭𝐜𝐡

“ChatGPT” is everywhere: it’s a tool many of us use daily to boost productivity, streamline tasks, and spark creativity. But have you ever wondered how it knows so much and performs well across such diverse fields? Like many, I’ve been curious about how it really works and whether I could build a similar tool to fit specific needs. 🤔

To dive deeper, I found a fantastic resource: “Build a Large Language Model (From Scratch)” by Sebastian Raschka, explained step by step in the insightful YouTube series “Building LLM from Scratch” by Dr. Raj Dandekar (MIT PhD). This combination offers a structured, approachable way to understand the mechanics behind LLMs, and even to try building one ourselves!

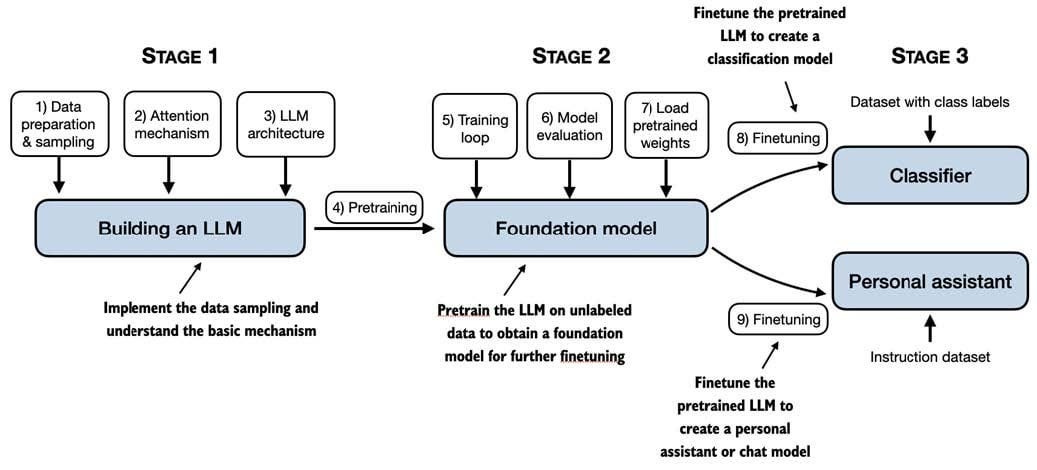

While the architecture of generative language models shown in the figure can seem difficult to understand, I believe that taking it step by step makes it achievable, even for those without a tech background. 🚀

Learning one concept at a time can open the door to this transformative field, and we at Vizuara.ai are excited to take you through the journey, with each step of creating an LLM explained in detail. For anyone interested, I highly recommend going through the following videos:

Lecture 1: Building LLMs from scratch: Series introduction

Lecture 2: Large Language Models (LLM) Basics

Lecture 3: Pretraining LLMs vs Finetuning LLMs

Lecture 4: What are transformers?

Lecture 5: How does GPT-3 really work?

Lecture 6: Stages of building an LLM from Scratch

Lecture 7: Code an LLM Tokenizer from Scratch in Python

Lecture 8: The GPT Tokenizer: Byte Pair Encoding

Lecture 9: Creating Input-Target data pairs using Python DataLoader

Lecture 10: What are token embeddings?

Lecture 11: The importance of Positional Embeddings

Lecture 12: The entire Data Preprocessing Pipeline of Large Language Models (LLMs)

Lecture 13: Introduction to the Attention Mechanism in Large Language Models (LLMs)

Lecture 14: Simplified Attention Mechanism - Coded from scratch in Python | No trainable weights

Lecture 15: Coding the self attention mechanism with key, query and value matrices

Lecture 16: Causal Self Attention Mechanism | Coded from scratch in Python

Lecture 17: Multi Head Attention Part 1 - Basics and Python code

Lecture 18: Multi Head Attention Part 2 - Entire mathematics explained

Lecture 19: Birds Eye View of the LLM Architecture

Lecture 20: Layer Normalization in the LLM Architecture

Lecture 21: GELU Activation Function in the LLM Architecture

Lecture 22: Shortcut connections in the LLM Architecture

Lecture 23: Coding the entire LLM Transformer Block

Lecture 24: Coding the 124 million parameter GPT-2 model

Lecture 25: Coding GPT-2 to predict the next token

Lecture 26: Measuring the LLM loss function

Lecture 27: Evaluating LLM performance on real dataset | Hands on project | Book data

Lecture 28: Coding the entire LLM Pre-training Loop

Lecture 29: Temperature Scaling in Large Language Models (LLMs)

Lecture 30: Top-k sampling in Large Language Models
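To give a flavour of where the series ends up, here is a minimal sketch of the last two topics in the list, temperature scaling and top-k sampling, applied to a vector of next-token scores (logits). It is plain Python rather than the code from the lectures or the book, and the helper names `softmax` and `sample_next_token`, along with the example logits, are hypothetical choices made only for illustration.

```python
import math
import random


def softmax(values):
    """Numerically stable softmax: turns raw scores into probabilities."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Hypothetical helper: pick a next-token index from raw logits.

    top_k keeps only the k highest-scoring tokens; temperature rescales the
    surviving logits before softmax (T < 1 sharpens, T > 1 flattens).
    temperature == 0 is treated as greedy decoding (argmax).
    """
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if top_k is not None and top_k < 1:
        raise ValueError("top_k must be at least 1")

    indices = list(range(len(logits)))

    # Top-k filtering: keep only the k token indices with the largest logits.
    if top_k is not None:
        indices = sorted(indices, key=lambda i: logits[i], reverse=True)[:top_k]

    # Greedy decoding: just return the highest-scoring remaining token.
    if temperature == 0:
        return max(indices, key=lambda i: logits[i])

    # Temperature scaling: divide each logit by T, then normalise.
    probs = softmax([logits[i] / temperature for i in indices])

    # Draw one token index according to those probabilities.
    return rng.choices(indices, weights=probs, k=1)[0]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for a 4-token vocabulary
    print(sample_next_token(logits, temperature=0))  # greedy: prints 0
    rng = random.Random(0)
    # With top_k=2 only tokens 0 and 1 can ever be chosen.
    print(sample_next_token(logits, temperature=0.7, top_k=2, rng=rng))
```

Because dividing by a positive temperature never changes the ranking of the logits, applying top-k before or after scaling keeps the same candidate tokens; the temperature only changes how the probability mass is spread among them.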